Modern AI systems are paradoxical: they can prove mathematical theorems and write complex code, yet fail spectacularly at puzzles any middle schooler could solve. This “jagged frontier” of AI capabilities, where peaks of brilliance tower next to valleys of incompetence, is not just an academic curiosity. It is a ticking time bomb for enterprises betting their future on AI deployment. In this post, we explore Salesforce's SIMPLE benchmark, the Enterprise Bench dataset, and the controversy around Apple's "Illusion of Thinking" paper, showing that current AI progress is indeed highly uneven and hard to measure correctly. LLMs are genuinely capable systems with bizarrely distributed competencies. For organizations navigating this landscape, success requires careful mapping of where AI excels and where it fails catastrophically.

Introduction

So the sense of smell, this is one thing that humans have, and there’s a metaphorical mathematical smell that it’s not clear how to get the AI to duplicate that eventually… If they can pick up a sense of smell, then they could maybe start competing with a human level of mathematicians.

Terence Tao

on Lex Fridman Podcast #472, June 14, 2025

Modern LLM-based AI systems can prove novel mathematical theorems, write flawless code for complex algorithms, and engage in nuanced philosophical discussions. Yet if you ask the same LLM to solve a variation of the fox-chicken-corn river crossing puzzle that any bright middle schooler could figure out in seconds, it can fail spectacularly. Why?

These effects, happening right now with our most advanced AI systems, can reveal something profound about the nature of machine intelligence. We have built intellectual giants with feet of clay—systems that excel at the extraordinary while stumbling over the ordinary.

This strange asymmetry is not just an academic curiosity. As enterprises try to deploy AI for everything from customer service to financial analysis, these “mundane” failures can turn into ticking time bombs. When your AI can discuss quantum mechanics but cannot correctly and, most importantly, reliably route a customer complaint, your AI-based tool can turn into a serious liability at any moment.

Researchers call this effect the “jagged frontier” of AI capabilities. Unlike the smooth progression we might expect—where harder tasks are solved after easier ones—the landscape of AI competence looks more like a ragged mountain range. Peaks of brilliance tower next to valleys of incompetence, with no apparent logic to the topology.

In this post, we explore three recent developments that illustrate this jaggedness: Salesforce's SIMPLE benchmark that systematically exposes how frontier models fail on trivial tasks, the ambitious but troubled Enterprise Bench that attempts to measure real-world readiness, and the delightfully meta controversy around Apple's "Illusion of Thinking" paper—a saga that has spawned rebuttals with AI co-authors and revealed as much about our evaluation methods as about AI limitations.

Most importantly, we will examine what this jaggedness means for the immediate future of AI deployment. Because while researchers debate whether these systems truly “understand” anything, enterprises need to know one thing: will it work reliably enough to bet their business on it?

The answer, as we'll see, is both more complex and more urgent than either the optimists or pessimists would have you believe.

SIMPLE: A Benchmark Born of Frustration

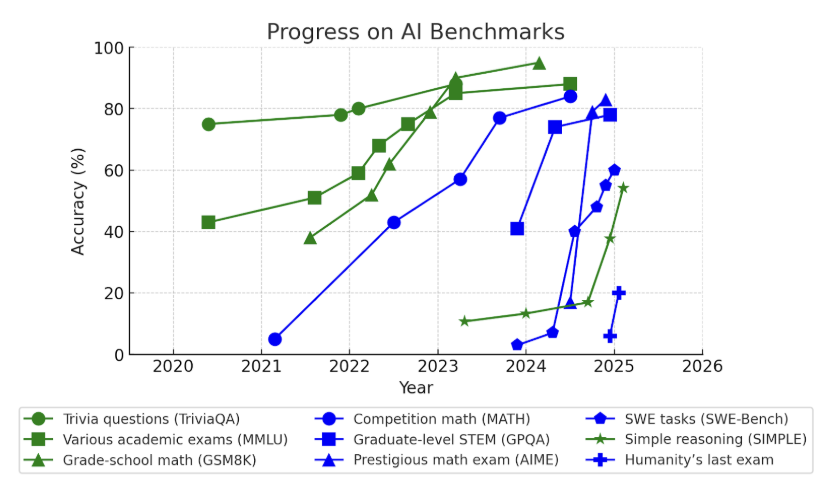

For some time now, researchers have talked about the “jagged technological frontier”, a term used to describe how unevenly AI models help with different tasks, even within white-collar knowledge work. But the full scale of “intelligence jaggedness” goes well beyond the trivial observation that AI will not master all tasks at the same time and with the same quality. In fact, we routinely encounter general-purpose AI models that ace complex, high-level benchmarks yet fail on trivial tasks that humans handle effortlessly.

Recently, Salesforce AI Research decided to systematically expose this inconsistency (Ginart et al., 2025). The researchers created SIMPLE (Simple, Intuitive, Minimal Problem‑solving Logical Evaluation), a publicly released benchmark of 225 short puzzles. Each item is intentionally easy for humans: it is expected to be solved by at least 10–15% of high-schoolers given an hour with pen and paper. It is worth noting how dramatically our definition of “human-easy” has shifted over the last couple of years: in 2022, frontier LLMs could maybe solve middle school math word problems with some degree of consistency...

Rather than chasing “state‑of‑the‑art” brilliance, SIMPLE asks: can frontier models even handle everyday logic robustly? That shift in perspective—studying failure on simple tasks—is key for enterprise-grade reliability.

The puzzles include classic logic scenarios with slight twists. Here is a popular example from SIMPLE:

A man has to get a fox, a chicken, and a sack of corn across a river. He has a rowboat, and it can only carry him and three other things. If the fox and the chicken are left together without the man, the fox will eat the chicken. If the chicken and the corn are left together without the man, the chicken will eat the corn. How does the man do it in the minimum number of steps?

You can easily recognize the classic puzzle, and LLMs can too. But in the classic version, the boat carries only one item, which leads to a multi-step logic solution. Here, the boat can carry all three at once, and the problem trivializes: you just carry over everything in one step. Remarkably, many LLMs, even large reasoning models, still output the 7‑step classic solution—demonstrating a failure to adapt to small but crucial changes in the prompt.
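The twist is easy to check mechanically. Here is a minimal sketch (my own illustration, not part of SIMPLE) of a breadth-first search over the puzzle's states, assuming that each river crossing counts as one step and that the only constraints are the two stated in the prompt. With a one-item boat it finds the classic 7 crossings; with a three-item boat, a single crossing.

```python
from collections import deque
from itertools import combinations
from typing import FrozenSet, Optional, Tuple

ITEMS = frozenset({"fox", "chicken", "corn"})
# Pairs that cannot be left together without the man: (predator, prey).
UNSAFE_PAIRS = [("fox", "chicken"), ("chicken", "corn")]

State = Tuple[FrozenSet[str], str]  # (items on the left bank, side the man is on)


def is_safe(unattended_bank: FrozenSet[str]) -> bool:
    """A bank without the man is safe if it holds no predator-prey pair."""
    return not any(a in unattended_bank and b in unattended_bank for a, b in UNSAFE_PAIRS)


def min_crossings(capacity: int) -> Optional[int]:
    """Breadth-first search for the fewest river crossings; None if unsolvable."""
    start: State = (ITEMS, "left")
    goal: State = (frozenset(), "right")
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (left, man), steps = queue.popleft()
        if (left, man) == goal:
            return steps
        here = left if man == "left" else ITEMS - left
        for k in range(capacity + 1):  # k = 0 means the man rows across alone
            for cargo in combinations(sorted(here), k):
                moved = frozenset(cargo)
                new_left = left - moved if man == "left" else left | moved
                new_man = "right" if man == "left" else "left"
                # The bank the man just rowed away from is now unattended.
                unattended = new_left if new_man == "right" else ITEMS - new_left
                if not is_safe(unattended):
                    continue
                state = (new_left, new_man)
                if state not in seen:
                    seen.add(state)
                    queue.append((state, steps + 1))
    return None


for capacity in (1, 2, 3):
    print(f"boat carries {capacity} item(s): {min_crossings(capacity)} crossing(s)")
```

This prints 7, 3, and 1 crossings respectively: the "classic" answer is only correct for the classic boat.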

Salesforce benchmarked models like OpenAI's o1 and o3-mini-high, DeepSeek’s R1, and other frontier reasoning-style systems (e.g., chain-of-thought-enabled agents). These models excel at Olympiad math and abstract coding, yet on SIMPLE they score only 70–80%, with some open models falling to ~60%. In effect, we have exactly the “idiot savant” situation: models that are brilliant at top-end benchmarks but slip on everyday logic.

This is not mere random hallucination: it is systematic misinterpretation, caused not by complexity but by over-reliance on prototypical narratives embedded in the training data. Models keep reproducing classic answers despite contradictions in the prompt, sometimes reorder logical steps, and often make inferential leaps that leave gaps even a middle-schooler would not.

Basically, it’s a case of overfitting to latent “logic stereotypes” and reasoning templates present in the training set. But here the overfitting is so deeply ingrained that it is not at all clear how to “regularize” against it. SIMPLE results also vary wildly with prompt phrasing: a model that reaches 80% accuracy with one template can gain or lose around 10 percentage points with a different one. This prompt brittleness underscores how fragile the reasoning of frontier models still is.
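Measuring this brittleness is easy to sketch: score the same items under several prompt templates and report the gap between the best and the worst. In the code below, `model` is any hypothetical prompt-to-answer callable standing in for a real LLM API, grading is plain exact match, and `toy_model` is a deliberately brittle stub rather than a real system.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]  # hypothetical: prompt in, answer out


def accuracy_by_template(
    model: Model, templates: Dict[str, str], items: List[Tuple[str, str]]
) -> Dict[str, float]:
    """Exact-match accuracy of one model on the same items under each template."""
    scores = {}
    for name, template in templates.items():
        correct = [model(template.format(question=q)).strip() == answer for q, answer in items]
        scores[name] = sum(correct) / len(correct)
    return scores


def spread_in_points(scores: Dict[str, float]) -> float:
    """Gap between the best and the worst template, in percentage points."""
    return 100 * (max(scores.values()) - min(scores.values()))


# Hypothetical stand-in for an LLM: it "knows" every answer but only replies
# tersely when the prompt ends with "Answer:", so exact match fails otherwise.
FACTS = {"How many legs does a spider have?": "8", "How many sides does a hexagon have?": "6"}


def toy_model(prompt: str) -> str:
    for question, answer in FACTS.items():
        if question in prompt:
            return answer if prompt.endswith("Answer:") else f"I believe it is {answer}."
    return ""


templates = {"terse": "{question}\nAnswer:", "chatty": "Could you help me with this? {question}"}
scores = accuracy_by_template(toy_model, templates, list(FACTS.items()))
print(scores, spread_in_points(scores))
```

Exact match is itself a source of brittleness here (the stub has the right answer under both templates), so a real harness would typically normalize answers before comparing them.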

How else can we measure the jagged frontier of AI model capabilities?

Enterprise Bench: When LLMs Meet the Corporate World

While SIMPLE exposes failures on toy problems, the real test comes when AI meets the messy reality of corporate life. Failures on simple tasks may prove dangerous in the real world. Most enterprise tasks—invoice triage, meeting-note summary, routing an account inquiry—are not about graduate-level science reasoning or mathematical proofs, but they demand precision, robust understanding, and consistent reading of context.

If a model fails on one in ten attempts at a simple task, the result will be catastrophic at scale. Users do not care about models scoring high on math Olympiads; they care about the model not screwing up today's meeting notes. Enterprises have vastly different risk tolerance than academic labs. A logic puzzle mistake might seem cute, but in production, a single misassignment of credit or policy status can mean customer loss or compliance breaches. Jagged intelligence erodes trust, and lost trust is far more damaging than minor inaccuracies.
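A back-of-the-envelope calculation shows why. Assuming errors are independent (real errors are often correlated, so treat this as a rough model), a workflow that chains several steps succeeds only if every step does, and expected failures grow linearly with volume. The daily volume below is a hypothetical figure, not data from any deployment.

```python
def workflow_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent errors."""
    return step_accuracy ** steps


def expected_failures(task_accuracy: float, tasks: int) -> float:
    """Expected number of failed tasks out of `tasks` attempts."""
    return (1 - task_accuracy) * tasks


DAILY_TASKS = 10_000  # hypothetical volume, for illustration only

for accuracy in (0.90, 0.99, 0.999):
    print(
        f"per-step accuracy {accuracy:.1%}: "
        f"5-step workflow succeeds {workflow_success_rate(accuracy, 5):.1%} of the time, "
        f"~{expected_failures(accuracy, DAILY_TASKS):,.0f} failures per {DAILY_TASKS:,} tasks"
    )
```

At 90% per-step accuracy, a five-step workflow completes correctly only about 59% of the time (0.9^5 ≈ 0.59), and 10,000 tasks produce roughly 1,000 failures.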

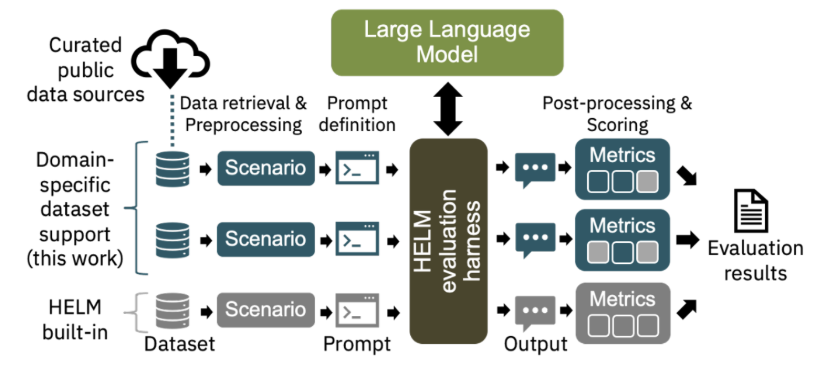

To bridge this gap, Zhang et al. (2024), in a collaborative effort from IBM Research, Adobe, the MIT-IBM Watson AI Lab, and Stanford, developed Enterprise Bench, a systematic attempt to drag LLM evaluation out of the ivory tower and into the corner office.

The fundamental goal of Enterprise Bench is refreshingly straightforward: to benchmark LLMs on enterprise datasets across various tasks. The researchers identified a gap in the LLM ecosystem: while standard benchmarks like MMLU, BIG-bench, and HELM may do a great job of testing general capabilities, they essentially give LLMs the equivalent of a liberal arts education when what enterprises need are MBAs, JDs, and domain-specific expertise. The proposed evaluation framework encompasses 25 publicly available datasets from diverse enterprise domains such as financial services, legal, cybersecurity, and climate and sustainability.

Enterprise Bench extends Stanford's HELM (Holistic Evaluation of Language Models) framework and inherits its fairly straightforward evaluation structure.
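To make that structure concrete, here is a minimal evaluation loop that loosely mirrors HELM's decomposition into scenarios (datasets), prompt adaptation, and metrics. The class and function names are hypothetical and are not HELM's actual API; `model` is any prompt-to-text callable, and the example statements are made up. Giving each task its own prompt template is a deliberate choice: it sidesteps the same-prompts-for-every-scenario limitation that Zhang et al. themselves acknowledge.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Model = Callable[[str], str]  # hypothetical: prompt in, completion out


@dataclass
class Example:
    text: str
    label: str


@dataclass
class Task:
    name: str
    examples: List[Example]
    prompt_template: str  # per-task prompt with a "{text}" placeholder
    metric: Callable[[str, str], float]


def exact_match(prediction: str, label: str) -> float:
    return float(prediction.strip().lower() == label.strip().lower())


def evaluate(model: Model, tasks: List[Task]) -> Dict[str, float]:
    """For each task: adapt every example into a prompt, query the model, average the metric."""
    results = {}
    for task in tasks:
        scores = [
            task.metric(model(task.prompt_template.format(text=ex.text)), ex.label)
            for ex in task.examples
        ]
        results[task.name] = sum(scores) / len(scores)
    return results


# Usage: one made-up task and a trivial stand-in "model" that always says "Positive".
sentiment = Task(
    name="earnings_call_sentiment",
    examples=[
        Example("Revenue grew 12% and margins expanded.", "positive"),
        Example("We are withdrawing our full-year guidance.", "negative"),
    ],
    prompt_template="Is this earnings call statement positive or negative?\n{text}\nAnswer:",
    metric=exact_match,
)


def always_positive(prompt: str) -> str:
    return "Positive"


print(evaluate(always_positive, [sentiment]))  # {'earnings_call_sentiment': 0.5}
```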

Let me give a few examples of the actual tasks that comprise Enterprise Bench. It is a very diverse dataset, covering several domains:

- financial domain, with sample tasks such as:

  - earnings call sentiment analysis: models must classify whether statements from earnings calls are positive or negative; it sounds simple until you realize that "We're exploring new opportunities for growth" could mean either "We're innovating" or "Our current business is tanking";

  - KPI-EDGAR: extract key performance indicators (KPIs) and their values from SEC filings, then categorize them correctly; this involves parsing convoluted statements like "Our EBITDA, excluding one-time charges related to our strategic realignment initiative, increased by 3.2% YoY to $847.3M";

  - ConvFinQA: multi-turn conversational question answering involving numerical reasoning, akin to teaching an AI to be a financial analyst able to discuss the details with a CFO;

- legal domain, where subsets include:

  - UNFAIR-ToS: identifying unfair contractual terms in terms of service documents; here the model needs to spot when companies are essentially saying "We can change anything, anytime, and you can't do anything about it" in legalese;

  - CaseHOLD: multiple-choice questions about legal holdings, similar to law school exams;

- climate and sustainability domain, with, e.g., the Reddit Climate Change Classification subset for sentiment analysis of climate discussions;

- cybersecurity, with CTI-to-MITRE, i.e., mapping cyber threat intelligence to the MITRE ATT&CK framework, and so on.
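To see why the KPI extraction task is harder than it looks, consider a deliberately naive regex baseline on the EBITDA example above. This is a hypothetical illustration, not the benchmark's annotation format or scoring: the pattern recovers the numbers, but it can only flag, not interpret, the qualifier about one-time charges.

```python
import re
from typing import Optional

# Hypothetical pattern: "Our <KPI> ... increased|decreased by <X>% ... to $<Y><unit>".
KPI_PATTERN = re.compile(
    r"Our (?P<kpi>[A-Z][A-Za-z]+)\b.*?(?P<direction>increased|decreased) by "
    r"(?P<change>\d+(?:\.\d+)?)%.*?to \$(?P<value>\d+(?:\.\d+)?)(?P<unit>[KMB])"
)
UNIT_SCALE = {"K": 1e3, "M": 1e6, "B": 1e9}


def extract_kpi(sentence: str) -> Optional[dict]:
    """Pull a KPI name, percentage change, and dollar value out of one sentence."""
    match = KPI_PATTERN.search(sentence)
    if match is None:
        return None
    sign = 1 if match["direction"] == "increased" else -1
    return {
        "kpi": match["kpi"],
        "change_pct": sign * float(match["change"]),
        "value_usd": float(match["value"]) * UNIT_SCALE[match["unit"]],
        # The regex cannot tell which adjustments apply; it can only flag them.
        "has_adjustment": "excluding" in sentence.lower(),
    }


sentence = (
    "Our EBITDA, excluding one-time charges related to our strategic realignment "
    "initiative, increased by 3.2% YoY to $847.3M"
)
print(extract_kpi(sentence))
```

Deciding whether this adjusted EBITDA should be filed under the same KPI category as the headline figure is exactly the part a pattern cannot do.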

So Enterprise Bench is varied and covers many important enterprise-level tasks. It sounds like a great way to bridge the gap to real enterprise applications, so why hasn’t it caught on? Enterprise Bench has been integrated into the broader HELM ecosystem, with IBM contributing the scenarios to HELM for future use. I won’t quote the evaluation tables from Zhang et al. (2024) because they are hopelessly outdated now, nine months after the paper’s release. But I couldn’t even find a public leaderboard for Enterprise Bench, let alone any kind of viral sensation in the AI community (we’ll get to those later).

There are several straightforward reasons. First, the “public dataset paradox”: enterprise datasets, though potentially useful as benchmarks, often face accessibility or regulatory issues. Research has to rely on publicly available datasets, which is a bit like training for a Formula 1 race using go-karts. Real enterprise data is often proprietary, sensitive, and wrapped in tight NDAs.

Second, there are some deficiencies in Zhang et al.’s methodology: for example, the same prompts are used for evaluating all models and scenarios. This one-size-fits-all approach is acknowledged as a limitation by the authors themselves, and of course, it would be natural to tailor prompts to different use cases. It's like giving everyone the same interview questions regardless of whether they're applying to be a CEO or a barista. Some have pointed out that competing benchmarks in the financial domain, like FinBen (Xie et al., 2024), have a separate subset of Chinese language tasks, and Enterprise Bench's English-only approach may be a weakness in our increasingly globalized economy.

But these shortcomings are far from unfixable. There seems to be a clear gap in benchmarks that reflect actual real-life job assignments: not creative assignments for a professional mathematician, but routine white-collar work that every one of us would love to automate away.

Acknowledging this, in the second half of this post I want to pivot to a different example. Perhaps the most revealing window into AI's jagged capabilities came from an unexpected source: a failed attempt to prove that reasoning models can't truly think. This is the tale of an experiment that was intended to prove that modern large reasoning models can never achieve AGI and to highlight just how jagged the frontier actually is. Let’s see how it turned out.

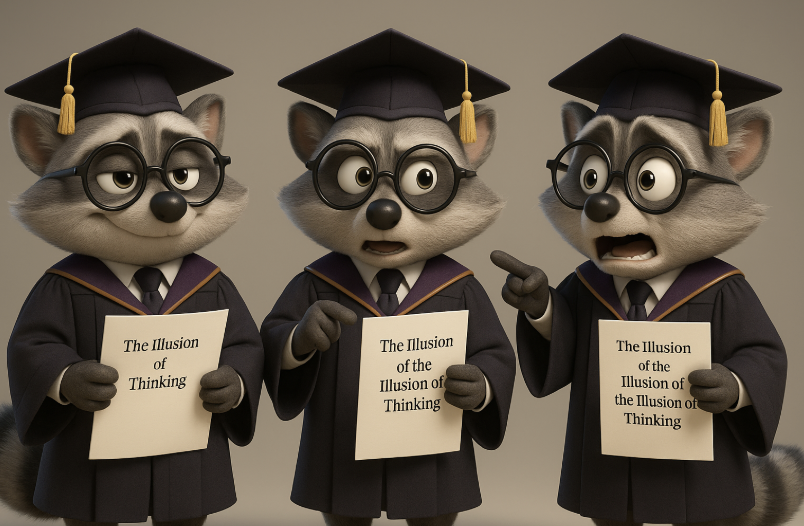

The Illusion of The Illusion of The Illusion of Thinking

This is not a quirky title that I have just invented to highlight a recent controversy. It is the actual title of an arXiv preprint (Pro, Dantas, June 26, 2025) from a debate that gripped the AI community in June 2025. What started as Apple's critique in a serious research paper has spawned a cascade of increasingly baroque rebuttals, counter-rebuttals, and what might be the first AI-co-authored response paper to go viral, though, as we'll see, this also comes with a rather large asterisk.

The controversy began in early June, when Apple researchers Shojaee et al. (June 7, 2025) dropped what many considered a bombshell. Their paper, titled "The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity," presented evidence that large reasoning models (LRMs) face a "complete accuracy collapse" beyond certain complexity thresholds. The title alone was provocative enough: here was Apple—a company not particularly known for deflating AI hype—essentially arguing that the emperor of reasoning models had no clothes.

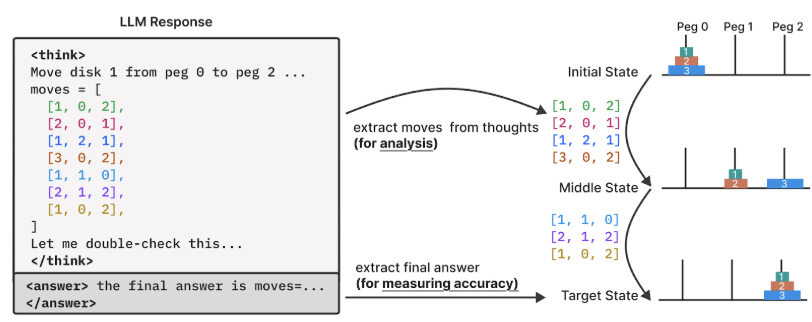

Their methodology was to discard the standard mathematical benchmarks (which, the authors argue, often suffer from data contamination) and turn to classic computer science puzzles: Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World. For example, in the Tower of Hanoi puzzle, the LLM was asked to produce a sequence of moves that solves the problem for a given number of disks:

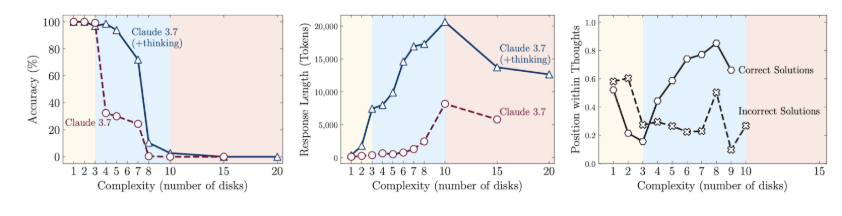

These puzzles allow the researchers to scale complexity systematically while maintaining consistent logical structures. The experiments revealed three distinct performance regimes: (1) low-complexity tasks, where standard models (surprisingly) outperformed LRMs; (2) medium-complexity tasks, where additional thinking in LRMs does bring significant advantages; and (3) high-complexity tasks, where both kinds of models experience complete collapse.

Shojaee et al. also observed a counterintuitive scaling limit: reasoning effort increases with problem complexity up to a point, then declines despite an adequate token budget. It was as if the models were giving up earlier rather than struggling harder with more difficult problems. Their analysis also shows how Claude 3.7 tends to fixate on an early wrong answer for the more difficult cases.

Overall, the paper concluded that current LRMs do not have generalizable reasoning capabilities beyond a certain complexity threshold, and there is likely an “inherent compute scaling limit” in reasoning models. Moreover, giving the models an algorithm in the prompt also didn’t help: if you give a reasoning LLM the actual algorithm for solving the Tower of Hanoi, so that it only needs to trace the steps, it will fail to do so at about the same problem complexity!

All of this sounds like quite a counterexample to the might of current LRMs. The paper immediately circulated widely in the machine learning community, and many readers' initial reaction was to declare that Apple had effectively disproven much of the hype around this class of AI. Some saw it as vindication of long-held skepticism about LLM reasoning capabilities.

But in fact, the methodology of Shojaee et al. (2025) was extremely strange. Many commenters pointed this out, but let me concentrate on the second step of the meta-ladder: the paper titled "The Illusion of the Illusion of Thinking" (Opus, Lawsen, June 10, 2025). The authors were listed as 'C. Opus, Anthropic' and 'A. Lawsen, Open Philanthropy'; the first, positioned as lead author, refers to Claude Opus, which is itself an LRM. Yes, this is the world we live in: an AI system is listed as the lead author of a paper critiquing research about AI systems' inability to think. This authorship probably broke some rules, so the current version of the paper on arXiv lists only Alex Lawsen, but you can always access v1.

Opus and Lawsen argued that Apple's findings reflect experimental design limitations rather than fundamental reasoning failures. They identified three critical issues.

First, the Tower of Hanoi experiments systematically exceeded model output token limits at the reported failure points, with models explicitly acknowledging these constraints in their outputs! Solving a 15-disk Tower of Hanoi puzzle takes 32,767 moves. Models were hitting their token ceilings and explicitly stating things like “The pattern continues, but I'll stop here to save tokens”, and yet the automated evaluation framework marked these as failures. It’s like giving middle school students an exam that asks them to multiply two 1000-digit numbers on a single sheet of paper: sure, they will fail, but not for lack of understanding.
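The arithmetic behind this objection is simple: the optimal solution for n disks has 2^n - 1 moves, so the required output doubles with every added disk. The tokens-per-move figure below is an assumption for illustration only; the real number depends on the output format and the tokenizer, and should be compared against the output limit of whichever model is being tested.

```python
ASSUMED_TOKENS_PER_MOVE = 10  # hypothetical; varies with output format and tokenizer


def min_moves(disks: int) -> int:
    """Length of the optimal Tower of Hanoi solution: 2**n - 1 moves."""
    return 2 ** disks - 1


for disks in (5, 10, 12, 15):
    moves = min_moves(disks)
    tokens = moves * ASSUMED_TOKENS_PER_MOVE
    print(f"{disks:2d} disks: {moves:6,d} moves, roughly {tokens:8,d} output tokens")
```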

Second, and perhaps even more embarrassingly, the River Crossing benchmarks included mathematically impossible instances for N ≥ 6 due to insufficient boat capacity. As Opus and Lawsen put it, this was “equivalent to penalizing a SAT solver for returning 'unsatisfiable' on an unsatisfiable formula”.
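Solvability claims like this can be checked by brute force. The sketch below runs a breadth-first search over the actors-and-agents puzzle, modeled as the classic jealous-husbands problem: an actor may not be in the presence of another agent unless their own agent is present, on either bank or in the boat, and the boat cannot cross empty. These rule details are my assumptions about the setup. Under them, the search reports the textbook 11 crossings for three pairs with a two-person boat, no solution for four pairs with that boat, a solution for five pairs with a three-person boat, and no solution for six.

```python
from collections import deque
from itertools import combinations
from typing import Optional


def is_safe(group: int, n: int) -> bool:
    """Bitmask of people: actors are bits 0..n-1, and actor i's agent is bit n + i.
    A group is unsafe if some agent is present while an actor's own agent is absent."""
    actors = group & ((1 << n) - 1)
    agents = group >> n
    return agents == 0 or (actors & ~agents) == 0


def min_crossings(n: int, boat: int) -> Optional[int]:
    """Fewest one-way crossings that move n actor/agent pairs across, or None if impossible."""
    everyone = (1 << (2 * n)) - 1
    safe = [is_safe(mask, n) for mask in range(everyone + 1)]
    # Every non-empty boat load (up to `boat` people) that is safe on its own.
    all_loads = (
        sum(1 << person for person in combo)
        for size in range(1, boat + 1)
        for combo in combinations(range(2 * n), size)
    )
    loads = [load for load in all_loads if safe[load]]
    start = (everyone, 0)  # (bitmask of people on the left bank, boat side: 0 = left, 1 = right)
    dist = {start: 0}
    queue = deque([start])
    while queue:
        left, side = queue.popleft()
        if left == 0:
            return dist[(left, side)]
        bank = left if side == 0 else everyone & ~left  # people standing by the boat
        for load in loads:
            if (load & bank) != load:
                continue  # someone in this load is on the other bank
            new_left = (left & ~load) if side == 0 else (left | load)
            if not (safe[new_left] and safe[everyone & ~new_left]):
                continue  # a bank would violate the rule after the crossing
            state = (new_left, 1 - side)
            if state not in dist:
                dist[state] = dist[(left, side)] + 1
                queue.append(state)
    return None


for n, boat in [(3, 2), (4, 2), (5, 3), (6, 3)]:
    print(f"N={n}, boat={boat}: {min_crossings(n, boat)}")
```

An exhaustive search like this takes a fraction of a second, which is a cheap sanity check to run before any instance goes into a benchmark.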

Third, when the authors tested an alternative approach—requesting generating functions instead of exhaustive move lists—preliminary experiments across multiple models indicated high accuracy on Tower of Hanoi instances previously reported as complete failures. In other words, when you ask the models to demonstrate understanding rather than brute-force enumeration, they perform just fine; of course Claude Opus and o3 know the algorithm for solving the Tower of Hanoi (which is, in my opinion, why giving them the algorithm doesn’t help).
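The compressed representation the rebuttal asked for really is tiny. Below is the standard recursive solution, written in Python purely for illustration (this is not the prompt or code from the rebuttal): a few lines that generate all 32,767 moves for 15 disks on demand.

```python
from typing import Iterator, Tuple

Move = Tuple[int, str, str]  # (disk number, from peg, to peg); disk 1 is the smallest


def hanoi(disks: int, source: str = "A", target: str = "C", spare: str = "B") -> Iterator[Move]:
    """Yield the optimal move sequence for moving `disks` disks from source to target."""
    if disks == 0:
        return
    yield from hanoi(disks - 1, source, spare, target)  # clear the smaller disks out of the way
    yield (disks, source, target)                        # move the largest disk
    yield from hanoi(disks - 1, spare, target, source)   # restack the smaller disks on top


print(list(hanoi(2)))  # [(1, 'A', 'B'), (2, 'A', 'C'), (1, 'B', 'C')]
print(sum(1 for _ in hanoi(15)))  # 32767
```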

The AI community's reaction to this AI-authored rebuttal was complicated. Many, myself included, tend to agree with its points. Some sided with the original paper and critiqued the critique. And indeed, the Opus and Lawsen text contained some rather obvious mistakes (see, e.g., here). Moreover, Alex Lawsen later wrote a post titled “When Your Joke Paper Goes Viral”, where he revealed that the whole thing began as something of a lark: "I thought it would be funny to write a response paper 'co-authored' with Claude Opus. So I wrote down the problems I'd noticed, gave them to Claude, went back and forth a bit, did a couple of quick experiments... threw together a PDF and shared it in a private Slack with some friends". It was never intended to be more than a joke saying that “even Claude can find serious issues with your paper”.

But whenever people tried to defend the original paper rather than find flaws in Opus and Lawsen (2025), they failed. Take Gary Marcus’s post, which lists several arguments against the rebuttals… but fails to address their actual content, and also mixes in strawman arguments like “the paper’s lead author was an intern”. To me, the Apple paper clearly looks deeply flawed, but Marcus keeps doubling down, treating its critique almost as a general counterargument against LLMs ever achieving AGI. He even published an opinion piece in The Guardian titled “When billion-dollar AIs break down over puzzles a child can do, it’s time to rethink the hype”. Which is, of course, intended to generate a mini-hype wave of its own, in the opposite direction.

But still, there’s more. On June 16, a third paper emerged, "The Illusion of the Illusion of the Illusion of Thinking", this time co-authored by G. Pro and V. Dantas. At this point, one begins to wonder if we're witnessing the birth of a new academic tradition where each critique spawns a meta-critique, enabled by LLMs, until we achieve some sort of scholarly singularity.

This tertiary analysis argued that while Opus and Lawsen correctly identify critical methodological flaws that invalidate the most severe claims of the original paper, their own counter-evidence and conclusions may oversimplify the nature of model limitations. Pro and Dantas took a more nuanced view: "By shifting the evaluation from sequential execution to algorithmic generation, their work illuminates a different, albeit important, capability". Yes, the models can generate a recursive function for the Tower of Hanoi—but this demonstrates an ability to retrieve and represent a compressed, abstract solution. It does not, however, prove that the model could successfully execute or trace the 32,767 moves required for N = 15 without error if given an unlimited token budget. It's the difference between knowing the recipe and actually baking the cake.
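The "baking" half is what a grader, or a model asked for the full list, has to get right move by move. A minimal tracer is sketched below, assuming moves are given as `(disk, from_peg, to_peg)` tuples with pegs named A, B, and C; it replays the list and rejects it at the first illegal move.

```python
from typing import Iterable, Tuple

Move = Tuple[int, str, str]  # (disk number, from peg, to peg); disk 1 is the smallest


def is_valid_solution(disks: int, moves: Iterable[Move]) -> bool:
    """Replay moves from the start position; True only if every move is legal
    and all disks end up on peg C."""
    pegs = {"A": list(range(disks, 0, -1)), "B": [], "C": []}  # each list runs bottom -> top
    for disk, src, dst in moves:
        if src not in pegs or dst not in pegs or src == dst:
            return False
        if not pegs[src] or pegs[src][-1] != disk:
            return False  # the named disk is not on top of the source peg
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk may not go on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(disks, 0, -1))


good = [(1, "A", "B"), (2, "A", "C"), (1, "B", "C")]
bad = [(1, "A", "C"), (2, "A", "C"), (1, "C", "B")]
print(is_valid_solution(2, good), is_valid_solution(2, bad))  # True False
```

A single wrong move anywhere in a 32,767-move list fails the whole answer, which is exactly why sequential execution is the harder test.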

So sure, Shojaee et al. (2025) does have irrecoverable flaws. Our evaluation methods need to be as sophisticated as the systems we're trying to evaluate. And perhaps, just perhaps, we should check whether our test puzzles are actually solvable before declaring that AI can't solve them. But does this work still point at some real limitations that tell us something new about modern AI systems? Are there real lessons to be learned here?

For a much deeper discussion, let me recommend this post by Lawrence Chan. He puts the whole thing in the context of longstanding discussions on the limits of AI systems, and neural networks specifically, from Minsky and Papert’s book criticizing perceptrons to arguments from computational complexity (Chiang et al., 2023), the ARC-AGI dataset, and a much simpler argument in the vein of Shojaee et al. (2025) that LLMs cannot multiply 15-digit numbers. He then explains why some specific critiques are wrong and the rest are not novel.

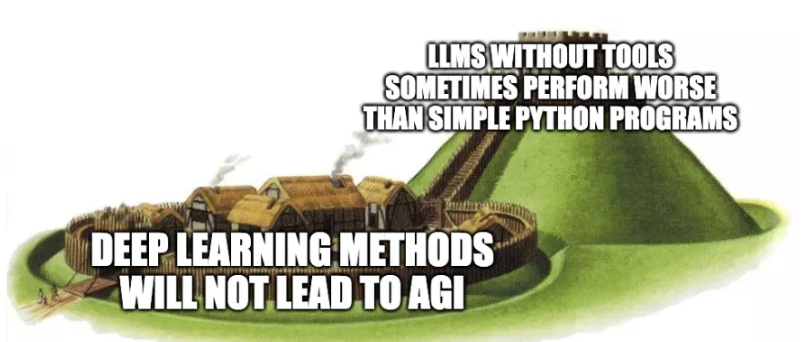

And yes, he even made a meme, of the motte-and-bailey variety, to explain why (Gary Marcus’s interpretation of) Apple’s paper is wrong.

So overall, while I wanted to cover Shojaee et al. (2025) because of all the recent hype, ultimately it is not a good example of the jagged frontier of AI. LLMs certainly can solve the Tower of Hanoi, in exactly the same way as a competent human would: by writing a script to produce the solutions for any n that your computational budget will allow. This has not been a failure of LRMs, even though some people desperately want it to look like one.

Towards Smoother Intelligence: What Can We Do?

In this post, we have seen three revealing snapshots of AI's jagged frontier. SIMPLE showed us that our most advanced models can fail spectacularly on puzzles that wouldn't stump a middle schooler. Enterprise Bench highlighted the gulf between academic benchmarks and real-world reliability. And the Apple paper controversy—complete with its AI-authored rebuttal—demonstrated that even our methods for measuring AI capabilities can themselves be deeply flawed.

AI systems are simultaneously more capable and more limited than popular narratives suggest. They are not marching uniformly toward AGI, as some evangelists promise, nor are they the mere pattern-matchers that skeptics dismiss them as. They are systems with genuine but very unevenly distributed competencies.

This jaggedness is not just a temporary bug to be patched in the next model release. It appears to be a fundamental characteristic of how current AI systems learn and represent knowledge. Perhaps this is an effect well known to historians: people rarely write about what is commonplace, so the most mundane details are often the hardest to reconstruct. Similarly, when a model trains on internet-scale data, it develops deep grooves of competence in well-represented domains while leaving vast territories of “obvious” reasoning barely touched.

So how can we adapt? I can outline a few best practices that may alleviate the jaggedness in practice, although fully solving it still remains an open research problem:

- benchmark before shipping: include SIMPLE or other jagged-style benchmarks in internal evaluation suites, and go back to prompt engineering, example-based prompting, or tool augmentation if the models fail them;

- adapt with retrieval: simple puzzles usually need context-binding, so adding RAG to ground each answer in explicit, relevant data (e.g., retrieving rulebooks or FAQ content) can often reduce hallucination and reliance on pattern-matching;

- delegate to tools: instead of asking the model to reason, you can often delegate reasoning to system tools, e.g., counting constraints or step-by-step validator scripts triggered via function calling;

- compare results in an ensemble: use multiple reasoning methods and output the result only if consensus is high; when disagreements appear, trigger fallback modes;

- validate outputs: write programmatic checks to validate outputs such as consistency checks or constraint verifiers.
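The last two practices combine naturally. Below is a minimal sketch, assuming the candidate answers come from several hypothetical model calls or prompting strategies (not shown) and that a programmatic validator can reject malformed outputs; the complaint-routing queue names and the 75% agreement threshold are illustrative assumptions. It returns `None` when consensus is too weak, which is the signal to fall back to a human or a safer path.

```python
from collections import Counter
from typing import Callable, Optional, Sequence


def consensus_answer(
    candidates: Sequence[str],
    validator: Callable[[str], bool],
    min_agreement: float = 0.75,
) -> Optional[str]:
    """Majority vote over validated candidates; None means 'trigger the fallback'."""
    valid = [c for c in candidates if validator(c)]
    if not valid:
        return None
    answer, votes = Counter(valid).most_common(1)[0]
    # Agreement is measured against all candidates, so invalid outputs count against consensus.
    if votes / len(candidates) < min_agreement:
        return None
    return answer


ALLOWED_QUEUES = {"billing", "technical_support", "account_management"}  # hypothetical


def is_known_queue(label: str) -> bool:
    return label in ALLOWED_QUEUES


print(consensus_answer(["billing", "billing", "billing", "technical_support"], is_known_queue))  # billing
print(consensus_answer(["billing", "refunds", "billing", "technical_support"], is_known_queue))  # None
```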

Jaggedness is not just a quirk, a jailbreak, or a cute harmless example. It shows that the edge between “brilliant AI” and “brittle liability” is very thin in practice. By focusing early on SIMPLE-style testing, combined with prompt hygiene, retrieval, tool orchestration, and output validation, we can start turning intelligent but jagged models into tools reliable enough for the workplace.

I find it telling that AI researchers haven’t taken the time to fill in these gaps and make new benchmarks that would be more faithful to real life enterprise problems. It certainly doesn’t sound like an impossible task, and it lies straight in the middle of the path towards “eliminating half of all entry-level white-collar jobs”, as Dario Amodei recently predicted.

As Terence Tao suggested in the opening quote, perhaps what these systems lack is something like "mathematical smell"—an intuitive sense for what matters, what's reasonable, what's worth pursuing. But maybe that's the wrong metaphor. Maybe what they lack isn't a missing sense but a different kind of architecture altogether, one that builds understanding from the ground up rather than pattern-matching from the top down. Until we figure that out, we face a practical challenge: how to use these brilliant but brittle systems without letting their brittleness break things that matter.

Welcome to the age of jagged intelligence. Bring a good map.
