Today, we explore three promising architectural innovations that may take AI beyond the limitations of Transformers: diffusion-based LLMs that generate text in parallel for greater speed and control, Mamba's state space models that efficiently handle million-token contexts without quadratic scaling, and Titans with explicit memory that can learn new information at inference time. While Transformers remain dominant today, these emerging architectures address fundamental constraints around context length, generation speed, and memory persistence. This can translate directly to business advantages: reduced latency, lower compute costs, and AI systems capable of deeper reasoning across broader contexts.

Introduction

In the world of AI, Transformers have become the undisputed champions, the workhorses powering everything from chatbots to code assistants to your company's newest AI initiative. Since their introduction in 2017, these architectures have scaled to breathtaking heights, from GPT-4 to Claude to Gemini to reasoning models such as o1 pro and DeepSeek-R1, reshaping entire industries along the way.

But what if I told you we are just getting started?

The Transformer architecture, revolutionary as it is, comes with inherent limitations. Its infamous quadratic scaling with context length means that processing book-length texts remains computationally expensive. Its lack of persistent memory forces it to squeeze all knowledge into fixed parameters. And its rigid sequential generation restricts both speed and flexibility.

These are not just academic concerns—they translate directly to business limitations: higher compute costs, slower inference times, and constrained capabilities. The language models we rely on today are remarkable, but they are far from the final word in AI architecture.

In this post, we will try to go beyond the Transformer paradigm to explore three promising architectural innovations that could power the next generation of language models: diffusion-based LLMs that generate text in parallel rather than sequentially, Mamba's state space models that efficiently process million-token contexts, and Titans with explicit memory that can learn new information on the fly.

These are all exciting new research directions, and many kinks remain to be ironed out. But they are not just academically interesting: they represent potential competitive advantages for companies that understand and adopt them early. Let's dive in!

Diffusion-Based LLMs

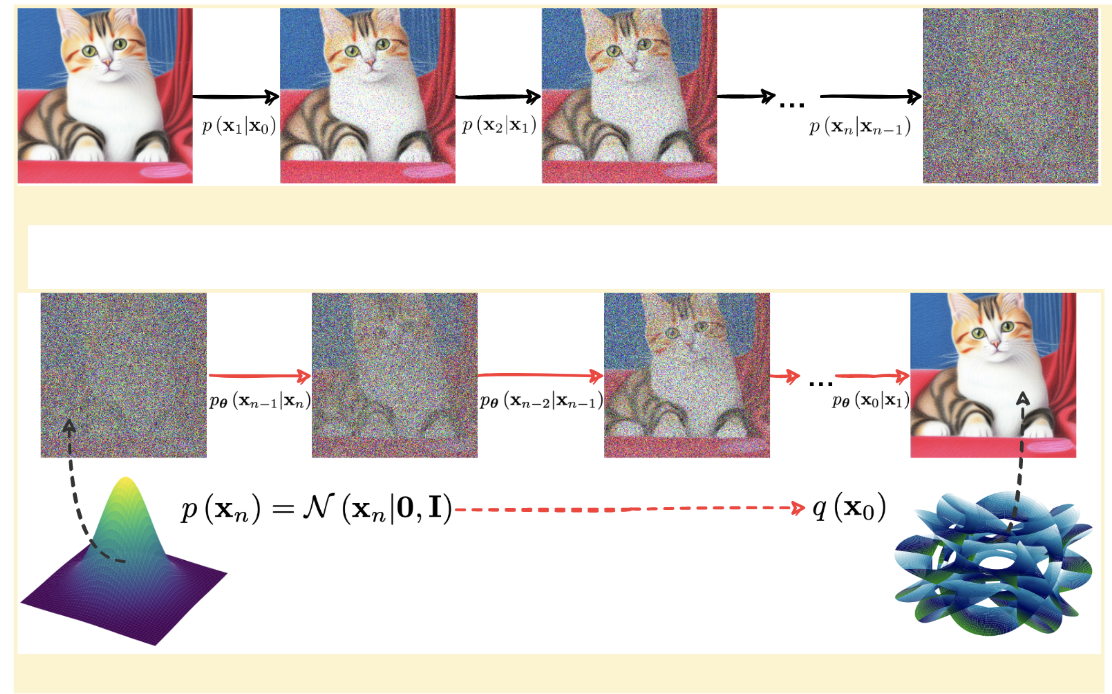

You have no doubt heard about diffusion-based models for image generation: Stable Diffusion, FLUX, later versions of DALL-E, and Midjourney are all diffusion-based. They create images by learning a denoising transformation, so at inference time the model starts with random noise and gradually denoises it into a picture (image source).

Now imagine the same, but for text: a model writing an email by refining an initial jumble of random words! It sounds strange, but this is indeed the idea at the heart of diffusion-based large language models (dLLMs), a class of models that has been around since 2022 (Li et al., 2022) but made the news again in February 2025 with LLaDA (Nie et al., 2025).

Traditional large language models, such as GPT, generate text autoregressively, that is, sequentially, token by token. This approach has been with us since the very first Markov chain language model published by A.A. Markov himself in 1913, and it has carried us through to the current crop of reasoning models. While effective (and, with the help of KV-caching, even efficient), autoregressive generation has limitations—it moves strictly forward, without revisiting or revising earlier choices. In contrast, diffusion models start with an initial sequence filled with noise or masked tokens and iteratively refine the entire text into coherent output. I would poetically compare it to an artist who sketches a rough outline first and then adds details across the canvas—but as you have already seen above, the “rough outline” for both images and text is, in fact, random noise.

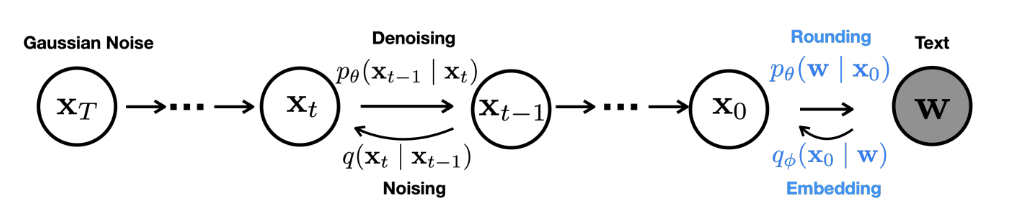
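The token-by-token loop of autoregressive generation can be illustrated with a toy bigram (first-order Markov chain) model. This is a minimal sketch, not how modern LLMs are implemented; the tiny corpus and the `train_bigram` and `generate` helpers are hypothetical. Note that each emitted token is final: nothing in the loop ever goes back to revise it.

```python
import random
from collections import defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (a first-order Markov chain)."""
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, length, rng):
    """Autoregressive sampling: each new token is drawn given the previous one,
    appended to the output, and never revised afterwards."""
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:  # no observed successor: stop early
            break
        words = list(followers)
        weights = [followers[w] for w in words]
        out.append(rng.choices(words, weights=weights)[0])
    return out


corpus = "the cat sat on the mat and the dog sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

A Transformer replaces the bigram table with a neural network conditioned on the whole prefix, but the outer loop is the same: one new token per forward pass.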

Let us consider two works in more detail. Diffusion-LM (Li et al., 2022), the first diffusion-based LLM, implemented this idea in a rather straightforward way: Gaussian noise is gradually transformed into a sequence of word embeddings, with a “rounding” step at the end to assign actual tokens. The illustration is very similar to standard diffusion models:

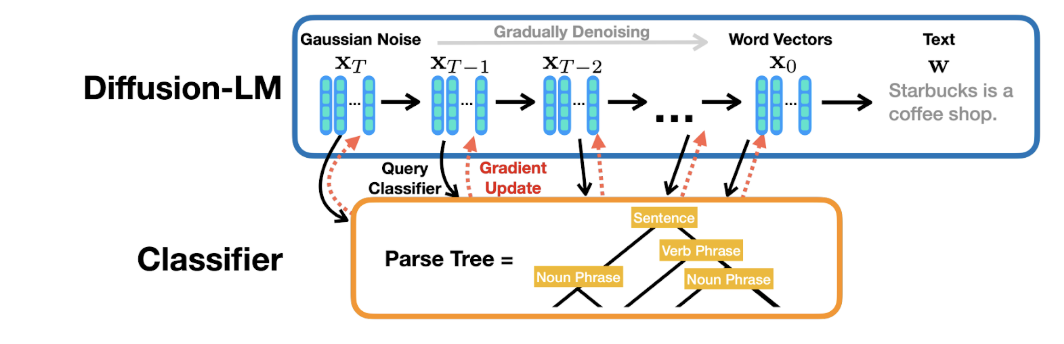
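A minimal sketch of the two Diffusion-LM pieces just described, assuming the standard Gaussian forward process x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε and nearest-neighbour rounding. The 2-D embeddings and the vocabulary are hypothetical, and the learned reverse (denoising) network is omitted entirely.

```python
import math
import random

# Hypothetical 2-D embeddings for a toy vocabulary (Diffusion-LM learns these jointly).
EMB = {"good": [1.0, 0.0], "bad": [-1.0, 0.0], "movie": [0.0, 1.0], "plot": [0.0, -1.0]}


def add_noise(x0, alpha_bar, rng):
    """Forward process q(x_t | x_0): keep sqrt(alpha_bar) of the signal and add Gaussian
    noise with variance 1 - alpha_bar. alpha_bar = 1 means no noise, 0 means pure noise."""
    return [math.sqrt(alpha_bar) * v + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0) for v in x0]


def round_to_token(x):
    """Rounding step: map a continuous vector to the token whose embedding is nearest."""
    return min(EMB, key=lambda w: sum((a - b) ** 2 for a, b in zip(x, EMB[w])))


rng = random.Random(0)
sentence = ["good", "movie"]
noisy = [add_noise(EMB[w], 0.99, rng) for w in sentence]  # lightly noised embeddings
print([round_to_token(x) for x in noisy])
```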

The interesting part here is that the gradual denoising can be quite successfully controlled, with external conditions—such as a specific sentiment for text or its grammatical structure—guiding the denoising process with gradient-based updates:

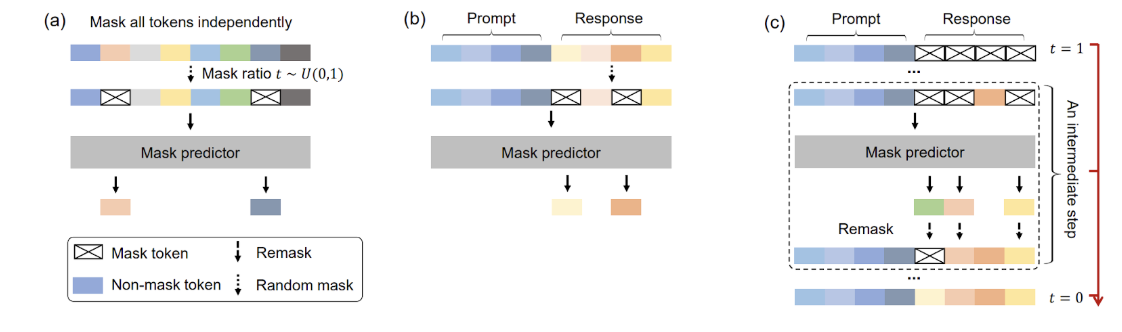
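The gradient-based control can be sketched as follows, under simplifying assumptions: a toy linear attribute classifier p(attr | x) = sigmoid(w · x) stands in for a trained classifier, and a quadratic penalty for straying from the denoiser's proposal stands in for the diffusion model's own Gaussian log-likelihood. Diffusion-LM applies updates of this general kind to the latent vectors at each denoising step; the function name, weights, and hyperparameters here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def guided_update(x, proposal, w, lam=1.0, lr=0.1, steps=3):
    """Gradient ascent on  lam * fluency(x) + log p(attr | x), where
      fluency(x)  = -0.5 * ||x - proposal||^2   (stay close to the denoiser's proposal)
      p(attr | x) = sigmoid(w . x)               (toy linear attribute classifier)
    d/dx fluency = proposal - x;  d/dx log sigmoid(w . x) = (1 - sigmoid(w . x)) * w.
    """
    x = list(x)
    for _ in range(steps):
        p_attr = sigmoid(dot(w, x))
        grad = [lam * (p - xi) + (1.0 - p_attr) * wi for xi, p, wi in zip(x, proposal, w)]
        x = [xi + lr * g for xi, g in zip(x, grad)]
    return x


w = [1.0, 0.0]          # hypothetical "positive sentiment" direction in latent space
proposal = [0.0, 1.0]   # hypothetical denoiser output for one word position
steered = guided_update(proposal, proposal, w)
print(sigmoid(dot(w, proposal)), sigmoid(dot(w, steered)))
```

Raising `lam` keeps the vector closer to what the denoiser proposed (fluency); lowering it lets the classifier pull harder (control). No model weights change, which is why this sits between prompting and fine-tuning.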

In contrast, LLaDA (Large Language Diffusion Models; Nie et al., 2025) introduces a masked diffusion model (MDM) designed specifically for text. Instead of adding noise in a continuous space, LLaDA progressively masks tokens in the forward process and then uses a mask predictor to recover them in the reverse process:

This discrete masking approach avoids the rounding issues inherent in continuous diffusion and more naturally aligns with the discrete nature of language. There are other important differences too:

- LLaDA has been scaled to 8B parameters and pretrained on trillions of tokens, and its performance shows that diffusion-based language models can rival, and on some tasks even outperform, autoregressive models of similar size such as LLaMA 3 8B;

- the bidirectional masked formulation supports better in-context learning and reversal reasoning, and as a result LLaDA overcomes the “reversal curse” (Berglund et al., 2023), where it is hard for standard LLMs to infer “B is A” after being trained on “A is B”.
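LLaDA's forward masking and reverse unmasking can be sketched in a few lines. The sketch assumes a hypothetical `toy_predictor` in place of LLaDA's Transformer mask predictor, and at each step it commits the most confident predictions while leaving the rest masked, in the spirit of the low-confidence remasking strategy described by Nie et al.; the schedule (an equal share of the remaining masks per step) is an illustrative choice.

```python
import random

MASK = "[M]"


def forward_mask(tokens, t, rng):
    """Forward process: mask each token independently with probability t (0 <= t <= 1)."""
    return [MASK if rng.random() < t else tok for tok in tokens]


def reverse_sample(predict, length, steps):
    """Reverse process: start fully masked; at every step predict all masked positions
    in parallel, commit the most confident guesses, and leave the rest masked."""
    seq = [MASK] * length
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        guesses = predict(seq)  # {position: (token, confidence)} for masked positions
        n_commit = max(1, len(masked) // (steps - step))  # the last step commits everything left
        best = sorted(masked, key=lambda i: guesses[i][1], reverse=True)[:n_commit]
        for i in best:
            seq[i] = guesses[i][0]
    return seq


TARGET = "diffusion models refine the whole text in parallel".split()


def toy_predictor(seq):
    """Hypothetical stand-in for the mask predictor: it 'knows' TARGET and is more
    confident about positions whose neighbours have already been revealed."""
    guesses = {}
    for i, tok in enumerate(seq):
        if tok == MASK:
            revealed = sum(1 for j in (i - 1, i + 1) if 0 <= j < len(seq) and seq[j] != MASK)
            guesses[i] = (TARGET[i], 0.5 + 0.25 * revealed)
    return guesses


print(forward_mask(TARGET, 0.5, random.Random(0)))
print(reverse_sample(toy_predictor, len(TARGET), steps=4))
```

With 8 tokens and 4 steps, the sampler calls the predictor 4 times instead of 8; this reduction in sequential network calls is where the parallel-generation speedup comes from, although each call processes the whole sequence.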

This still sounds like a purely academic exercise, but recently a real-world example appeared too: Mercury by Inception Labs is a family of diffusion-based LLMs. Mercury models, particularly Mercury Coder, boast remarkable speed and efficiency, generating text at over 1000 tokens per second on standard NVIDIA H100 GPUs with performance rivaling standard offerings such as GPT-4o mini or DeepSeek Coder V2 Lite.

So why should businesses pay attention to dLLMs? Three important reasons.

- Parallel generation: diffusion models refine entire blocks of text simultaneously, unlike autoregressive models that are constrained to token-by-token generation. This can drastically increase speed, reportedly by up to 5-10x on the same hardware, making them well suited to real-time and latency-sensitive tasks such as coding assistance and interactive customer support.

- Enhanced coherence: by working on the entire sequence concurrently, diffusion models may avoid common pitfalls of “small” LLMs, such as inconsistent or fragmented responses.

- Greater flexibility in control: diffusion models can allow for fine-grained, plug-and-play adjustments of outputs—such as style, sentiment, or topic—without extensive retraining. To me, gradient-based updates seem like a good middle ground between fine-tuning (usually too hard to do) and prompting (often too unreliable). This makes them especially valuable for personalized marketing, content moderation, dynamic storytelling, and other applications where you know what you want to get.

Naturally, there are still challenges before diffusion-based models are ready for prime time. Diffusion models are computationally intensive to train, requiring resources comparable to cutting-edge Transformers, so at present LLaDA tops out at 8B parameters, and I am not sure a 70B model will be easy to train. Also, their outputs are still more likely to come out scrambled than those of “regular” LLMs. But it is a very exciting direction with a lot of potential, and I’ll be watching it closely.

Mamba: An RNN-Inspired Way to Extend the Context

Another major line of research to move beyond vanilla Transformers focuses on equipping language models with more powerful memory mechanisms. Standard Transformers rely on self-attention to capture context, which functions as a kind of short-term memory but is limited to the context window. One of the main technical problems with the Transformer architecture is that self-attention has quadratic complexity with respect to the context size, making it difficult to scale. Moreover, standard Transformers do not have any way to persistently learn new information at inference time.

To address these issues, researchers have been developing memory-augmented architectures that integrate either recurrent layers (with better long-range efficiency) or explicit memory modules. In this post, we will discuss two important approaches.

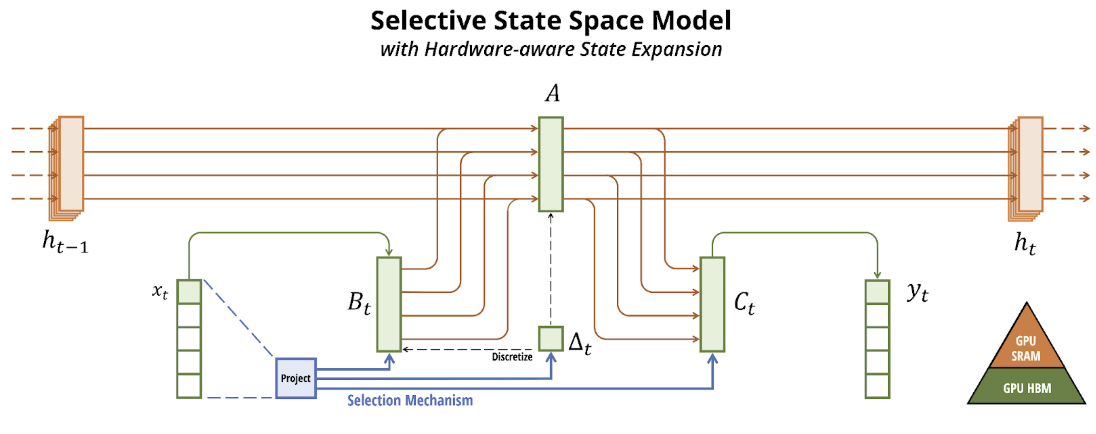

First, Structured State Space Models (SSMs; Gu et al., 2022), exemplified especially by the Mamba architecture (Gu, Dao, 2024), are a family of sequence models with roots in the control and signal processing literature. The idea is to maintain a hidden state that is updated recurrently with each new input, similar to an RNN. The hidden state is a compressed summary of past inputs, and the model tracks its evolution over time to make predictions about what comes next.
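For readers who want the basic equations: the standard continuous-time state space model, and its discretization with a step size Δ using the zero-order-hold rule adopted by Mamba, look like this. The discrete form is exactly an RNN-like recurrence on the hidden state h_t.

```latex
% Continuous-time SSM: input x(t), hidden state h(t), output y(t)
h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t)

% Zero-order-hold discretization with step size \Delta
\bar{A} = \exp(\Delta A), \qquad
\bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right)\Delta B

% Resulting recurrence, one step per token
h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t
```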

The full explanation would be too technical for this blog, but the key property is that the same SSM can be computed either step by step as a recurrence or all at once as a long convolution, and the convolution kernel can be computed very efficiently with linear algebra and FFT-based methods (see also an explanatory post by Ayonrinde, 2024).
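Because the SSM recurrence is linear, unrolling it gives y_t = Σ_k C·Ā^k·B̄·x_{t−k}: the same model is a causal convolution with kernel K_k = C·Ā^k·B̄. The sketch below, assuming a diagonal state matrix and hypothetical parameter values, checks that the recurrent and convolutional views agree. For readability it convolves directly in O(L²), whereas S4-style implementations compute the kernel efficiently and apply it with FFTs.

```python
def ssm_recurrent(a_bar, b_bar, c, xs):
    """Recurrent mode with a diagonal state matrix:
    h_t = a_bar * h_{t-1} + b_bar * x_t (elementwise),  y_t = c . h_t."""
    h = [0.0] * len(a_bar)
    ys = []
    for x in xs:
        h = [a * hi + b * x for a, hi, b in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


def ssm_kernel(a_bar, b_bar, c, length):
    """Convolution kernel K_k = C A_bar^k B_bar, written out for a diagonal A_bar."""
    return [sum(ci * a ** k * b for a, b, ci in zip(a_bar, b_bar, c)) for k in range(length)]


def ssm_convolutional(a_bar, b_bar, c, xs):
    """Convolutional mode: y_t = sum_{k=0..t} K_k * x_{t-k} (a causal convolution).
    Done directly in O(L^2) here; FFT-based methods bring this to O(L log L)."""
    K = ssm_kernel(a_bar, b_bar, c, len(xs))
    return [sum(K[k] * xs[t - k] for k in range(t + 1)) for t in range(len(xs))]


# Hypothetical discretized parameters for a 2-dimensional state, and a short input.
a_bar, b_bar, c = [0.9, 0.5], [1.0, 0.3], [0.7, -0.2]
xs = [1.0, 0.0, 2.0, -1.0, 0.5]
print(ssm_recurrent(a_bar, b_bar, c, xs))
print(ssm_convolutional(a_bar, b_bar, c, xs))
```

The convolutional view is convenient for training (the whole sequence is known in advance), and the recurrent view for generation (one cheap state update per new token).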

SSMs are closely related to an idea known as linear Transformers (Katharopoulos et al., 2020), a clever way to use the kernel trick to avoid the quadratic complexity of attention, which turns causal attention into something very similar to an RNN. Gu et al. (2022) introduced S4 (Structured State Space Sequence models), which made it much faster to compute the recurrent matrices and convolutions involved. Again, a mathematical explanation would be out of place here, but I can refer the mathematically inclined to the excellent explanation in “The Annotated S4”.
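The kernel trick behind linear Transformers can be made concrete with a small sketch. Replacing softmax(q·k) by φ(q)·φ(k), with φ(x) = elu(x) + 1 as in Katharopoulos et al., lets causal attention be computed either by comparing each query with all earlier keys or by carrying a fixed-size running state S = Σ φ(k)vᵀ and z = Σ φ(k). The toy Q, K, V values are hypothetical, and this pure-Python version only illustrates the equivalence; it is not an efficient implementation.

```python
import math


def phi(vec):
    """Feature map elu(x) + 1 from Katharopoulos et al.: strictly positive features."""
    return [x + 1.0 if x > 0 else math.exp(x) for x in vec]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def linear_attention_quadratic(Q, K, V):
    """Attention view: each position weighs all earlier values by phi(q_i) . phi(k_j); O(L^2)."""
    out = []
    for i, q in enumerate(Q):
        fq = phi(q)
        w = [dot(fq, phi(K[j])) for j in range(i + 1)]
        out.append([sum(w[j] * V[j][d] for j in range(i + 1)) / sum(w) for d in range(len(V[0]))])
    return out


def linear_attention_recurrent(Q, K, V):
    """RNN view: carry S = sum_j phi(k_j) v_j^T and z = sum_j phi(k_j); O(L) in sequence length."""
    dk, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(dk)]
    z = [0.0] * dk
    out = []
    for q, k, v in zip(Q, K, V):
        fq, fk = phi(q), phi(k)
        for a in range(dk):
            z[a] += fk[a]
            for d in range(dv):
                S[a][d] += fk[a] * v[d]
        denom = dot(fq, z)
        out.append([sum(fq[a] * S[a][d] for a in range(dk)) / denom for d in range(dv)])
    return out


# Hypothetical queries, keys, and values for a 3-token sequence (d_k = d_v = 2).
Q = [[0.1, -0.2], [0.5, 0.3], [-0.4, 0.8]]
K = [[0.2, 0.1], [-0.3, 0.6], [0.9, -0.5]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(linear_attention_quadratic(Q, K, V))
print(linear_attention_recurrent(Q, K, V))
```

The two functions agree because matrix products are associative: Σ_j (φ(q)·φ(k_j)) v_j can be regrouped as φ(q)ᵀ(Σ_j φ(k_j)v_jᵀ), and the bracketed sum is a state that can be updated one token at a time.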

Finally, the Mamba architecture (Gu, Dao, 2024) was another breakthrough in that direction that allowed SSMs to finally rival Transformers in language-related tasks. The key innovation is a selection mechanism that makes the state update input-dependent, allowing the model to selectively propagate or forget information based on the current token (instead of fixed, time-invariant transition matrices in S4 and earlier models). This means that the recurrent mechanism can dynamically focus on salient tokens (important words) and compress away irrelevant information.
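A toy, single-channel sketch of the selection idea: the step size Δ_t is chosen per token (passed in here as hypothetical `delta_logits`, standing in for a learned projection of each input x_t), so Ā_t = exp(Δ_t·A) can be close to 1 (keep the state) or close to 0 (overwrite it). The sketch uses the simplified input discretization B̄_t = Δ_t·B and keeps B and C fixed, whereas Mamba also makes B and C input-dependent and runs many channels with a hardware-aware parallel scan.

```python
import math


def softplus(z):
    return math.log1p(math.exp(z))


def selective_scan(xs, delta_logits, A=-1.0, B=1.0, C=1.0):
    """Toy single-channel selective SSM.

    For each token, delta_t = softplus(delta_logit_t) and
      A_bar_t = exp(delta_t * A)  ~ 1 for small delta (keep the state), ~ 0 for large delta (forget)
      B_bar_t = delta_t * B       (simplified discretization of the input term)
      h_t = A_bar_t * h_{t-1} + B_bar_t * x_t,   y_t = C * h_t
    """
    h, ys = 0.0, []
    for x, logit in zip(xs, delta_logits):
        delta = softplus(logit)
        h = math.exp(delta * A) * h + delta * B * x
        ys.append(C * h)
    return ys


# Hypothetical per-token logits: write token 0, effectively ignore tokens 1-2
# (tiny delta keeps the state), then overwrite the state at token 3 (large delta).
xs = [5.0, 3.0, 3.0, 2.0]
delta_logits = [0.0, -10.0, -10.0, 10.0]
print(selective_scan(xs, delta_logits))
```

In S4 the equivalent of Δ is fixed, so every token is blended into the state the same way; making it input-dependent is what lets the model decide what to remember.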

Mamba’s architecture is attention-free, so it eliminates the quadratic attention mechanism entirely. Instead, it uses stacked “SSM layers” as the backbone for a Transformer-sized network. Interestingly, it also discards the separate feed-forward MLP blocks of the Transformer: in essence, Mamba replaces the standard Transformer block (self-attention + MLP) with a single homogeneous block built around a selective state space layer. Mamba’s performance keeps improving on real data with sequences up to 1 million tokens long, far beyond what vanilla Transformers can handle, and according to its authors it achieves about 5x higher inference throughput than a Transformer of similar size without sacrificing quality. The authors also report that Mamba’s accuracy scales with model size and training compute much like that of Transformers.
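A back-of-the-envelope calculation shows why a fixed-size recurrent state matters at inference time. The configuration below is hypothetical (32 layers, d_model = 4096, fp16 values, full multi-head attention without grouped-query attention, an SSM expansion factor of 2 and state size 16), and the estimate ignores model weights, activations, and Mamba's small convolution state.

```python
def kv_cache_bytes(tokens, layers, d_model, bytes_per_value=2):
    """Attention keeps a key and a value vector per past token per layer: grows with context."""
    return 2 * layers * tokens * d_model * bytes_per_value


def ssm_state_bytes(layers, d_inner, d_state, bytes_per_value=2):
    """A Mamba-style layer keeps one (d_inner x d_state) state, whatever the context length."""
    return layers * d_inner * d_state * bytes_per_value


# Hypothetical model: 32 layers, d_model = 4096, fp16, no grouped-query attention,
# SSM expansion factor 2 (d_inner = 8192) and state size 16.
for n in (4_096, 131_072, 1_000_000):
    kv_gib = kv_cache_bytes(n, 32, 4096) / 2**30
    ssm_mib = ssm_state_bytes(32, 2 * 4096, 16) / 2**20
    print(f"{n:>9,} tokens: KV cache {kv_gib:7.1f} GiB vs SSM state {ssm_mib:.0f} MiB")
```

Under these assumptions the attention cache grows from 2 GiB at 4,096 tokens to about 488 GiB at a million tokens, while the SSM state stays at 8 MiB regardless of context length.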

As soon as Mamba appeared in December 2023, an explosion of models and applications ensued. Vision Mamba (Zhu et al., 2024) became the Mamba-based counterpart to the Vision Transformer (ViT; Dosovitskiy et al., 2020), and other vision applications quickly followed: U-Mamba (Ma et al., 2024) parallels U-Net, Video Vision Mamba (ViViM; Yang et al., 2024) parallels ViViT for video processing, and so on. Mamba-2 (Dao, Gu, 2024), by the same authors, took the ideas even further with its state space duality framework. A comprehensive survey of Mamba by Qu et al., last updated in December 2024, lists 244 references, most of them papers on Mamba variations and applications released in 2024.

And, of course, no post on AI would be complete without some very recent news. On March 21, 2025, Tencent joined the chorus of reasoning models with their Hunyuan-T1 (blog post, repository). Everyone has a reasoning model these days, but what is interesting here is that Hunyuan-T1 is a “hybrid Transformer-Mamba mixture-of-experts” architecture. There is no detailed paper yet, but I would assume that some of the experts in the mix are Transformer-based and some are Mamba-based, which allows the model to draw on Mamba’s extra-long context abilities when necessary and on the Transformer’s detailed “all-to-all” attention mechanism in other cases. It is still early to say, but this may be the step that puts Mamba on the map of frontier LLMs.

Titans: Explicit Memory at Test Time

An orthogonal but related approach to making LLMs more capable of long-term reasoning is to augment Transformers with explicit memory components. Instead of replacing the entire architecture (as Mamba does), these models are still Transformers but with access to RNN-like memory mechanisms. The rationale comes from human cognition: we have distinct memory systems (short-term working memory vs. long-term memory) and we actively write important information into long-term storage for later retrieval. Transformers, by contrast, only have short-term memory via attention and whatever is encoded in their fixed weights from training. So why don’t we extend a Transformer with explicit memory that will be managed by the Transformer itself, like an LSTM cell manages its hidden state?

Many such ideas have been proposed: the Memory Transformer (Burtsev et al., 2020) and the Recurrent Memory Transformer (Bulatov et al., 2022), for example, were early attempts with very simple implementations that still worked well. But here, I want to highlight Titans, an architecture recently proposed by Google Research (Behrouz et al., 2025; submitted to arXiv on New Year’s Eve, December 31, 2024).

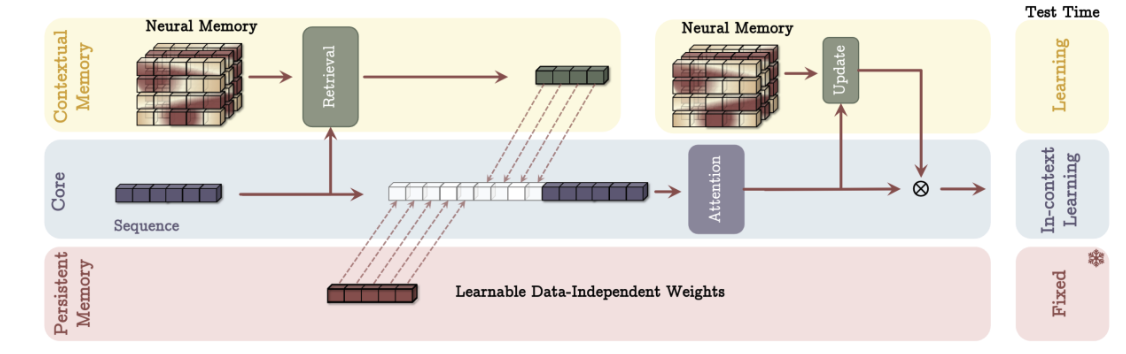

Titans combine traditional Transformer attention blocks, serving as a fast short-term memory for the current context window, with a new neural long-term memory (LTM) module that can store information beyond the current window. The model is organized into three components:

- the core module is basically a standard Transformer or other sequence encoder that processes the current segment of tokens (e.g., the last 1024 tokens);

- the long-term memory module is a differentiable memory mechanism that can read/write information from earlier segments or even from previous tasks;

- finally, persistent memory includes static learned weights of the model, an implicit memory of training data; in Titans, it is implemented as learnable, input-independent embeddings prepended to every sequence.

The three components interact as follows (this is only one of the several ways that Behrouz et al. compare in the paper, but it will do for an illustration):

As the model goes through text, it identifies “surprising” or important information that is not already captured by its weights or current memory, and stores it in the long-term memory module. In Titans, the memory is itself a small neural network trained to map keys to values computed from the input; surprise is measured by the gradient of this associative-memory loss, so inputs that the memory predicts poorly trigger larger updates. The memory module also has a gating mechanism to forget less useful information.

The most interesting part is that this memory component stays active during inference and learns to memorize on the fly. In effect, Titans implement a kind of online gradient descent with momentum that rewrites the memory to store the most surprising information as the model reads a text sequence. As a result, regular self-attention acts as a high-fidelity short-term memory, while the neural memory provides compressed storage that captures long-range dependencies at lower cost. This addresses the “compression dilemma” of linear attention models, which try to encode long contexts into a single fixed-size state and inevitably lose detail.
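The test-time update can be sketched with a linear associative memory. This is a simplification: Titans use a deeper MLP as the memory and make the learning rate θ, the momentum η, and the forgetting gate α data-dependent, whereas here they are hypothetical constants, and the key and value vectors are given directly instead of being projected from the input. Surprise is the gradient of the loss ‖M k − v‖², accumulated with momentum, and α slowly decays old content.

```python
def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def memory_step(M, S, k, v, theta=0.1, eta=0.9, alpha=0.01):
    """One test-time write to a linear associative memory M (d_v x d_k), Titans-style.

    loss(M)  = ||M k - v||^2           gradient: 2 (M k - v) k^T
    S <- eta * S - theta * gradient      surprise with momentum (past + momentary)
    M <- (1 - alpha) * M + S             alpha acts as a forgetting gate
    """
    err = [p - t for p, t in zip(matvec(M, k), v)]
    grad = [[2.0 * e * kj for kj in k] for e in err]
    S = [[eta * s - theta * g for s, g in zip(s_row, g_row)] for s_row, g_row in zip(S, grad)]
    M = [[(1.0 - alpha) * m + s for m, s in zip(m_row, s_row)] for m_row, s_row in zip(M, S)]
    return M, S


def recall_error(M, k, v):
    """Squared error of reading the memory with key k (reading does not update M)."""
    return sum((p - t) ** 2 for p, t in zip(matvec(M, k), v))


# Hypothetical key/value pair; in Titans they are learned projections of the input token.
k, v = [1.0, 0.0], [0.0, 1.0]
M = [[0.0, 0.0], [0.0, 0.0]]
S = [[0.0, 0.0], [0.0, 0.0]]
print("before:", recall_error(M, k, v))
for _ in range(200):
    M, S = memory_step(M, S, k, v)
print("after: ", recall_error(M, k, v))
```

Only M and S change here; in the full architecture the attention and projection weights stay frozen at test time. The small residual error comes from the forgetting gate α continually decaying the memory, which is the remembering-versus-forgetting trade-off in miniature.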

Behrouz et al. show that even their basic Titans architecture scales to context windows larger than 2 million tokens in needle-in-a-haystack tasks. But most importantly, unlike Transformers, Titans can learn new facts at test time. For example, a Titans model reading a long novel could “remember” early plot points in its memory, effectively extending its knowledge dynamically without updating the weights of the core model; only the memory module changes. This is a very interesting and significant difference from the (relatively static) Transformers.

There are open questions remaining, of course: the memory writing policy could be optimized further, it is not obvious how to balance forgetting and remembering, and learning at test time may make the architecture harder to implement efficiently. Still, I believe that the promise of memory-augmented models is significant, and Titans are an important step towards a more principled long-term memory module. If (when) these architectures mature, we could see LLMs capable of reading and reasoning over book-length texts or years of documentation with true long-term coherence.

Conclusion

The AI landscape may be entering a transition period. While Transformers will continue to dominate the near-term horizon—they're battle-tested, scalable, and extremely capable—the architectural innovations we have explored in this post may be the first serious contenders to the Transformer's throne.

Each addresses fundamental limitations of the current paradigm: diffusion models tackle generation speed and controllability, Mamba addresses context length scalability, and Titans offer a path toward persistent memory and test-time adaptation. In essence, Mamba and Titans are different approaches to adding memory to language models, as illustrated below:

Together, they paint a picture of future AI systems that are faster, more efficient, and capable of much deeper reasoning across longer contexts.

For businesses, these advances will translate into tangible benefits: reduced latency for customer-facing applications, lower inference costs, and AI assistants that can reason across entire codebases or company knowledge bases. The future beyond Transformers is not just an academic curiosity—it is where tomorrow's competitive advantages are being built today. We do not know yet if these specific architectures will dethrone the Transformer or if they are mere stepping stones to even better ideas, but it seems that there are still more advances to be made, and first movers to these advances will definitely have an advantage.

Are you ready?
