As AI evolves from large language models to more powerful reasoning models, computational and security demands on enterprises keep rising. The next leap in AI—what some call “near-AGI”—will require robust, always-on infrastructure that can scale on demand, comply with strict regulations, and safeguard sensitive data. Apolo addresses these critical needs with a fully integrated, on-prem solution designed in partnership with data centers. Combining hardware readiness, advanced software tooling, and best-in-class security, Apolo simplifies everything from model distillation to inference at scale. By adopting Apolo, organizations can embrace the cutting edge of AI capabilities—without compromising control, privacy, or compliance.

The Coming AI Disruption – Are You Ready?

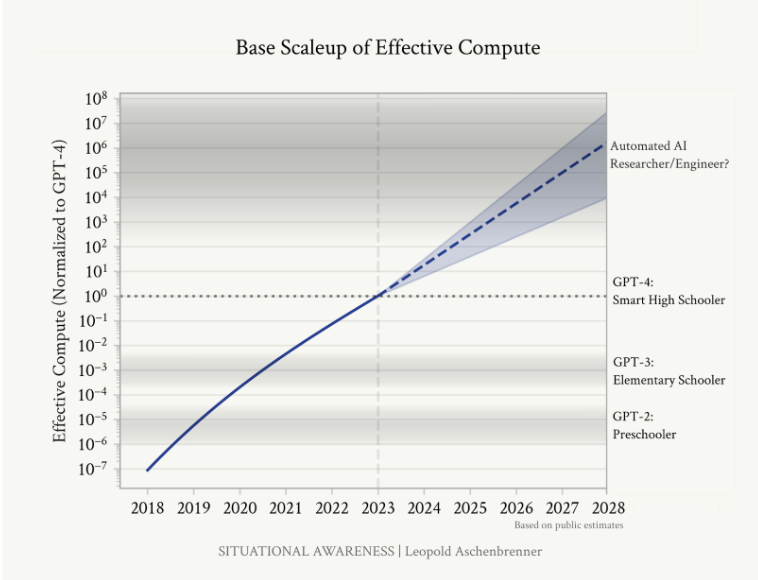

When Will We Get AGI? You don’t have to fully “believe in AGI” to understand what’s coming next; just follow the trendlines. In the span of just four years, we jumped from GPT-2 to GPT-4, a roughly 100,000x increase in effective compute. Some experts, like Leopold Aschenbrenner, posit that practical AGI could appear by 2027.

Other, more skeptical experts place AGI in the 2030–2040 window, with the current median prediction by professional forecasters being around 2030. Wherever you land on that timeline, one thing is clear: AI will be everywhere, in everything, transforming entire industries from the ground up.

Right now, the conversation is dominated by who will win the “race to AGI”: OpenAI, Google DeepMind, Anthropic, Mistral, xAI. But that’s the wrong question. There is no moat. Open-source and open-weight AI is moving very fast: breakthroughs spread too quickly, and the cycle of improvement is too relentless, for any one player to maintain an enduring lead.

The Rise of Reasoning Models. You have likely heard that the Chinese AI company DeepSeek released its R1 reasoning model: in essence, a replication of OpenAI’s o1 series, but at a fraction of the cost and with open weights. The figure of $5.5M for training DeepSeek-V3, the base model on which R1 is built, gets thrown around a lot. However, it is misleading: it does not take into account any previous training runs (and we know that the DeepSeek team built their own cluster, which cost orders of magnitude more than $5.5M) or team compensation (top AI researchers don’t come cheap).

Still, now that the work is done, anyone can download the R1 model weights and run the model themselves, locally, on hardware costing under $10K. With a bit more hardware, you can fine-tune available open models for your own tasks or datasets, which requires nothing close to the hardware needed to train a model from scratch.

However, recent reasoning models such as R1 and o1 bring one huge novelty: they get smarter the more tokens they spend at inference time. This means that even though only leading labs really need enough hardware to train a foundation model, any company unwilling to send all its data to OpenAI or DeepSeek will need substantial computational resources just to run such a model.

So, what does all this imply for businesses trying to adopt AI now? All of the above leads to two conclusions:

- the AI landscape will remain highly competitive, with no single winner;

- the challenge is not just training better foundational models—it is just as much about deploying them at scale, securely, and in a way that gives businesses real control and sufficient computational resources.

This is where most companies will fail, and this is where Apolo can help you. Below, we will discuss this thesis in more detail. We will start from the latest news on reasoning models, then explain why frontier AI labs cannot be the only solution for the ongoing AI revolution, and finally introduce the vision and reasoning behind Apolo.

Reasoning Models: A New Scaling Law

From LLMs to Reasoning Models. First, let us dive into the computational needs of modern AI.

For a long time, the discussion in this regard had been dominated by model training: massive clusters of GPUs and TPUs crunching data, updating weights, and pushing the limits of compute efficiency. After a frontier model has been trained, there still remains the problem of serving it to customers, and, of course, if you are OpenAI aiming for hundreds of millions of users, this also requires a significant investment. But for a “regular-sized” company that needs to serve hundreds or thousands of employees and/or clients, hosting and serving a fine-tuned LLM would not be much of a burden: it could be done with several dozen GPUs rented on Amazon or installed in a preexisting small data center.

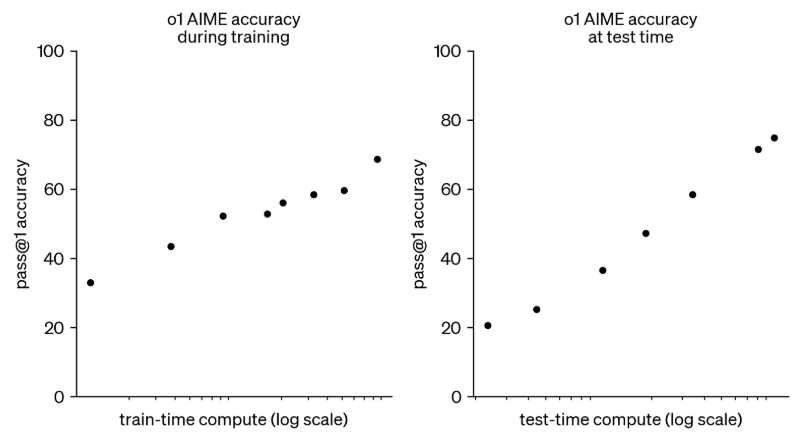

Large reasoning models (LRMs) such as OpenAI’s o1 and o3 or DeepSeek’s R1 are changing this landscape as we speak. The famous plot from the announcement of o1-preview back in September 2024 shows why: accuracy keeps improving as test-time compute grows.

Starting from o1-preview, LLMs have learned to think over a problem, ponder, spend inference-time resources, and generate intermediate tokens that are actually helpful. Note the logarithmic scale on the X-axis of the plot above: each further gain in accuracy requires multiplying the compute, so you often need far more tokens. Six months is an eternity in AI years, but this is still the most significant development on the AI scene: OpenAI’s o1 and now DeepSeek R1 have unlocked a new scaling law.

Distillation: From Strong Models to Your Own. Moreover, DeepSeek and other o1 replications have already shown how to achieve similar performance at a fraction of the training cost. I’m not just talking about the $5.5M figure for training DeepSeek-V3: how about s1, a reasoning model that Stanford researchers and their collaborators obtained by fine-tuning an open model on a mere 1,000 distilled examples, for a total training cost of about $50? It does not outperform o1 and R1, of course, but it comes close enough to show that once someone, somewhere has reached a new performance level, closing in on that level is much easier.

But even if you can distill a reasoning model for your company for a few hundred bucks, you still need somewhere to run it. Therefore, the next battle isn't just about training—it's about inference at scale. The AI giants already know this: running LLMs at production levels is an infrastructure challenge, and demand for test-time compute is exploding.

To sum up, by now, there are several scaling laws (the original OpenAI scaling laws and Chinchilla are still valid!), each adding to the amount of compute companies need right now or will need very soon:

- you can scale up the model size, which increases computational requirements for training;

- you can collect more data, which increases both compute and storage needed for training;

- as usual, you can scale up how much you can do per unit of time by parallelizing both training and (especially) inference;

- and finally, as o1 and R1 have shown, you can scale up how many tokens you use per query, improving the reasoning capabilities of your model at the cost of additional compute per query.
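To make these scaling directions concrete, here is a back-of-the-envelope sketch in Python. It relies on two widely used approximations rather than anything specific to Apolo: training a dense transformer with N parameters on D tokens costs roughly 6·N·D FLOPs, and generating one token costs roughly 2·N FLOPs (prompt processing and attention overhead ignored). The Chinchilla rule of thumb of about 20 training tokens per parameter, the 32B model size, the 500 vs. 10,000 token budgets, and the daily query volume are illustrative assumptions; for mixture-of-experts models such as R1, N should be the number of active parameters.

```python
"""Back-of-the-envelope compute estimates (standard approximations, not exact)."""


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: C ~ 6 * N * D (forward and backward passes)."""
    return 6.0 * n_params * n_tokens


def chinchilla_optimal_tokens(n_params: float) -> float:
    """Chinchilla rule of thumb: roughly 20 training tokens per parameter."""
    return 20.0 * n_params


def inference_flops_per_query(n_params: float, output_tokens: float) -> float:
    """Approximate generation compute: ~2 * N FLOPs per generated token.

    Ignores prompt processing and attention overhead; for MoE models,
    pass the number of *active* parameters.
    """
    return 2.0 * n_params * output_tokens


if __name__ == "__main__":
    n = 32e9  # assumed: a 32B-parameter dense model
    d = chinchilla_optimal_tokens(n)
    print(f"training compute: {training_flops(n, d):.2e} FLOPs")

    short_answer = inference_flops_per_query(n, 500)  # assumed plain answer length
    reasoning = inference_flops_per_query(n, 10_000)  # assumed reasoning trace length
    print(f"one reasoning query costs {reasoning / short_answer:.0f}x a short answer")
    # Daily load for an assumed 100,000 reasoning queries per day.
    print(f"daily inference: {100_000 * reasoning:.2e} FLOPs")
```

Under these assumptions, a query that reasons for 10,000 tokens costs about 20 times as much compute as a 500-token answer from the same model, which is exactly the new scaling axis described in the last bullet.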

So, the requirements for compute are still going up, and there is no end in sight. However, new AI adopters will need more than just pure computational power. And let’s face it—who wants to trust a potentially game-changing AI model with all their proprietary data, anyway?

The AI Infrastructure Challenge

Today, AI is no longer just about impressive demos or proof-of-concept pilots; it’s about operational, always-on systems that integrate with critical business workflows. But the path to getting there isn’t straightforward, especially considering the following.

The Risk Factor: AI Is Getting Too Damn Powerful. With the breakthroughs in reasoning models we have considered above, AI systems are now capable of sophisticated problem-solving, pattern detection, and decision-making—often exceeding the average human skill level in specific tasks. This raises concerns in several areas:

- data confidentiality: if you’re an insurance company, sharing proprietary actuarial tables with a powerful external model might feel like handing over the keys to your kingdom; powerful AI tools will likely be widely available to everyone within months, so the real competitive moat in tomorrow’s business landscape will hinge on how securely you manage and deploy them;

- competitive edge in the models themselves: we have discussed that additional fine-tuning can be relatively easy to do; an AI model fine-tuned or distilled with your data can “understand” your business very well, but it can be a double-edged sword; used wisely, it’s a force multiplier, but if it’s not contained or is accessible to competitors, you risk losing your unique advantage.

Whether in the form of raw data or trained models, the only moat a company might have in a world like this is protecting their information, and this is where data centers can make the most important contributions. In the AI-rich world of tomorrow, control over your data and the inputs and outputs of your AI models will be more important than profit margins. The entire AI economy will depend on secure, private AI deployment.

Regulatory and Compliance Pressures. Companies in many sectors, including finance, healthcare, defense, and others, operate under strict regulatory frameworks. Many of these frameworks prohibit data from leaving on-prem servers or crossing certain borders. In particular:

- cloud-based models are often restricted: many compliance rules forbid uploading sensitive data to a third-party cloud; in this case, even if a company wants to harness the power of large-scale model providers, it may be legally required to host sensitive workloads internally;

- auditability and traceability requirements: as AI models grow more capable and their outputs become more consequential, regulators increasingly demand a paper trail, so a company relying on AI models will need infrastructure to log data usage, ensure transparency, and meet new auditing standards (e.g., those of the recently adopted EU AI Act).
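What might such a paper trail look like at the infrastructure level? The sketch below is a hypothetical, minimal tamper-evident audit log in Python, not Apolo’s implementation and not a format mandated by the EU AI Act. Each entry records who queried which model along with a SHA-256 digest of the prompt (so the log itself does not store sensitive text), and each entry commits to the hash of the previous one, so editing an earlier record breaks verification. The class name, field names, users, and model names are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only audit log; each entry commits to the previous one."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the hash does not depend on dict order.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user: str, model: str, prompt: str, timestamp: float) -> dict:
        record = {
            "user": user,
            "model": model,
            # Store only a digest of the prompt, not the sensitive text itself.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "timestamp": timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; editing, reordering, or deleting an earlier entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("analyst-17", "claims-model-v2", "Summarize Q3 claims", 1_700_000_000.0)
    log.append("auditor-03", "claims-model-v2", "List flagged policies", 1_700_000_060.0)
    print(log.verify())  # True
    log.entries[0]["user"] = "someone-else"  # simulate tampering
    print(log.verify())  # False
```

Hash chaining makes tampering detectable, not impossible: silently dropping the newest entries or rewriting the whole chain would still pass, so a production system would also need to protect the latest hash, for example by periodically recording it somewhere outside the operators’ control.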

The Staggering Rate of AI Evolution. AI capabilities are improving faster than almost any other technology in modern history. A system that was state-of-the-art six months ago often cannot hold a candle to today’s solutions. Models like GPT-4o, o3, or R1 are sure to be outperformed by next-gen systems that will take reasoning capabilities, advanced fine-tuning techniques, or specialized domain knowledge to new heights. This progress may stop or run into physical limits—no exponential can go on forever—but it does not seem likely to happen in the near future. We are in for at least a few more years of rapid expansion of capabilities.

Note that progress has two sides: while top-of-the-line models may keep becoming larger and more expensive, algorithmic and hardware advances mean you can get the same performance for less. Training, inference, and hardware costs can fluctuate wildly, and results that are bleeding-edge and very expensive this quarter may become mainstream and 100x cheaper in the next, as new hardware or model architectures appear.

The usual scaling challenges also remain. Scaling your AI model from 10 to 10,000 queries per second might force a massive shift in your hardware design, network architecture, and budgeting. Being unprepared can mean massive unplanned spending or, worse, downtime.
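The jump from 10 to 10,000 queries per second is easy to underestimate, and reasoning models make it steeper. Here is a rough capacity estimate in Python, assuming a hypothetical aggregate throughput of 2,500 generated tokens per second per GPU (with batching) and a 60% target utilization to leave headroom for spikes. Both numbers, and the function name, are placeholders: real throughput depends heavily on the model, the hardware, and the serving stack, so measure your own before planning.

```python
import math


def gpus_needed(qps: float, tokens_per_query: float,
                tokens_per_sec_per_gpu: float, utilization: float = 0.6) -> int:
    """Rough number of GPUs needed to sustain a given query rate.

    tokens_per_sec_per_gpu is the aggregate generation throughput of one GPU
    under batching; utilization leaves headroom for traffic spikes.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    required = qps * tokens_per_query  # tokens per second the fleet must generate
    per_gpu = tokens_per_sec_per_gpu * utilization
    return math.ceil(required / per_gpu)


if __name__ == "__main__":
    THROUGHPUT = 2_500  # assumed tokens/s per GPU; measure your own stack
    for qps in (10, 10_000):
        for tokens in (500, 10_000):  # assumed short answer vs. reasoning trace
            print(f"{qps:>6} QPS x {tokens:>6} tokens -> "
                  f"{gpus_needed(qps, tokens, THROUGHPUT):>6} GPUs")
```

With these placeholder numbers, going from 10 to 10,000 queries per second takes the deployment from 4 GPUs to 3,334 for short 500-token answers, and switching the same traffic to 10,000-token reasoning traces multiplies the count by roughly 20 again.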

Can You Actually Trust Large Model Providers? We all rely on major AI labs to push the envelope; I personally use OpenAI’s models all the time and trust them with a lot of personal information. But placing the entire future of your company in their hands raises questions:

- legal issues: as discussed above, sending sensitive data to an external provider may be downright illegal;

- strategic dependence: if OpenAI changes its pricing model or usage policies, or if DeepSeek decides to restrict open-weight releases, what will you do?

- security risks: exposing your data to external APIs, no matter how robust the provider’s security, still means sending it outside your direct control; potential data breaches, insider threats, or even government interventions are not beyond the realm of possibility;

- actual trust: apart from legal issues or third-party attackers, do you really trust large AI labs, e.g., not to train on your data? And if they do, what if the next iteration of their models does your job better than you do and puts you out of business?

Therefore, most enterprise customers and service providers still have a long way to go before they are ready for the tidal wave of change that contemporary AI models can bring. This is where Apolo can help.

Introducing Apolo

Apolo’s Vision. Apolo is the perfect solution for this infrastructure challenge. It is a collection of tools that ensure the private and secure deployment of AI models for data centers and individual businesses: a comprehensive ecosystem designed for the age of Enterprise-Coordinating Artificial General Intelligence (ECAGI). Apolo will ensure regulatory compliance and future-proof your business.

Today’s AI models aren’t just “smart assistants” anymore. With the rise of reasoning models like R1 and o1, we stand on the threshold of true general-purpose intelligence—the kind that can reshape whole industries. Apolo is built specifically for this new world, creating a secure environment where organizations can deploy and coordinate advanced AI agents that understand their data, workflows, and objectives. Apolo includes:

- a hybrid software-hardware approach: Apolo isn’t just another software stack; we are collaborating with data centers to integrate with them and ensure that all on-prem hardware meets the security and performance standards needed for tomorrow’s AGI demands;

- interoperability: our tooling connects seamlessly with open-source frontier models and smaller distilled agents, giving customers maximum flexibility while still being efficient for on-prem data center hardware;

- security at every layer: from encryption protocols to role-based access controls, Apolo builds cybersecurity directly into its architecture; we have already discussed how crucial security will be in an age where your data is your largest competitive edge, and Apolo is designed from the ground up with security in mind.
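Role-based access control is the most familiar of these layers. The sketch below shows the general deny-by-default pattern in Python; the roles, permissions, and function name are hypothetical illustrations, not Apolo’s actual configuration or API. Exporting model weights is reserved for administrators here, reflecting the point made earlier that a fine-tuned model can itself be a competitive asset.

```python
from enum import Enum, auto


class Permission(Enum):
    QUERY_MODEL = auto()
    FINE_TUNE_MODEL = auto()
    VIEW_AUDIT_LOG = auto()
    EXPORT_WEIGHTS = auto()


# Hypothetical roles, each mapped to the permissions it grants.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "analyst": frozenset({Permission.QUERY_MODEL}),
    "auditor": frozenset({Permission.VIEW_AUDIT_LOG}),
    "ml_engineer": frozenset({Permission.QUERY_MODEL, Permission.FINE_TUNE_MODEL}),
    "admin": frozenset(Permission),  # every permission, including weight export
}


def is_allowed(user_roles: set[str], needed: Permission) -> bool:
    """Deny by default: allow only if some known role grants the permission."""
    return any(needed in ROLE_PERMISSIONS.get(role, frozenset()) for role in user_roles)


if __name__ == "__main__":
    print(is_allowed({"ml_engineer"}, Permission.FINE_TUNE_MODEL))  # True
    print(is_allowed({"analyst"}, Permission.EXPORT_WEIGHTS))  # False
    print(is_allowed({"contractor"}, Permission.QUERY_MODEL))  # False: unknown role
```

Unknown roles and empty role sets grant nothing, so a misconfigured account fails closed rather than open.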

Data Centers Are Key to the Next Level of the AI Revolution. We believe that in the race to harness new AI capabilities, data centers will play a bigger role than ever before, possibly overshadowing cloud hyperscalers and even big-name AI labs. Here is why:

- data sovereignty and compliance: legal frameworks are tightening (just read any discussion of the EU AI Act to get a feel for it), and many industries cannot push sensitive data into third-party clouds; Apolo turns this into an opportunity for data centers that can offer a secure on-prem environment;

- ECAGI as a strategic asset: the AI progress that we have discussed above shows how even simple distillation can bring state-of-the-art models to your own business, so instead of relying on black-box external models accessible via third-party interfaces or APIs, companies can fine-tune and run their own industrial-strength models right inside the data center;

- future-proofing and easy expansion: the AI landscape is evolving extremely fast, and some models will get bigger, others smaller and cheaper, new paradigms will emerge, and scaling may be required unexpectedly. Apolo is designed for agility in the face of this change: a company’s hardware needs may shift abruptly, and Apolo can scale elastically when usage jumps from 10 queries per second to 10,000 or back. This is an important argument for companies to use on-prem solutions at specialized data centers, where such scaling is frictionless, instead of building their own clusters.

ECAGI will make data center colocation, with Apolo providing the ECAGI environment, the most valuable asset of any company. Apolo is preparing an environment ready to start receiving ECAGI within two years. Corporations cannot do this themselves: they are missing key pieces.

Within a year or two, every data center will become, by necessity, an AI data center—and with Apolo, you can transform your facility into the most valuable asset for any organization preparing for the new age of AI. Apolo ties it together, offering you a transparent, compliant, and future-proof path toward ECAGI deployment. Data centers and enterprises can stand at the forefront of the AI revolution, and Apolo will be the driving force behind them.
