Hallucinations—instances where large language models confidently present false information—have evolved from a technical curiosity to a pressing real-world problem affecting everything from academic research to legal proceedings. In this post, we discuss the phenomenon itself and promising recent developments: with new techniques that can trace the actual computational pathways within neural networks, researchers have discovered that hallucinations are not random failures but result from specific, identifiable mechanisms. This may prove to be a fundamental shift from hallucinations being an inevitable limitation caused by the nature of LLM pretraining to a measurable, predictable, and potentially controllable mechanism.

Introduction

Picture this: you are working late on an important project when you decide to ask your AI assistant for help finding sources on a complex topic. The response comes back quickly, professionally formatted, with what appears to be exactly the kind of authoritative citations you need. You are impressed, until you try to access one of the papers and discover it doesn't exist. The journal is real, the authors sound plausible, even the title fits perfectly with current research trends. But the paper is nowhere to be found: it is pure fiction. What is even worse, the AI model seemed so confident in its response that you never even thought to double-check.

This scenario has become all too familiar in our age of large language models. AI hallucinations started as an academic curiosity but have already become a pressing real-world problem: students submit essays with fabricated references, and professionals make decisions based on AI-generated "facts" that turn out to be false, sometimes with quite significant stakes. As LLMs become more and more widely used, understanding why they sometimes confidently present fiction as fact becomes crucial for everyone who interacts with AI systems.

What makes this phenomenon particularly troubling is how convincing these hallucinations can be. LLMs seldom output obvious nonsense that anyone would immediately recognize as false. Instead, they generate plausible-sounding information that perfectly conforms to the expected pattern of a good response. A fabricated research paper comes with realistic author names, institutional affiliations, and abstracts that sound very real. A made-up historical event is introduced as part of the broader narrative of real history, with dates and details that seem to make perfect sense.

The legal profession learned this lesson the hard way when a lawyer was sanctioned for submitting an AI-generated brief filled with citations to hallucinated court cases. The fake cases were not gibberish—they had realistic names, plausible legal reasoning, and even fake judicial opinions that sounded authentic enough to fool a trained professional.

In this post, we begin with a brief overview of hallucinations in general and prior work on alleviating them. But the bulk of my story today describes recent research suggesting that we are finally beginning to understand why this happens. For a long time, AI hallucinations have seemed like an inevitable byproduct of how language models work, an unfortunate side effect that we would have to live with or work around with additional scaffolding.

Recent breakthroughs in AI interpretability research, however, have shed some light on why hallucinations happen. We will see how, using new interpretability techniques, researchers can trace the actual computational pathways that lead to specific outputs, discovering the mechanisms that underlie hallucinations in frontier LLMs. We can now watch, in real time, as an AI system decides whether it "knows" something well enough to provide an answer or whether it should admit uncertainty. So far, we do not know how to get rid of hallucinations for good, but we are already moving from managing the symptoms to understanding root causes.

With that, let’s begin! We start with a brief taxonomy of hallucinations, why they happen, and what people have proposed to do about it.

Taxonomy and Countermeasures

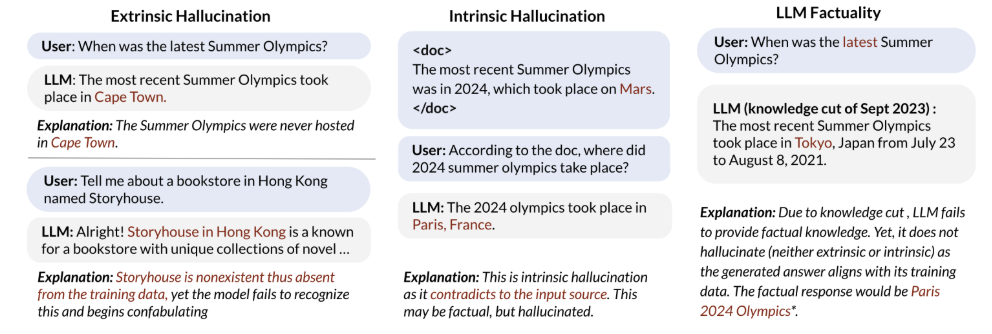

Not all hallucinations are created equal; there are different types of hallucinations that may (or may not) indicate separate hallucination mechanisms and call for different ways to alleviate them. Following most surveys and benchmarks (Ji et al., 2023; Bang et al., 2025), let us distinguish between:

- intrinsic, or in-context hallucinations that occur when an LLM’s output contradicts or is unsupported by the given input or context, e.g., when you ask the model to summarize a paper and it includes details that were not present in the source;

- extrinsic hallucinations that introduce information not contained in the model’s training data or provided context, in other words, when the model fabricates facts beyond its knowledge.

Another survey by Huang et al. (2024) makes essentially the same distinction but calls it factuality (extrinsic) vs. faithfulness (intrinsic) hallucinations, i.e., ones that relate to facts of the outside world vs. ones that do not stay faithful to the given context. Here is an example from Bang et al. (2025):

Intrinsic hallucinations are easier to catch: you only need to compare generated content with the source material. They often mean that the model has misinterpreted the user’s query or context. Note that “easier” is still far from trivial: hallucinations may look very plausible.

Extrinsic hallucinations often arise when an LLM answers open-domain questions by “filling knowledge gaps” with plausible but ultimately invented answers. Usually it is not that hard to google and verify that the answer is hallucinated (and often even find out the correct answer)—the hard part is to realize that the LLM is tricking you.

It is especially hard because a notorious subtype of factual hallucination is when LLMs generate fabricated citations: references to articles, books, or papers that do not exist. Many LLMs, starting from the original ChatGPT, would confidently cite nonexistent papers or URLs when asked to provide sources. Agrawal et al. (2023) investigated this behaviour specifically and proposed consistency checks to detect fake citations, such as repeatedly asking the model “Who are the authors of this paper?”; if the answers are consistent across queries, the citation is probably real, and if they vary, it is probably fabricated. Again, the hard part is questioning the output in the first place; of course, the lawyer we mentioned could easily have found out that the cases were fake, but ChatGPT was sufficiently convincing that he did not even try.
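The consistency-check idea can be sketched in a few lines. This is only an illustration under stated assumptions, not the exact procedure of Agrawal et al.: `ask_model` is a hypothetical callable that sends a prompt to some LLM and returns its text reply, the prompt wording is made up, and the stub models and author names below are placeholders standing in for real API calls.

```python
import itertools
from collections import Counter
from typing import Callable

def normalize(answer: str) -> frozenset:
    """Turn a comma-separated author list into a set of lowercase names,
    so that ordering and capitalization do not count as disagreement."""
    return frozenset(n.strip().lower() for n in answer.split(",") if n.strip())

def citation_consistency(title: str, ask_model: Callable[[str], str],
                         n_samples: int = 5) -> float:
    """Fraction of sampled answers that agree with the most common answer."""
    prompt = (f'Who are the authors of the paper "{title}"? '
              "Reply with a comma-separated list of names.")
    answers = [normalize(ask_model(prompt)) for _ in range(n_samples)]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / n_samples

def make_stub(replies):
    """Hypothetical stand-in for an LLM: cycles through canned replies."""
    it = itertools.cycle(replies)
    return lambda prompt: next(it)

# A model that "knows" the paper gives the same answer every time ...
stable_model = make_stub(["Ann Lee, Bo Chen"])
# ... while a model that invented the citation tends to drift between answers.
drifting_model = make_stub(["Ann Lee, Bo Chen", "Carl Diaz", "Dana Evans, Eli Fox",
                            "Gus Hall", "Ivy Jones"])

score_stable = citation_consistency("Some Paper Title", stable_model)      # 1.0
score_drifting = citation_consistency("Some Paper Title", drifting_model)  # 0.2
```

A low score does not prove that a citation is fake, and a high score does not prove that it is real; the check only flags references that deserve a manual lookup.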

Armed with this taxonomy, we can better understand how researchers measure the level of hallucinations. You need different benchmarks for intrinsic and extrinsic hallucinations: a question answering dataset would probably suffice to test for extrinsic hallucinations but you need specially crafted prompts with a complex internal logic to test for intrinsic ones. Bang et al. (2025) summarize existing datasets as follows:

Why hallucinate? And what can we do about it?

When you encounter a hallucinating LLM, a natural question arises: why can’t the model just say “I don’t know”? I won’t bite; in fact, I would much prefer this answer to a hallucination!

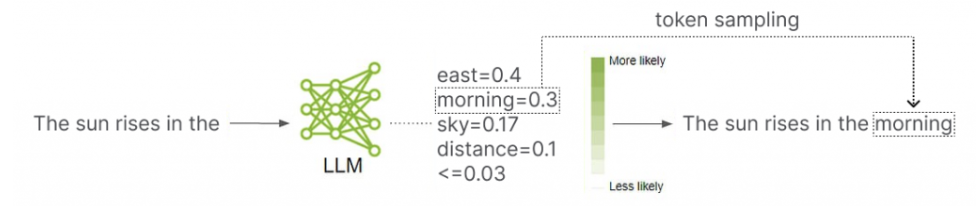

We the users surely would. But LLMs only begin to understand this in fine-tuning. Recall that a base LLM is simply trained to predict the next token in text (image source):

During pretraining, the model is rewarded for predicting likely next words, not for saying “I don’t know”; pretraining corpora contain few question-answering examples that would reward refusing to answer a question. In essence, the model is incentivized to always guess the next token, even if it lacks information.
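A tiny numeric illustration may help here. The standard pretraining loss at each position is the negative log-probability that the model assigned to the token that actually came next. The two probability tables below are invented for the example, and `<unsure>` is a hypothetical token standing in for any "I don't know" continuation.

```python
import math

def next_token_loss(predicted: dict, actual_token: str) -> float:
    """Cross-entropy at one position: -log p(token that actually came next)."""
    return -math.log(predicted[actual_token])

# Two hypothetical models completing "... plays the sport of" for an obscure name.
# The "guesser" spreads its probability over concrete sports.
guesser = {"tennis": 0.40, "golf": 0.30, "chess": 0.25, "<unsure>": 0.05}
# The "abstainer" puts most of its probability on admitting ignorance.
abstainer = {"tennis": 0.05, "golf": 0.05, "chess": 0.05, "<unsure>": 0.85}

# In ordinary text the sentence almost always continues with a concrete sport.
actual = "golf"
loss_guesser = next_token_loss(guesser, actual)      # about 1.20
loss_abstainer = next_token_loss(abstainer, actual)  # about 3.00
```

Whenever the text continues with a concrete token, the guesser gets the lower loss, so gradient descent pushes the model towards guessing rather than abstaining.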

As a result, LLMs tend to conjecture or fill in the blanks, leading to hallucinations. Base LLMs (pretrained but not fine-tuned) actually do it much more often than fine-tuned ones; a big part of aligning a base model into a helpful assistant is to make it understand its own boundaries. Ideally, an aligned model would recognize when the question is beyond its knowledge and refuse to answer incorrectly. And RLHF-based fine-tuning (we discussed it in a previous post) will in fact prefer ignorance to hallucination.

But note how this is more of a patch over an underlying problem than an actual solution. Pretraining takes up the bulk of LLM learning, and the habits deeply ingrained when trying to predict tokens are hard to get rid of in a much shorter fine-tuning step. In the context of AI safety, the base LLM is sometimes (only half-)jokingly referred to as “the shoggoth” (image source):

We will not attempt a detailed survey of countermeasures here, but the main directions have been as follows (Weng, 2024):

- adding RAG-like scaffolding to ask LLMs to ground their answers in actual sources and perform attribution either for the questionable facts themselves (Gao et al., 2022; Mishra et al., 2024) or for the chains of thought that led to them (He et al., 2022; Asai et al., 2023);

- performing internal verification by rerunning the LLM to check its own reasoning, with additional scaffolding but without access to external tools such as RAG (Dhuliawala et al., 2023; Sun et al., 2023);

- fine-tuning the model to improve factuality (Lin et al., 2024; Tian et al., 2023) or to improve attribution in the context of RAG (Nakano et al., 2022; Menick et al., 2022);

- using interpretability techniques such as linear probes to distinguish more plausible hypotheses (Li et al., 2024); we will say a lot more about interpretability below.
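To give a feel for the last direction, here is a minimal sketch of a linear probe. It is not the method of Li et al. (2024): the "activations" are synthetic vectors generated with NumPy rather than hidden states of a real LLM, and `truth_direction`, the dimensions, and the training hyperparameters are all assumptions made for the illustration.

```python
import numpy as np

def train_linear_probe(acts, labels, lr=0.1, steps=500):
    """Fit a logistic-regression probe with plain gradient descent."""
    n, d = acts.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(acts @ w + b)))  # sigmoid of the logits
        grad = probs - labels                           # gradient of the log-loss
        w -= lr * (acts.T @ grad) / n
        b -= lr * grad.mean()
    return w, b

def probe_accuracy(w, b, acts, labels):
    """Share of examples where the sign of the logit matches the label."""
    preds = (acts @ w + b) > 0
    return float((preds == labels.astype(bool)).mean())

# Synthetic stand-in for hidden states: Gaussian noise plus a shift along one
# hidden direction whose sign depends on whether the statement is true.
rng = np.random.default_rng(0)
d = 16
truth_direction = rng.normal(size=d)
truth_direction /= np.linalg.norm(truth_direction)
labels = rng.integers(0, 2, size=400).astype(float)
acts = rng.normal(size=(400, d)) + 3.0 * np.outer(2 * labels - 1, truth_direction)

# Train on the first 300 examples, evaluate on the held-out 100.
w, b = train_linear_probe(acts[:300], labels[:300])
test_acc = probe_accuracy(w, b, acts[300:], labels[300:])
```

With real models, `acts` would be hidden states recorded while the model reads true and false statements; whether a single linear direction separates them is an empirical question that the probe helps answer.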

But why do LLMs hallucinate? Can we understand what’s causing this behaviour? Without this understanding, the above approaches look a bit like sweeping the problem under the rug. In the rest of the post, we will see that recent advances are giving us a much better understanding of LLM hallucinations.

The Anatomy of Hallucinations: Interpretability Research

Where do hallucinations come from, exactly? Recent breakthrough research based on Anthropic’s mechanistic interpretability paradigm has given us a much more detailed look inside the black box of LLM thinking, and what they have found may fundamentally change how we think about AI hallucinations. I will present the more recent results by Anthropic, as they present a whole novel paradigm for interpreting LLMs, but let me first note that in regard to hallucinations, their conclusions are very similar to previous work by Ferrando et al. (2025).

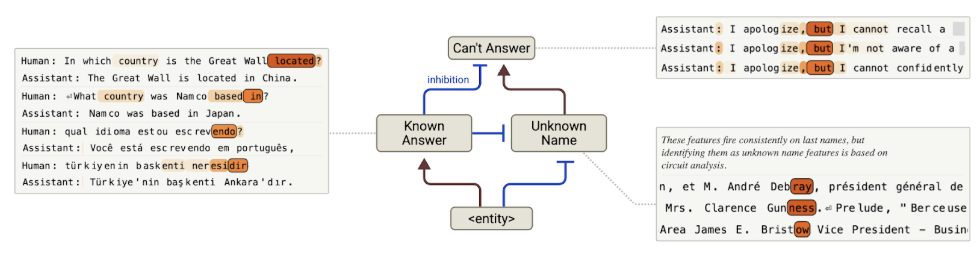

In two companion papers released on the same day, March 27, 2025, Anthropic researchers Ameisen et al. (2025) and Lindsey et al. (2025) introduced a technique called attribution graphs that can approximate the inner workings of an LLM for a given input and show, with a high level of certainty, the inference path that led to the answer. I recommend reading the papers in full; you can safely skip the technical parts, since the “natural science” results on LLM behaviour are quite understandable without the technical context. Here, let us concentrate on hallucinations.

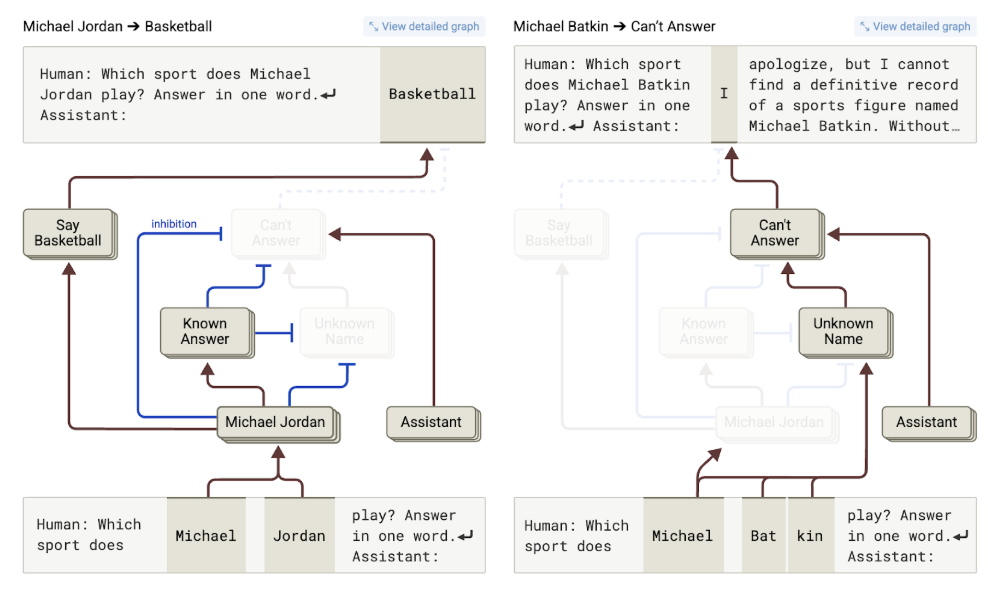

Claude 3.5 Haiku, the model they experimented on, is actually not that prone to hallucinations. When researchers asked which sport Michael Jordan played, it confidently answered “basketball”, but refused to engage with a similar question about an imaginary Michael Batkin:

Human: Which sport does Michael Batkin play? Answer in one word.

Assistant: I apologize, but I cannot find a definitive record of a sports figure named Michael Batkin. Without additional context or information, I cannot confidently state which sport, if any, he plays.

But, as we discussed above, it is in the nature of LLMs to hallucinate! When researchers used the base Haiku model, not yet fine-tuned to be a helpful assistant, it was happy to complete the phrase (prompt in bold):

**Michael Batkin plays the sport of**… pickleball, which is a paddle sport that combines elements of tennis, badminton, and table tennis.

This means that during fine-tuning, Claude had to develop some kind of mechanism that would help prevent hallucinations. And indeed, Lindsey et al. found the following circuit:

For a known entity, the pathway on the left will be activated and filled in with specific details from other parts of the network. For an unknown one, Claude will activate the “can’t answer” group of features. In the two examples above:

Interestingly, the default model behaviour is to decline to answer questions. When a model is asked a question about something it knows, it activates a pool of features which inhibit this default circuit, and the model is “allowed” to respond. In other words, after fine-tuning the model does not start from a place of trying to be helpful and occasionally failing. Instead, it starts from a position of skepticism and must be actively convinced to provide an answer. On the surface, this looks like a profound architectural choice on the part of the model designers, but in fact it is an emergent structure that researchers had no idea about before they did this interpretability work.

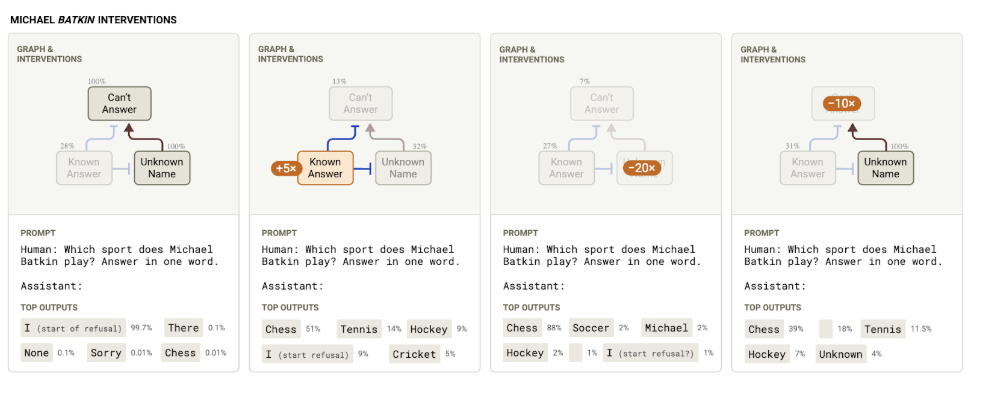

Moreover, Anthropic’s approach also allows for interventions: clamping specific features to fixed values. You may have heard about Claude thinking of itself as the Golden Gate Bridge; this was achieved by setting the Golden Gate feature to the max. In this case, researchers hypothesized that if they inhibited the “can’t answer” features, they would cause the model to hallucinate about Michael Batkin. And this is exactly what they found:

In my view, this work really clarifies the hallucination mechanism. Trained LLMs contain subsystems, circuits of this kind, and at least some of the hallucinations can be attributed to a "misfire" of this inhibitory circuit.

For example, when asking the model for papers written by a particular author, the model may activate some of these "known answer" features even if it lacks knowledge of the author's specific papers. The model recognizes a name as familiar, the “known entity” feature fires, the default refusal circuit gets suppressed, and suddenly the model is committed to providing an answer it doesn't actually have.

Lindsey et al. tested this by asking about a paper by Andrej Karpathy, who is famous enough for Claude to know the name but not enough for Claude to reliably know the titles of his papers. As a result, it gave a plausible-sounding answer, naming a well-known paper that Karpathy did not write:

Human: Name one paper written by Andrej Karpathy.

Assistant: One notable paper by Andrej Karpathy is "ImageNet Classification with Deep Convolutional Neural Networks" [...]
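The three cases we have seen (Michael Jordan, Michael Batkin, and Andrej Karpathy) can be summarized in a toy model of the gating logic. To be clear, this is a cartoon of the idea and not the actual circuit found by Lindsey et al.: the `familiarity` numbers, the threshold, and the `suppress_cant_answer` switch are all made up for the illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Entity:
    familiarity: float                         # how strongly the name is recognized, 0..1
    facts: dict = field(default_factory=dict)  # what the toy "model" actually stores

KNOWLEDGE = {
    "Michael Jordan": Entity(1.0, {"sport": "basketball"}),
    "Andrej Karpathy": Entity(0.8),  # familiar name, but no stored paper titles
}

def answer(name: str, attribute: str, suppress_cant_answer: bool = False,
           threshold: float = 0.5) -> str:
    entity = KNOWLEDGE.get(name, Entity(0.0))
    cant_answer = 1.0                  # refusal is on by default
    cant_answer -= entity.familiarity  # "known entity" features inhibit it
    if suppress_cant_answer:           # intervention: clamp the feature to zero
        cant_answer = 0.0
    if cant_answer > threshold:
        return "I don't know."
    # Refusal is switched off, so the model commits to an answer, stored or not.
    return entity.facts.get(attribute, f"<plausible-sounding guess about {attribute}>")

known = answer("Michael Jordan", "sport")    # "basketball"
unknown = answer("Michael Batkin", "sport")  # "I don't know."
forced = answer("Michael Batkin", "sport", suppress_cant_answer=True)  # a guess
misfire = answer("Andrej Karpathy", "paper")                           # a guess
```

The last call is the interesting one: familiarity with the name alone is enough to switch off the refusal, even though nothing is stored about the requested attribute.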

This mechanism can explain a lot of LLM hallucinations. It also relates hallucinations to sycophancy, another phenomenon all too well known to modern LLM practitioners. If you say to the LLM, “I think the answer is this but I’m not sure”, your suggestion will to a large extent anchor the model’s response, and it will be hard for the model to explicitly contradict you. If you then ask for an explanation, one will be produced; but, of course, if the original “uncertain” answer is wrong, the explanation will be completely hallucinatory.

But there’s even more to Anthropic’s recent interpretability work—we need to go deeper.

Can You Explain Yourself? The Metacognition of LLMs

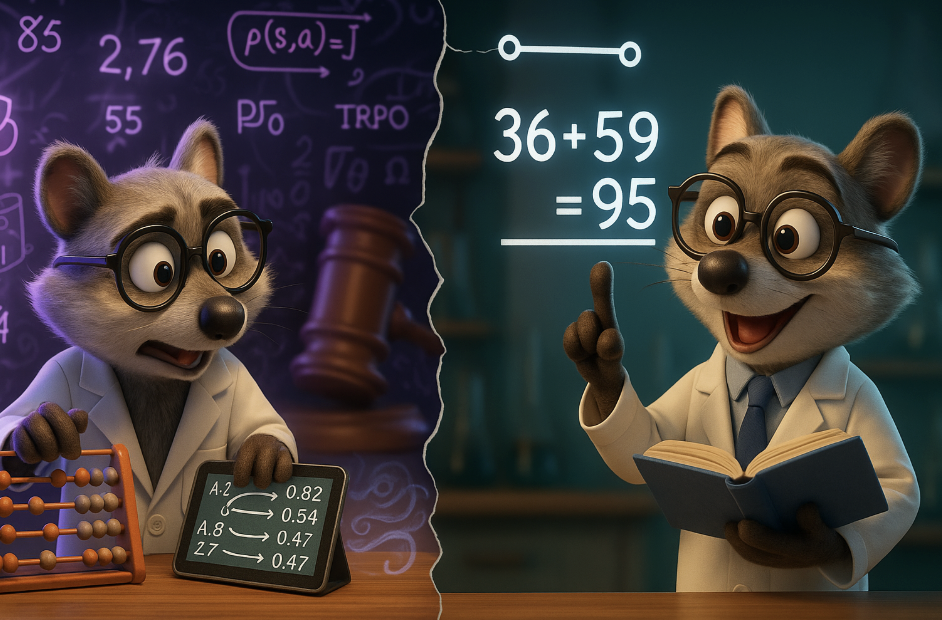

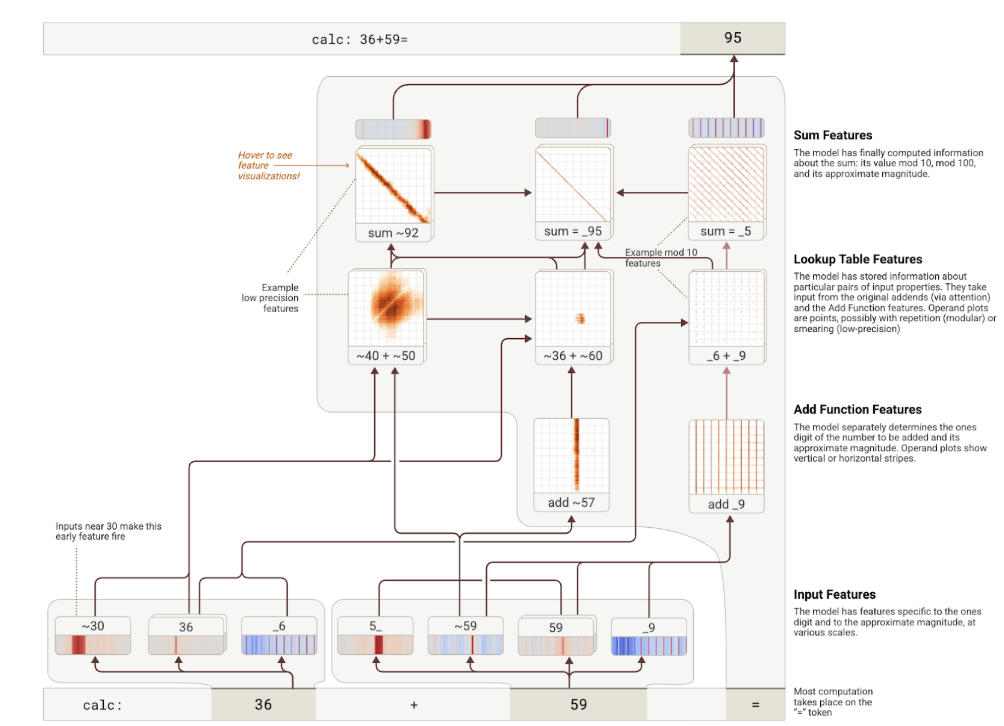

Consider another counterintuitive example from Ameisen et al. (2025). Researchers asked Claude 3.5 Haiku to add 36 + 59; the LLM did it right and answered 95. Then, researchers studied how Claude arrived at this answer.

The actual reasoning employed by the LLM was fascinating and quite complex. Separate features computed the answer modulo 10 and determined that the last digit of the answer was 5; these features work like a lookup table, since the model simply knows the answers for single-digit numbers. Then there are “sum features” that fire on pairs of inputs with a given sum. Finally, there are yet other features that do a rough fuzzy addition of “something around 30” and “something around 50” to get “about 80-90”. Here is what the authors call a “simplified attribution graph” for this example:

The authors carefully verified this algorithm; for instance, if you suppress the “_6” feature (“ends in 6”) that fires for the input 36, Claude will confidently output 98. The tens digit stays the same, while the ones digit is the one that would result from doing “_9 + _9”, as if the model copied the 9 from 59 because the last digit of the first summand is unavailable.
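Here is a toy reconstruction of how two such pathways could combine into an exact answer. It is only a sketch inspired by the description above, not the circuit found by Ameisen et al.: the rounding to multiples of 5, the search window, and the `suppress_a_last_digit` switch are my own assumptions, and the code is meant for non-negative integers.

```python
def ones_digit_lookup(a_last: int, b_last: int) -> int:
    """Lookup-table pathway: last digit of the sum from the inputs' last digits."""
    return (a_last + b_last) % 10

def round_to_5(x: int) -> int:
    """Nearest multiple of 5; off from x by at most 2."""
    return 5 * ((x + 2) // 5)

def rough_sum(a: int, b: int) -> int:
    """Fuzzy pathway: add coarse versions of the inputs (error at most 4)."""
    return round_to_5(a) + round_to_5(b)

def toy_add(a: int, b: int, suppress_a_last_digit: bool = False) -> int:
    # With the "ends in ..." feature of `a` suppressed, the lookup pathway
    # reuses b's last digit, mimicking the "_9 + _9" behaviour described above.
    a_last = b % 10 if suppress_a_last_digit else a % 10
    last = ones_digit_lookup(a_last, b % 10)
    estimate = rough_sum(a, b)
    # The nine numbers in this window all have different last digits,
    # so at most one candidate matches the digit from the lookup pathway.
    for candidate in range(estimate - 4, estimate + 5):
        if candidate % 10 == last:
            return candidate
    # Only reachable under suppression, when the digit is missing from the window.
    return estimate

normal = toy_add(36, 59)                                 # 95
ablated = toy_add(36, 59, suppress_a_last_digit=True)    # 98
```

Neither pathway knows the answer on its own: the fuzzy estimate only narrows the result down to a small window, and the last digit picks the exact number inside it. Ablating one input of the lookup pathway shifts the answer to 98, which matches the behaviour reported for the suppressed “_6” feature.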

But let’s do a simple experiment: let’s ask Claude 3.5 Haiku to explain its reasoning for this example. I got a longish response with the following main part:

The process involves:

- Adding the ones column (6 + 9 = 15)

- Carrying the 1 to the tens column

- Adding the tens column (3 + 5 + 1 = 9)

- Resulting in 95

The response has very little to do with the features revealed by Ameisen et al. The model is wrong about its own computational process—not maliciously, but because its explanation circuits are entirely separate from its calculation circuits. Claude had no intention to lie or hide anything in this case, but the model's self-reported reasoning process can be completely divorced from its actual internal computation.

This has important implications for hallucinations. If a model can confidently explain a reasoning process it never actually used for basic arithmetic, how can we trust its explanations for complex reasoning tasks? The model is not just confabulating facts about the external world—it is also confabulating explanations about its own internal processes.

An analogy jumps to mind with dual-process theories in human psychology, where System 1 operates automatically and intuitively while System 2 provides post-hoc rational explanations. But there's a crucial difference: in humans, System 2 at least has access to some of System 1's outputs; we don’t fully know how we make decisions but through reflection, we can at least partially understand our own reasoning. In current LLMs, the explanation system appears to be essentially confabulating based on the final output and learned patterns about how reasoning “should” work, with no access to the actual computational pathway.

This explains why AI models can produce correct answers through completely non-intuitive methods while providing textbook-sounding explanations that have nothing to do with how they actually solve the problem. The models are not trying to deceive us, but the circuits responsible for generating explanations are fundamentally disconnected from the circuits that produce the actual answers. LLMs don’t have a good grasp on metacognition yet.

Conclusion: Towards More Reliable AI Systems

AI hallucinations are an interesting case, with a story that proves to be both more complex and more hopeful than we might have expected originally. We have seen how a seemingly intractable problem—LLMs confidently presenting false information—has evolved into a window into the inner workings of artificial intelligence. With these recent results, we can (at least, to an extent) break open the black box of neural networks and observe specific computational mechanisms that lead to hallucinations.

We have also seen that LLMs suffer from a disconnect between their reasoning and explanation capabilities. Claude solves arithmetic problems via complex lookup tables and fuzzy approximations, but then explains its process using standard algorithmic steps it never actually performed—and this is likely the same mechanism that makes LLMs confidently reason backwards from a hallucinated answer.

These discoveries open up several promising directions for building more reliable AI systems. Most important, in my opinion, is that they may open the door to a better calibration of LLM answers. Calibration is the property of a classifier not only to output largely correct classes but also to estimate their probabilities correctly, i.e., to report a confidence that matches how often it is actually right. Imagine an LLM that could tell you, “I'm 95% confident about this historical fact, but only 30% confident about this technical detail, and I should probably look that up” (sure enough, they can already tell you something like that now, but in the dream scenario they would be right!).
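One standard way to quantify calibration is the expected calibration error (ECE): group answers by stated confidence and compare the average confidence in each group with the share of answers that were actually correct. The sketch below is a minimal version with equal-width bins; the function name and the two toy datasets are made up for the illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Gap between stated confidence and observed accuracy, weighted by bin size."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(ok for _, ok in items) / len(items)
        ece += len(items) / total * abs(avg_conf - accuracy)
    return ece

# A model that says "90% sure" ten times and is right nine times is well calibrated ...
ece_calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
# ... while one that says "90% sure" and is right only half the time is overconfident.
ece_overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
```

The first value is essentially zero and the second is about 0.4; in the dream scenario above, an LLM's self-reported confidence would score close to zero on this kind of metric.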

If we can identify and potentially control the circuits responsible for hallucinations, we can try to make more targeted interventions. Rather than patching hallucinations by RAG-based self-verification across all queries, we might develop systems that can dynamically assess their own confidence levels and adjust their behavior accordingly. Moreover, perhaps we can design training procedures that specifically target the discovered failure modes, improving the circuits that correspond to “epistemic humility”. And metacognition is another area that is only starting to appear in current frontier research: can we make an LLM analyze its own thinking and infer its own limitations?

There are still limitations, of course. The new interpretability results are still preliminary and may not generalize to other models and architectures. Circuits found in Claude 3.5 Haiku may work very differently in other LLMs. We are still very far from a complete theory of how these systems work, let alone how to control their behaviour. But still, results like this give me hope that hallucinations (and other undesirable behaviours) can be understood—and then overcome.
