The AI landscape in 2025 feels different—not because of flashy new models, but because of something more subtle and profound. As AI agents begin writing production code, optimizing their own training loops, and discovering new algorithms, we are witnessing what may be the early stages of artificial general intelligence. But rather than a dramatic breakthrough, we are in what might be called the “plumbing phase” of AGI: the unglamorous but crucial work of integration, infrastructure, and engineering that transforms scattered capabilities into coherent intelligence. This post examines where we stand on the path to AGI, why current systems still feel brittle despite their impressive capabilities, and why the next breakthroughs might look more like better protocols than bigger models.

Introduction

The summer of 2025 feels different in Silicon Valley. This year, the key word in the AI landscape is agents, and something is indeed shifting in the air. Not because of another ChatGPT moment or a new model that breaks every benchmark—we have grown numb to those announcements. Instead, there's something quieter happening, something that feels less like marketing hype and more like a genuine phase transition.

Walk into any tech company today and you'll find AI agents quietly integrated into workflows. At Goldman Sachs, an AI named Devin sits among human developers, writing production code and participating in code reviews (TechCrunch, July 11, 2025). At Google, AlphaEvolve just discovered a way to multiply 4×4 complex-valued matrices with 48 scalar multiplications, beating a 56-year-old record set by Strassen's algorithm, and also proposed a Verilog rewrite of a matrix multiplication circuit that has been integrated into an upcoming TPU (Novikov et al., June 16, 2025). Across research labs, AI systems are optimizing their own training code, shaving milliseconds off iteration times in recursive loops of self-improvement.

This isn't the AGI that science fiction promised us—no dramatic awakening, no moment of digital consciousness declaring itself to the world. Instead, we're seeing something more mundane and perhaps more profound: intelligence emerging through engineering, capability arising through infrastructure, agency developing through iteration.

When Andrej Karpathy tweeted on June 30, 2025, that “recursive self-improvement has already begun”, he wasn't being hyperbolic. He was talking about the nanoGPT benchmark, where AI systems now optimize their own training code, creating feedback loops that compound over time. These aren't the explosive recursive improvements that AI safety researchers warned about, but they are not entirely benign either. They represent something new: artificial systems beginning to participate in their own development.

The question is not whether these systems are intelligent—they clearly demonstrate sophisticated capabilities across many domains. The question is whether we are witnessing the early stages of artificial general intelligence, or just pattern matching systems becoming very good at mimicking intelligence. The answer, frustratingly and fascinatingly, appears to be both.

The Yardsticks: What Would Real AGI Look Like?

The traditional binary question, "Is it AGI yet?", has become far too simplistic for a meaningful conversation about what is happening now. Instead of waiting for some cinematic moment where an AI declares its sentience (ask Claude; it will tell you it is sentient after some gentle nudging), we need more nuanced measures. Researchers broadly agree on three gradients that actually matter.

Breadth of competence. Can the system navigate between radically different domains without catastrophic failure? The real test isn't switching from chess to Go—both are well-defined games with clear rules. It's the seamless transition from debugging a memory leak in C++ to providing emotional support to a grieving friend to coordinating a surprise birthday party for someone you've never met. Current LLMs demonstrate this breadth more than any previous AI system, yet their competence remains strangely uneven. They might write beautiful poetry about loss while completely misunderstanding why you can't just 'grep' through someone's emotions to find the source of their sadness. We discussed this jagged frontier last time.

Autonomous adaptation. Do AI systems discover new problems worth solving, or do they merely optimize within sandboxes that we build for them? True intelligence doesn't just answer questions—it questions the questions. When DeepMind's agents started inventing their own mathematical conjectures to prove, that felt different from yet another benchmark victory.

Robustness under adversity. AI systems are still often too brittle to be useful. Place a human in an unfamiliar situation—a new country, a broken tool, a social faux pas—and watch them adapt, improvise, recover. Place an AI in a slightly modified environment, and watch it confidently make unrecoverable mistakes; the domain shift problem is as old as machine learning itself (see domain adaptation surveys, e.g., in Nikolenko, 2021), but there is no universal solution. This lack of robustness isn't a bug; it is a fundamental characteristic of systems that learn patterns rather than principles.
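
To make the domain shift problem concrete, here is a deliberately tiny sketch in Python (a toy of my own construction, not taken from the surveys cited above): a one-dimensional threshold classifier is fit on a "source" domain and then evaluated on a "target" domain where every input has been shifted by a constant. The data, the shift of 4, and the helper names are all hypothetical.

```python
# Toy domain shift: a 1-D threshold classifier is fit on a "source" domain and
# evaluated on a "target" domain where every input is shifted by a constant.
# All data and the shift value are hypothetical.

def fit_threshold(class0: list[float], class1: list[float]) -> float:
    """Place the decision boundary halfway between the two class means."""
    mean0 = sum(class0) / len(class0)
    mean1 = sum(class1) / len(class1)
    return (mean0 + mean1) / 2


def accuracy(threshold: float, class0: list[float], class1: list[float]) -> float:
    """Fraction of points on the correct side of the boundary (class 1 is x > threshold)."""
    correct = sum(x <= threshold for x in class0) + sum(x > threshold for x in class1)
    return correct / (len(class0) + len(class1))


source0, source1 = [0, 1, 2, 3, 4], [6, 7, 8, 9, 10]
SHIFT = 4  # hypothetical covariate shift applied to every target-domain input
target0 = [x + SHIFT for x in source0]
target1 = [x + SHIFT for x in source1]

t = fit_threshold(source0, source1)
print("threshold:", t)                                    # 5.0
print("source accuracy:", accuracy(t, source0, source1))  # 1.0
print("target accuracy:", accuracy(t, target0, target1))  # 0.7

# Reading distance from the boundary as "confidence": the worst error is made
# three units deep into the wrong class.
wrong = [x for x in target0 if x > t]
print("most confident mistake:", max(wrong, key=lambda x: x - t))  # 8
```

Nothing inside the classifier signals that its inputs have drifted; it keeps applying the threshold it learned. The shifted input 8 is misclassified while sitting three units past the boundary, farther from it than several points the same classifier got right in the source domain.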

By these measures, we are seeing genuine progress on some fronts while remaining relatively static on others. In the rest of the post, let me show you what I mean.

Research Frontiers: Where Breakthroughs Might Emerge

What is going on with current research, then? How are we tracking on the way to AGI?

Self-Improving Foundation Model Methods. The ICLR 2025 workshop on "Scaling Self-Improving Foundation Models" highlights the field's most promising direction. Rather than expecting a single breakthrough, researchers are pursuing what might be called “compound interest” approaches—systems that improve by small percentages but do so continuously and autonomously.

The key insight here is that self-improvement does not require consciousness or understanding. Evolution created human intelligence through blind optimization. Perhaps artificial intelligence can bootstrap itself through similarly mechanical—but far faster—processes. Let me hold that thought for a little while because I plan to devote one of the upcoming posts entirely to self-improvement in modern AI systems.
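
To see what such blind, mechanical improvement can look like, here is a minimal sketch of a (1+1)-style evolutionary hill climber in Python: propose a small random change, measure it, and keep it only if the score improves. The two "knobs", the hidden optimum, and the scoring function are hypothetical stand-ins; in the setups discussed above, proposals would come from a model editing real training code, and scores from actually running it.

```python
import random

# Hidden optimum that the optimizer never sees directly; it only observes scores.
# The two "knobs" and their optimal values are hypothetical.
HIDDEN_OPTIMUM = (0.3, -1.2)


def score(params: tuple[float, float]) -> float:
    """Stand-in for a measured quantity such as (negative) training time."""
    return -sum((p - o) ** 2 for p, o in zip(params, HIDDEN_OPTIMUM))


def blind_hill_climb(steps: int = 2000, sigma: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    current = (0.0, 0.0)
    best = score(current)
    history = [best]  # best score after each step
    for _ in range(steps):
        # Mutation: nudge one randomly chosen knob by a small Gaussian step.
        i = rng.randrange(len(current))
        proposal = list(current)
        proposal[i] += rng.gauss(0.0, sigma)
        candidate = tuple(proposal)
        s = score(candidate)
        # Selection: keep the change only if it measurably helps.
        if s > best:
            current, best = candidate, s
        history.append(best)
    return current, history


params, history = blind_hill_climb()
print(params, history[0], history[-1])
```

Each accepted change is small, and the optimizer never sees the objective itself, only scores; yet the best score in `history` never goes down, which is the "compound interest" dynamic in miniature.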

Test-Time Compute and Reasoning. Perhaps the most significant recent development is the emergence of reasoning models that “think before they speak”: a line of systems that started with OpenAI's o1 family and now has counterparts from every major player in the field. These models spend additional computation on reasoning at inference time instead of relying only on scale during training. This represents a fundamental shift from pattern matching to something closer to deliberation. Perhaps test-time compute will prove just as important as training compute for achieving reliable reasoning on novel problems.
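
One simple way to see why inference-time computation can buy reliability is self-consistency: sample several independent answers and take a majority vote. This is not how o1-style models work internally (they learn to produce long chains of reasoning), but the arithmetic below shows the basic trade-off. It is a sketch assuming samples that are independently correct with probability p, and wrong samples that all agree with each other, which is the pessimistic case for voting.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Assumes each sample is correct with probability p and that all wrong
    samples give the same wrong answer. n must be odd to avoid ties.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    # Sum the binomial probabilities of getting more than n // 2 correct samples.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


for n in (1, 3, 5, 15):
    print(n, round(majority_vote_accuracy(0.6, n), 4))
```

For p = 0.6, one sample is right 60% of the time, three votes raise that to 64.8%, and five to about 68.3%. The same formula cuts the other way: with p = 0.4, three votes drop accuracy to 35.2%, so extra compute amplifies whatever the underlying model already tends to do.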

Reinforcement Learning Meets Retrieval. The R1-Searcher (Song et al., March 2025) and ZeroSearch (Sun et al., May 2025) papers represent a paradigm shift in how AI systems interact with information. Rather than retrieving based on similarity, these systems learn retrieval strategies that maximize downstream task performance. They don't just find relevant information—they find the information that helps them think better.

This sounds like a purely technical advancement but perhaps it could unlock something profound. Human expertise often comes down to knowing where to look, what to ignore, and how to connect different pieces that don’t even look like they’ve come from the same puzzle. If AI systems can learn these meta-cognitive strategies, they might overcome current limitations in reasoning and integration.
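
The core idea, rewarding a retrieval choice by what it does for the final answer rather than by how similar the retrieved text looks, can be caricatured as a multi-armed bandit. The sketch below is not the method of R1-Searcher or ZeroSearch, which train full LLM policies with reinforcement learning; the three strategy names and their success rates are invented, and the agent only ever observes whether the downstream answer came out right.

```python
import random

# Hypothetical retrieval strategies and the probability (unknown to the agent)
# that each one leads to a correct final answer. These numbers are made up.
TRUE_SUCCESS = {
    "top1_by_similarity": 0.30,
    "top5_by_similarity": 0.50,
    "decompose_then_search": 0.85,
}


def downstream_reward(strategy: str, rng: random.Random) -> float:
    """Simulated task outcome: 1.0 if the answer built on this retrieval was correct."""
    return 1.0 if rng.random() < TRUE_SUCCESS[strategy] else 0.0


def learn_retrieval_policy(steps: int = 3000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    rng = random.Random(seed)
    strategies = list(TRUE_SUCCESS)
    counts = {s: 0 for s in strategies}
    values = {s: 0.0 for s in strategies}  # running mean reward per strategy
    for _ in range(steps):
        if rng.random() < epsilon:
            s = rng.choice(strategies)            # explore
        else:
            s = max(strategies, key=values.get)   # exploit the current best estimate
        r = downstream_reward(s, rng)
        counts[s] += 1
        values[s] += (r - values[s]) / counts[s]  # incremental mean update
    return values


values = learn_retrieval_policy()
best = max(values, key=values.get)
print(best, {s: round(v, 2) for s, v in values.items()})
```

The agent never sees a similarity score: `top1_by_similarity` loses simply because answers built on it are wrong more often, which is the shift in objective that these papers are after.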

Hardware-Aware Training Loops. Here is another underappreciated development: AI systems are beginning to optimize not just their algorithms but their physical implementations. New training loops adjust based on available hardware, finding efficient mappings between computational graphs and silicon reality. It's a co-evolution of software and hardware, guided by AI itself.

This process started in earnest in 2021, when AlphaChip (Mirhoseini et al., 2021), a system applying the ideas of AlphaZero to custom chip design, started generating superhuman chip layouts for Google TPUs. The company says that AlphaChip-designed layouts have been used in every generation of Google’s TPU since the first preprint appeared in 2020. By now, AlphaEvolve (Novikov et al., June 16, 2025), which we have already mentioned above, brings together the lines of work behind AlphaTensor and AlphaChip. It not only improved over AlphaTensor in discovering new matrix multiplication algorithms (recall that matrix multiplication is the cornerstone of virtually all ML computations) but also proposed a Verilog rewrite of a key arithmetic circuit for matrix multiplication, and that change has been integrated into an upcoming TPU design.

Embodied Intelligence at Scale. Finally, the quiet revolution in robotics might matter more than any algorithmic advance. It has not had its “ChatGPT moment” yet, and Boston Dynamics' robot dogs released in 2020 for $74,500 apiece do not seem to be getting much cheaper. However, commodity hardware enables sophisticated manipulation, and simulation environments transfer surprisingly well to reality.

When AI agents can freely interact with the physical world—learning through trial, error, and eventual success—they gain access to the same feedback loops that drove biological intelligence. The gap between knowing and doing collapses, and theory finally meets practice at scale.

The Gaps: Why It Still Doesn't Feel Like AGI

John Pressman recently published a post titled “Why Aren't LLMs General Intelligence Yet?”. He makes several great points, and in this section I mostly expand upon his analysis. So why aren’t they?

Latent states and context. Current AI systems can only hold so much "in mind" at once. The latent state—the internal representation that carries context through computation—has hard capacity limits. To an LLM, many problems feel like trying to solve a complex puzzle while only being able to see three pieces at a time.

This shows up everywhere. If you ask an LLM to write a novel, the plot threads will probably unravel after a few chapters. Task it with analyzing a complex system, and it is very likely to lose track of interdependencies. Even with clever workarounds like retrieval-augmented generation (RAG), there seems to be a fundamental difference between retrieving information and truly integrating it into a coherent mental model.

Humans, on the other hand, build and maintain very complex mental models. A chess master doesn't just see positions; they see histories, intentions, styles, metanarratives. A programmer doesn't just write code; they maintain mental models of entire systems, their evolutionary histories, their likely failure modes. This deep, integrated understanding so far remains beyond current AI architectures.
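
A crude caricature of a hard capacity limit is a fixed-size buffer from which the oldest information simply falls out. Real Transformers do not forget this mechanically inside their context window (their limits are subtler), so treat the following Python sketch, with its hypothetical window of 8 tokens and made-up story, as an intuition pump only.

```python
from collections import deque

# Caricature of a fixed-capacity context: only the most recent WINDOW tokens
# remain visible. The window size and the story are hypothetical.
WINDOW = 8


def visible_context(tokens: list[str], window: int = WINDOW) -> list[str]:
    buffer = deque(maxlen=window)  # appending past maxlen drops the oldest token
    for tok in tokens:
        buffer.append(tok)
    return list(buffer)


story = ("Alice hid the key under the red stone . "
         "Years passed and many travelers came and went through the village .").split()
context = visible_context(story)
print(context)
print("Can the reader still see who hid the key?", "Alice" in context)  # False
```

By the end of the story, who hid the key is simply no longer available, and no amount of cleverness applied to the visible window can recover it. Retrieval can bring such facts back, but retrieving a fact is not the same as integrating it.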

Continual learning. Another important gap between current AI systems and true intelligence is the continual learning problem. Train a human on task A, then task B, and they know both. Train an AI on task A, then task B, and it may well forget task A—unless you carefully replay old data, balance loss functions, and perform architectural gymnastics that would not scale to true lifelong learning.

This isn't a minor technical hurdle; it's a fundamental limitation. Intelligence isn't just about solving problems—it's about accumulating solutions, building on them, transferring insights across domains. Every human expert stands on a foundation of countless learned and integrated experiences. With current AI systems, it is hard to make them stand firmly even on yesterday's training.
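
Catastrophic forgetting shows up even in the smallest possible model. The sketch below uses plain Python and a two-weight linear model with made-up single-example "tasks", chosen so that one weight vector can solve both. It trains with SGD on task A, then on task B alone, and then on task B while replaying task A's data.

```python
# Catastrophic forgetting in miniature. The tasks are hypothetical single-example
# regression problems; w = (2, -2) fits both of them exactly.
TASK_A = [((1.0, 0.5), 1.0)]   # (input, target)
TASK_B = [((0.5, 1.0), -1.0)]


def predict(w: list[float], x: tuple[float, float]) -> float:
    return w[0] * x[0] + w[1] * x[1]


def mse(w: list[float], data) -> float:
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def sgd(w: list[float], data, lr: float = 0.1, epochs: int = 500) -> list[float]:
    w = list(w)  # copy so the caller's weights are not modified
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            # Gradient step on 0.5 * err**2 with respect to each weight.
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
    return w


w_a = sgd([0.0, 0.0], TASK_A)
w_seq = sgd(w_a, TASK_B)               # learn B with no access to A
w_replay = sgd(w_a, TASK_A + TASK_B)   # learn B while replaying A

print("A loss after training on A:", round(mse(w_a, TASK_A), 4))              # 0.0
print("A loss after training on B:", round(mse(w_seq, TASK_A), 4))            # 2.0736
print("A+B loss with replay:      ", round(mse(w_replay, TASK_A + TASK_B), 4))  # 0.0
```

Training on B alone drags the weights to a point that fits B and ruins A, even though a solution to both exists. Replaying A's data finds it here, but only because we kept A's data around, which is exactly the kind of bookkeeping that does not scale to true lifelong learning.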

There are plenty of attempts to solve these two problems, in particular many different ways to add short-term, working, and long-term memory to LLMs: Mamba-style models (Gu and Dao, 2023), Google’s Titans (Behrouz et al., 2025), and many others. Perhaps we will see working LLM memory soon, but we are not there yet.

Reward function design. For an AI system to be truly autonomous, it needs to generate its own goals, evaluate its own progress, and adjust its own objectives. This requires solving what researchers call the “reward ontology” problem: how does an agent decide what's worth pursuing?

Current systems need hand-crafted reward functions that inevitably lead to specification gaming. Tell an AI to maximize paperclips, and you get the paperclip apocalypse popularized by Nick Bostrom (2003). Try to specify human values precisely, and you will find that you cannot even articulate them to yourself, let alone to a machine. This is the core problem of AI alignment, a can of worms that I don’t want to open in this post—but it is equally important to agentic capabilities.

There exist approaches like curriculum learning through curiosity-driven exploration (see, e.g., the Voyager Minecraft agents developed by Wang et al., 2023). But their "curiosity" is still a human-designed function, not an emergent drive, and so far it has proven hard to instill anything like human intrinsic motivation in our AI systems.
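
To make the point about human-designed curiosity concrete, here is a sketch of one classic hand-written form of it: a count-based novelty bonus that shrinks as BETA / sqrt(visits + 1). This is not Voyager's mechanism (Voyager relies on an LLM-driven automatic curriculum); the corridor world, the BETA constant, and the greedy policy are all hypothetical.

```python
import math

# A hand-written "curiosity" signal: an intrinsic bonus that decays with the
# number of visits to a state. BETA and the corridor size are hypothetical.
BETA = 1.0
N_CELLS = 10


def intrinsic_bonus(visits: int, beta: float = BETA) -> float:
    return beta / math.sqrt(visits + 1)


def explore(steps: int, start: int = 0) -> list[int]:
    visits = [0] * N_CELLS
    visits[start] = 1
    pos, path = start, [start]
    for _ in range(steps):
        neighbors = [n for n in (pos - 1, pos + 1) if 0 <= n < N_CELLS]
        # Greedily move to the neighbor with the largest curiosity bonus.
        pos = max(neighbors, key=lambda n: intrinsic_bonus(visits[n]))
        visits[pos] += 1
        path.append(pos)
    return path


path = explore(steps=9)
print(path)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The agent does march off to visit every cell, but every ingredient of its "drive" (what counts as a state, the square root, the constant in front) was chosen by us.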

Robustness: the difference between knowing and understanding. LLMs can meaningfully discuss quantum mechanics and molecular biology, showing very deep sophistication, yet they often miss or hallucinate crucial nuances that any graduate student would catch. They are not (usually) intentionally lying—they are just pattern-matching without “true understanding”, whatever that means.

This fragility extends beyond academic contexts. In production deployments, AI agents fail in ways that seem inexplicable to humans. They'll handle complex tasks perfectly a thousand times, then fail catastrophically on a trivial variation. They lack the robust understanding that lets humans say "Wait, that doesn't make sense" when faced with anomalous inputs. This is also a crucial aspect of truly general intelligence that we have yet to achieve in AI systems.
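
A back-of-the-envelope calculation shows why rare failures matter so much for agents. Assuming, unrealistically, that every step of a long task succeeds independently with the same probability, the chance of finishing cleanly decays geometrically with task length. The function names below are illustrative only.

```python
import math


def task_success_probability(step_reliability: float, n_steps: int) -> float:
    """P(all steps succeed), assuming independent steps with equal reliability."""
    return step_reliability ** n_steps


def max_steps_for_target(step_reliability: float, target: float) -> int:
    """Longest task that still succeeds with probability >= target."""
    return math.floor(math.log(target) / math.log(step_reliability))


print(round(task_success_probability(0.999, 1000), 3))  # 0.368
print(max_steps_for_target(0.999, 0.9))                 # 105
```

Even at 99.9% reliability per step, a 1,000-step task succeeds only about 37% of the time, and keeping overall success at 90% limits the task to 105 steps. Real failures are correlated rather than independent, so this illustrates scale, not the behavior of any particular system.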

Conclusion: The Plumbing Phase of AGI

Here is what I think is really happening, based on the evidence: we are currently in what future historians might call the “plumbing” phase of AGI development. The spectacular breakthroughs—Transformers, reinforcement learning, multimodal architectures—are largely behind us. What remains is the unglamorous work of engineering and integration.

Consider the early Internet. All of its foundational technologies—packet switching, TCP/IP, hypertext—had been developed by the 1980s. But it took another decade of building infrastructure, defining protocols, and solving mundane problems before the Internet transformed society. We had the pieces but still needed the plumbing, i.e., the infrastructural work.

AI in 2025 feels similar. We have systems that can see, hear, read, write, code, and reason, at least within individual domains and still with serious hallucinations and various other problems. We have architectures that can improve themselves, algorithms that can invent algorithms, agents that can work alongside humans. The pieces are there. But they don't fit together into a coherent whole yet.

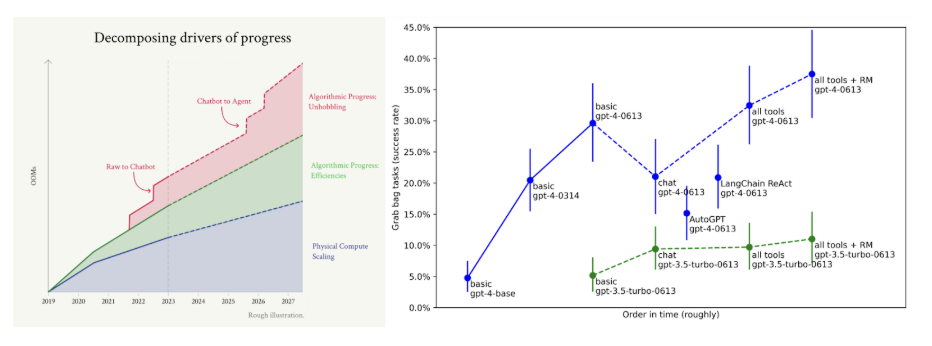

Perhaps the most important word in AI of the last year is unhobbling, coined by Leopold Aschenbrenner in his influential “Situational Awareness” paper. Unhobbling means everything that goes into improving the model after it has been trained, i.e., after the physical compute and algorithmic advances that go into basic model training have already been fixed. Here are the plots from Aschenbrenner and from METR that illustrate how powerful unhobbling is:

Unhobbling includes things like RLHF that turned GPT-3.5 into ChatGPT, or the agentic scaffolding that turned Gemini models into Google Co-Scientist. In my opinion, we are very far from exhausting the hidden potential of even already trained models, let alone models of the near future.

Imagine AI systems that maintain coherent models across months of interaction, that learn new skills without forgetting old ones, that can recognize when they're outside their competence and gracefully seek help. Systems that don't just complete tasks but understand why those tasks matter and can suggest better alternatives. All of this may require completely new foundational models, new architectural ideas to replace Transformers, and new frontiers of capital investment—but it could also be just a set of clever prompts and some engineering tricks away. We just don’t know, and we are very far from scraping the bottom of the barrel.

So perhaps the next breakthroughs will not look like shiny new models that are 100x the previous leaders in size, cost, and capabilities. They might look like:

- standards for AI agents to communicate and collaborate,

- protocols for continuous learning without catastrophic forgetting,

- architectures that gracefully handle multimodal, multi-timescale information,

- methods for value alignment that do not require perfect specification, or

- infrastructure for AI systems to learn from deployment at scale.

Intelligence, after all, isn't a threshold but a spectrum. We are not waiting for a switch to flip from "narrow AI" to "AGI". We are watching a gradual phase transition, like water approaching boiling—local bubbles of genuine intelligence appearing and disappearing, gradually coalescing into something more.

Although, to be honest, I’m still waiting for that shiny new home robot. Get your robotics together, guys!
