What if AI systems could plan the future not by generating pixels or manipulating opaque vectors, but by simply writing down what will happen? Meta FAIR's new Vision Language World Models (VLWM) do exactly that: transform planning from a black-box process into readable, editable text in plain English. VLWM predicts state changes in language: "the pan is hot; eggs are setting around edges; ready for seasoning", and can achieve state-of-the-art performance while remaining interpretable and correctable. In this post, we explore how VLWM works, why language-based world models might be the key to trustworthy AI planning, and what this means for the future of human-AI collaboration in robotics and beyond.

Introduction: Planning by Talking

Imagine you're teaching someone to cook over the phone. You can't see what they're doing, and they can't show you the kitchen. So what do you do? You build a shared mental model through words: "First, heat the pan until a drop of water sizzles. Now crack the eggs; you should hear them start cooking immediately." Each instruction comes with an expected outcome, a little prediction about how the world will change.

This is exactly the insight behind Vision Language World Models (VLWM), a new approach from Meta FAIR that changes how AI systems plan and reason about the future. Instead of trying to simulate every pixel of what tomorrow might look like (computationally prohibitive) or planning in some abstract mathematical space that humans cannot interpret (practically opaque), VLWM does something simple but, as it turns out, quite effective: it writes down what will happen, step by step, in plain English.

The implications, however, can be quite far-reaching. Current AI systems are already very good at two separate tasks: they can describe what they see ("there is a half-assembled bookshelf with pieces scattered on the floor"), and they can list plausible next steps ("attach the side panels to the base"). But there is a crucial gap between seeing and doing, because standard vision-language models (VLMs) don't truly understand how actions change the world. They cannot reliably answer a question like: "If I attach panel A before panel B, will I block access to the screws I need later?"

This is the difference between pattern matching and genuine planning. Planning requires a world model—an understanding of cause and effect, of how actions transform states, of what becomes possible or impossible after each step. Humans do this constantly; we run mental simulations for every minute action. Before reaching for a coffee cup, your brain has already predicted the weight, the temperature, how your fingers will grip it, what happens if you miss. We don't just predict actions; we predict their consequences.

Traditional approaches to giving AI this capability have followed two divergent paths, both with fundamental limitations. On one extreme, we have massive models like OpenAI's Sora or NVIDIA's Cosmos that can generate pixel-perfect videos of possible futures. They are accurate but immensely expensive, and they can only look a few seconds ahead. Moreover, for actual planning, fine visual details matter far less than semantic understanding.

On the other side, we have models that plan in abstract latent spaces, sequences of vectors that supposedly represent future world states. These can be efficient and look further ahead, but they are black boxes. When a model says "vector [0.73, -1.2, ...] leads to vector [0.81, -0.9, ...]", nobody (neither human nor a different model, really) can verify if that's sensible, correct errors, or add domain knowledge. It is true planning, but planning in a language no one speaks.

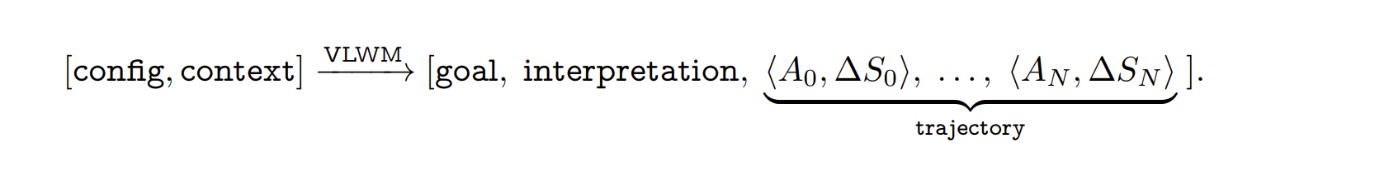

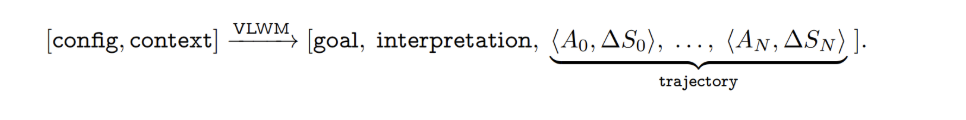

A new paper by Meta FAIR (Chen et al., September 2025) proposes a middle ground with a simple but far-reaching twist: predict the future in language, not pixels or opaque vectors. The authors call it a Vision‑Language World Model (VLWM). Given a short video context, VLWM writes down:

- first the goal and a goal interpretation (explicit initial and desired final states),

- then an interleaved sequence of actions and world‑state changes.

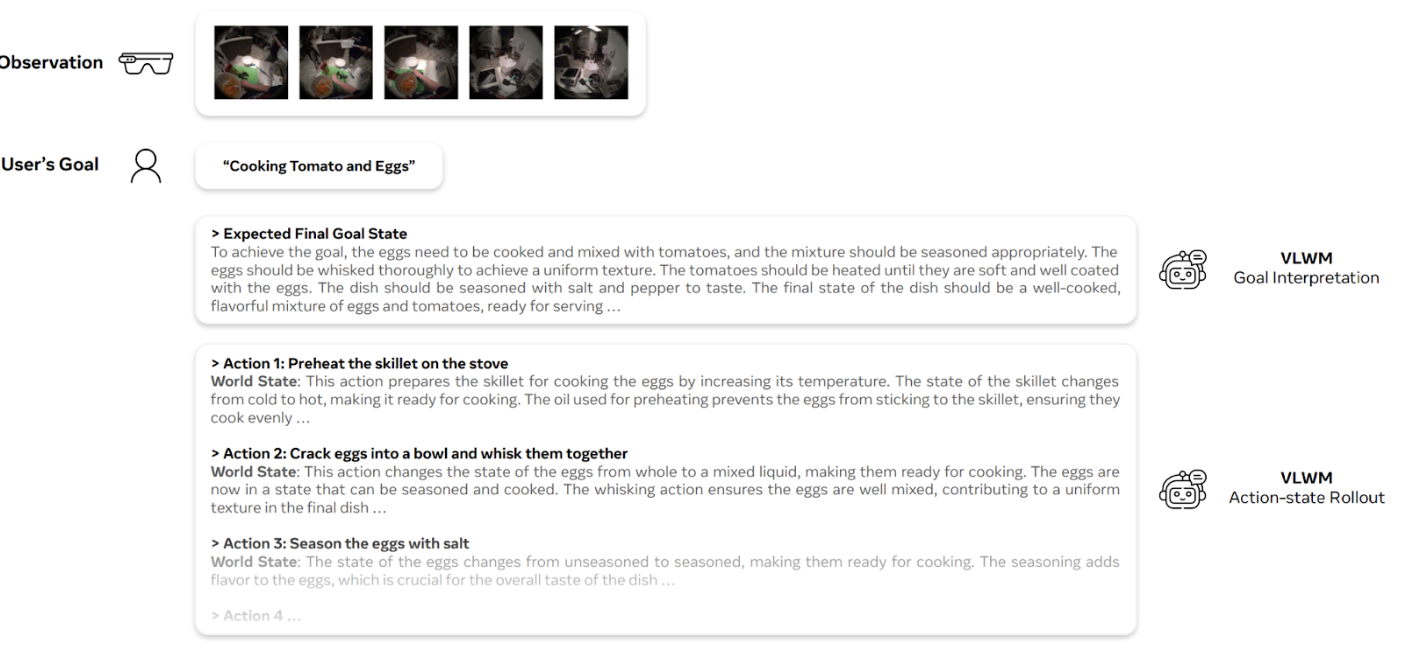

As a result, it produces action-state trajectories and plans for the future expressed in plain English:

Afterwards, it can search over alternative futures with a learned critic to pick the lowest‑cost plan. How exactly does this pipeline work? What makes this approach different from existing research? Let’s find out.

State of the Art: From Seeing to Doing

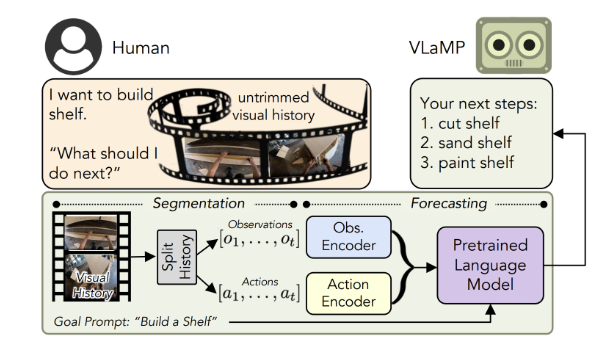

The general problem setting that we are talking about is known as VPA: visual planning for assistance. It tackles a problem we face daily: given what we've seen so far, what should we do next to achieve our goal? Specifically, we show a model what has happened so far in a task video and ask it to predict the next few high‑level steps to reach a goal (e.g., “build shelf”). Early systems treated this as sequence modeling over action labels with a pretrained language model in the loop (Visual Language Model based Planner, VLaMP). Here is an illustration from an earlier work from Meta, by Patel et al. (2023):

Systems of this kind perform planning with a language model, but they are not trying to construct a world model. There is no explicit notion of how actions will potentially change the world, only a language model trained to produce plans.

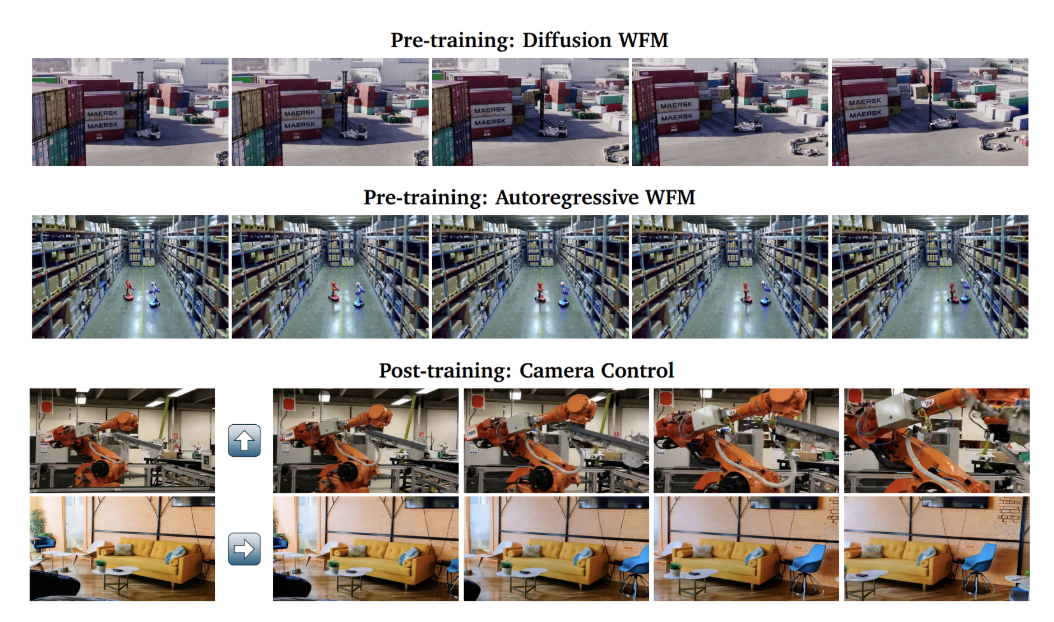

On the other end of the spectrum, we have pixel-based generative world models that actually predict the future pixel by pixel. You have probably heard of OpenAI's Sora, which is as much a world model as a video generator. There are also very impressive physics-based world models such as NVIDIA Cosmos, a world foundation model trained on a wide variety of datasets to predict the future down to the pixel level (NVIDIA, 2025):

But while these models can simulate gorgeous futures, they are the wrong tool for long-horizon planning: they are expensive, the problem is inherently ill-posed due to partial observability (you cannot reconstruct a fully accurate 3D world from a 2D picture), and the models are built for a visually faithful simulation that you don't need for action selection. Taken together, these drawbacks mean that such models can usually predict only a few seconds ahead, far short of the horizon you need to build a shelf or cook some eggs.

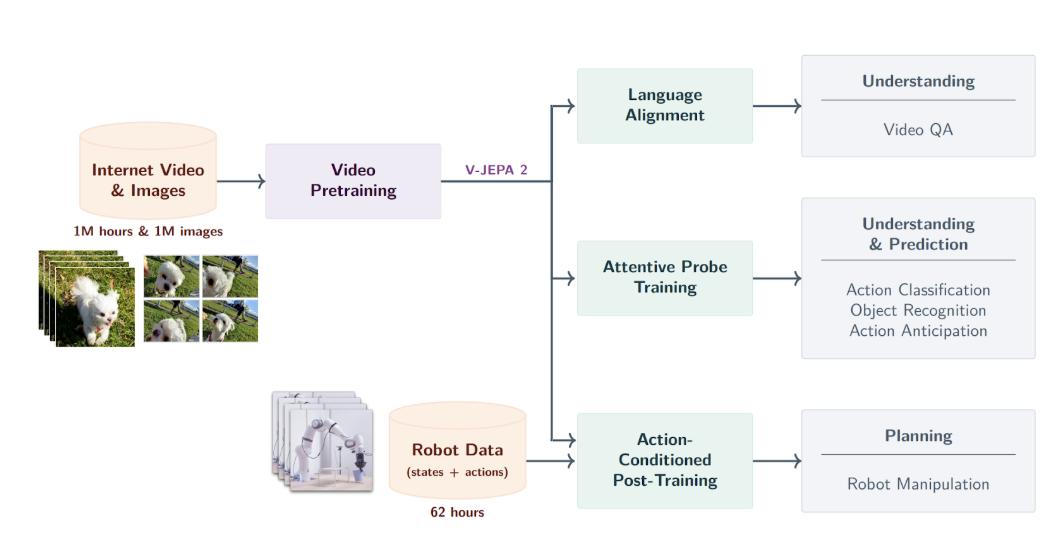

The closest prior art is JEPA-style latent world models, also developed by Meta FAIR (Assran et al., 2025). They predict the future in vector spaces and plan by minimizing a cost to the goal; part of their training involved action-conditioned planning for robotic manipulation:

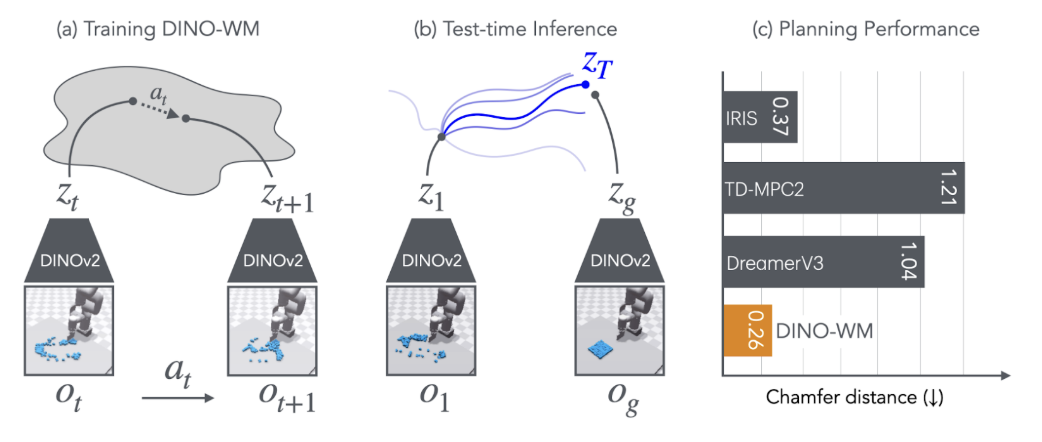

This can be a very efficient approach. One nice recent example in this family was DINO-WM by Courant Institute researchers Zhou et al. (2025); their world model based on DINOv2 embeddings (Oquab et al., 2023) could serve as the basis for successful planning via action sequence optimization:
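To make "planning via action sequence optimization" concrete, here is a toy sketch. It assumes a hand-written 2-D "latent" dynamics function in place of a learned predictor (DINO-WM's real predictor is a neural network over DINOv2 features) and uses the cross-entropy method (CEM), a common sampling-based optimizer for this kind of planning. Both choices are illustrative assumptions, not the paper's implementation.

```python
import random
from typing import List, Tuple

Vec = Tuple[float, float]

def predict(z: Vec, a: Vec) -> Vec:
    # Hypothetical stand-in for a learned latent predictor: the action shifts the state.
    return (z[0] + a[0], z[1] + a[1])

def unflatten(flat: List[float], horizon: int) -> List[Vec]:
    return [(flat[2 * t], flat[2 * t + 1]) for t in range(horizon)]

def rollout_cost(z0: Vec, actions: List[Vec], z_goal: Vec) -> float:
    """Roll the model forward and return the squared distance to the goal embedding."""
    z = z0
    for a in actions:
        z = predict(z, a)
    return (z[0] - z_goal[0]) ** 2 + (z[1] - z_goal[1]) ** 2

def cem_plan(z0: Vec, z_goal: Vec, horizon: int = 3, samples: int = 200,
             elites: int = 20, iters: int = 10, seed: int = 0):
    """Cross-entropy method: sample action sequences, keep the cheapest, refit a diagonal Gaussian."""
    rng = random.Random(seed)
    dim = 2 * horizon
    mu, sigma = [0.0] * dim, [1.0] * dim
    for _ in range(iters):
        cands = [[rng.gauss(mu[i], sigma[i]) for i in range(dim)] for _ in range(samples)]
        cands.sort(key=lambda c: rollout_cost(z0, unflatten(c, horizon), z_goal))
        top = cands[:elites]
        mu = [sum(c[i] for c in top) / elites for i in range(dim)]
        sigma = [max(1e-3, (sum((c[i] - mu[i]) ** 2 for c in top) / elites) ** 0.5)
                 for i in range(dim)]
    plan = unflatten(mu, horizon)
    return plan, rollout_cost(z0, plan, z_goal)

plan, final_cost = cem_plan((0.0, 0.0), (3.0, -1.5))
```

The planner only ever sees vectors and a scalar cost; nothing in `plan` tells a human why those actions were chosen.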

However, these planners are not interpretable and hence not amenable to modifications; they plan in the space of embeddings, and neither humans nor pretrained LLMs can understand what’s happening there and, e.g., correct the proposed plan.

How can you keep the upsides and get rid of the drawbacks?

Core Idea: A World Model That Writes its State in Language

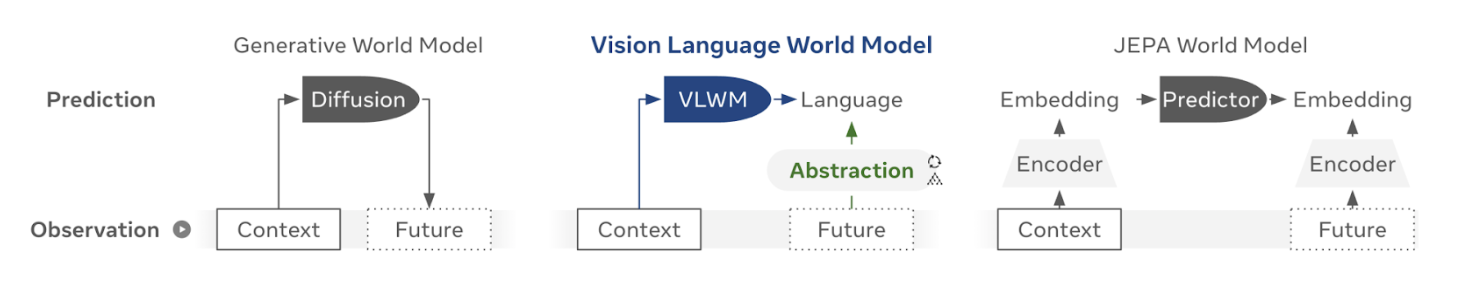

Enter VLWM, a model that aims to keep the upsides of cost-minimizing search while making the state textual, so that plans and state changes are human-readable and easy to manipulate, either manually or with existing LLMs. At a high level, this is the primary difference between VLWM and the approaches we've seen above (here and below, illustrations are from Chen et al., 2025):

The input here consists of a few context frames from a real video and a short goal string, like “cooking tomato and eggs” in the example above. The output has:

- a goal description in natural language,

- a goal interpretation that includes explicit initial and desired final world states,

- and a trajectory of proposed ⟨Action, World‑State Δ⟩ pairs, again in natural language.
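One way to picture this output is as a small record serialized into a single text sequence. The field names and the `Goal:`/`Action:`/`State:` labels below are hypothetical, chosen for readability; the paper's exact template may differ.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class VLWMOutput:
    goal: str                      # goal description
    initial_state: str             # goal interpretation: where we start
    final_state: str               # goal interpretation: what success looks like
    steps: List[Tuple[str, str]] = field(default_factory=list)  # (action, world-state change)

    def to_text(self) -> str:
        """Serialize into one interleaved text stream ending with an end-of-sequence marker."""
        lines = [f"Goal: {self.goal}",
                 f"Initial state: {self.initial_state}",
                 f"Final state: {self.final_state}"]
        for action, delta in self.steps:
            lines.append(f"Action: {action}")
            lines.append(f"State: {delta}")
        return "\n".join(lines) + "\n<eos>"

example = VLWMOutput(
    goal="Cook tomato and eggs",
    initial_state="Raw eggs and whole tomatoes on the counter; stove off.",
    final_state="Scrambled eggs with tomato, seasoned and ready to serve.",
    steps=[("Heat oil in the pan", "The pan is hot; oil shimmers, ready for eggs."),
           ("Pour in the beaten eggs", "Eggs are setting around the edges; ready for tomatoes.")],
)
print(example.to_text())
```

Because actions and states alternate in one stream, next-token training on it teaches both what to do next and what will happen after doing it.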

The state sentences explain why this action matters and what exactly it enables, and they are the “reasoning spine” that makes cost‑based search work.

Mathematically, it’s just next token prediction, and VLWM trains by optimizing the cross-entropy loss for this prediction:
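Written out in our own notation (not necessarily the paper's), this is the standard autoregressive cross-entropy objective, where x is the visual context plus goal string and y_1, ..., y_T are the target tokens covering the goal description, goal interpretation, and interleaved actions and states:

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(y_t \mid y_{<t},\, x\right)
```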

This single autoregressive stream teaches both a policy S → A that maps states to actions and an environment dynamics model ⟨S, A⟩ → S′, both in language; it combines contextual goal inference, next-action proposal, and state-dynamics prediction in one model.

But where does the training signal come from? Uncurated videos don't come with plans, and annotating them manually at this scale is hopeless. Chen et al. (2025) solve this in two steps, each built on an interesting idea.

Tree of Captions (ToC). Segment a video hierarchically by clustering features, then caption each segment to get a document‑like, multi‑scale summary. This idea comes from two works by HKUST and Meta researchers Chen et al. (2024a; 2024b).

The motivation for ToC is a very practical compute triangle: with a fixed budget, you can’t get (i) high spatial resolution, (ii) long temporal horizon, and (iii) a large, smart VLM to read everything at once. VLWM resolves this by first compressing the video input into a semantically faithful text document, and then doing all heavy reasoning in language space.

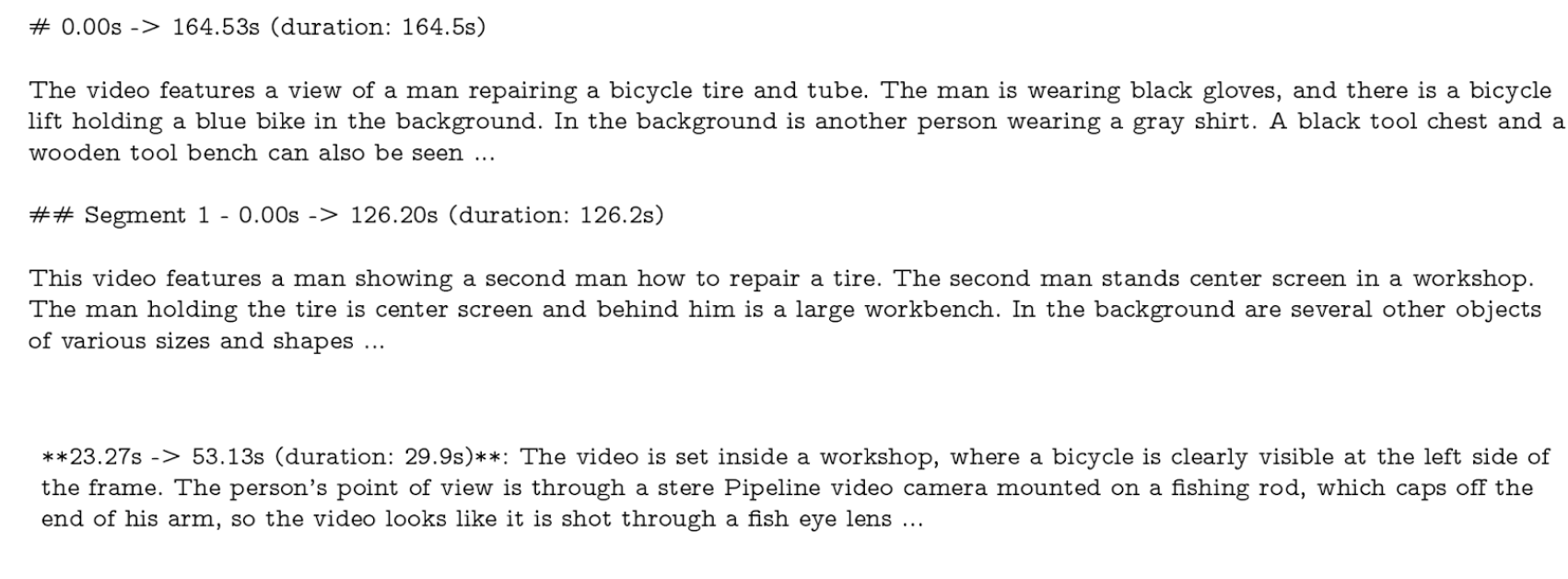

Specifically, Chen et al. (2025) build the tree by hierarchical agglomerative clustering of a per-frame feature stream, merging adjacent segments that barely increase within-segment variance. As a result, segments become monosemantic windows rather than arbitrary fixed-length clips. Captioning each segment then yields a multi-scale “outline” of the video; here is an example worked out in the paper’s appendix:
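The merging rule can be sketched in a few lines. This is a minimal illustration under our own assumptions: features are plain lists of floats, the merge cost is the increase in within-segment sum of squared deviations (a Ward-style criterion restricted to adjacent segments), and the result is a binary merge tree. The paper's actual features, cost, and the way it collapses merges into a few caption levels may differ.

```python
from typing import Dict, List

Frame = List[float]

def sse(feats: List[Frame]) -> float:
    """Sum of squared deviations from the segment mean (within-segment variance times size)."""
    n, d = len(feats), len(feats[0])
    mean = [sum(f[k] for f in feats) / n for k in range(d)]
    return sum((f[k] - mean[k]) ** 2 for f in feats for k in range(d))

def build_tree(frames: List[Frame]) -> Dict:
    """Repeatedly merge the adjacent pair of segments whose union adds the least variance."""
    nodes = [{"span": (i, i + 1), "children": []} for i in range(len(frames))]
    while len(nodes) > 1:
        best_i, best_cost = 0, float("inf")
        for i in range(len(nodes) - 1):
            (s0, e0), (s1, e1) = nodes[i]["span"], nodes[i + 1]["span"]
            cost = sse(frames[s0:e1]) - sse(frames[s0:e0]) - sse(frames[s1:e1])
            if cost < best_cost:
                best_i, best_cost = i, cost
        left, right = nodes[best_i], nodes[best_i + 1]
        parent = {"span": (left["span"][0], right["span"][1]), "children": [left, right]}
        nodes[best_i:best_i + 2] = [parent]   # replace the two children with their parent
    return nodes[0]

# Two visually distinct "scenes" of three frames each (1-D toy features).
frames = [[0.0], [0.1], [0.0], [5.0], [5.1], [4.9]]
root = build_tree(frames)
print([child["span"] for child in root["children"]])  # [(0, 3), (3, 6)]
```

The top-level split falls exactly at the scene boundary, because merging across it adds far more variance than any within-scene merge.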

Here, depth 0 describes the whole clip, and each subsequent level describes shorter segments in increasing detail.
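To feed the tree to an LLM, the paper linearizes it with a depth-first traversal. The sketch below shows the idea; the node fields, timestamp format, indentation scheme, and toy captions are our own assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class CaptionNode:
    start: float                 # segment start, seconds
    end: float                   # segment end, seconds
    caption: str
    children: List["CaptionNode"] = field(default_factory=list)

def linearize(node: CaptionNode, depth: int = 0) -> List[str]:
    """Pre-order DFS: emit a node's caption, then each child's subtree, indented by depth."""
    lines = [f"{'  ' * depth}[{node.start:.0f}-{node.end:.0f}s] {node.caption}"]
    for child in node.children:
        lines.extend(linearize(child, depth + 1))
    return lines

tree = CaptionNode(0.0, 120.0, "A person cooks tomato and eggs.", [
    CaptionNode(0.0, 50.0, "Preparing ingredients.", [
        CaptionNode(10.0, 30.0, "Cracking eggs into a bowl and whisking them."),
    ]),
    CaptionNode(50.0, 120.0, "Stir-frying eggs, then adding tomatoes."),
])
print("\n".join(linearize(tree)))
```

Pre-order traversal keeps each coarse summary immediately before its finer details, so the flattened text reads like a document outline.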

ToC yields a massive compression ratio without losing task semantics: for instance, the Ego4D dataset, 1.1 TB of raw video, gets reduced to under 900 MB of captions while preserving the scaffolding you need to recover goals and plans. This is precisely what enables the next stage—running a large LLM over long horizons relatively cheaply.

LLM Self‑Refine. From the ToC, a strong LLM produces (and iteratively polishes) the VLWM’s four‑part target: goal description, goal interpretation (initial and desired final states), action list, and world‑state paragraphs that explain the consequences of each action.

The targets are produced with the Self-Refine procedure introduced by Madaan et al. (2023), an iterative prompting loop rather than a training method. The loop is deliberately opinionated. The first pass drafts the four components; subsequent passes critique and revise their own output against a prompt that insists on: (a) imperative, high-level actions that meaningfully advance progress (no presentational steps), (b) state paragraphs as an information bottleneck (what changed, why it matters, what it enables next), and (c) a goal interpretation that spells out preconditions and a concrete success state without leaking actions.
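The control flow of such a loop is simple; the prompts carry most of the weight. Below is a minimal sketch assuming a hypothetical `llm` callable (text in, text out) and a hypothetical `NO ISSUES` reply as the stopping signal. The prompt wording paraphrases the three rules above and is not the paper's actual prompt; `fake_llm` is a toy stand-in for demonstration.

```python
from typing import Callable

RULES = (
    "(a) actions are imperative, high-level, and advance the task (no presentational steps); "
    "(b) each state paragraph says what changed, why it matters, and what it enables next; "
    "(c) the goal interpretation gives preconditions and a concrete success state, without actions."
)

def self_refine(llm: Callable[[str], str], toc_document: str, max_rounds: int = 3) -> str:
    """Draft once, then alternate critique and revision until the critique finds nothing to fix."""
    draft = llm("Draft a goal description, goal interpretation, actions, and world states "
                f"from this video description:\n{toc_document}")
    for _ in range(max_rounds):
        feedback = llm(f"Critique the draft against these rules:\n{RULES}\n"
                       f"Reply NO ISSUES if nothing needs fixing.\n\nDraft:\n{draft}")
        if "NO ISSUES" in feedback:
            break
        draft = llm(f"Revise the draft using the feedback.\n\nDraft:\n{draft}\n\nFeedback:\n{feedback}")
    return draft

def fake_llm(prompt: str) -> str:
    # Toy model: the critique is satisfied once the draft reaches version 2.
    if prompt.startswith("Draft"):
        return "plan v0"
    if prompt.startswith("Critique"):
        return "NO ISSUES" if "plan v2" in prompt else "Split the vague steps."
    version = int(prompt.split("plan v")[1][0])   # revision: bump the version number
    return f"plan v{version + 1}"

result = self_refine(fake_llm, "toy caption tree")
```

Capping the number of rounds matters in practice: a critic model rarely declares a draft perfect, so the loop needs a budget as well as a stopping signal.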

Here is a sample critique from the paper:

“Prepare the ingredients for Zucchini Curry.” in the draft could be broken down into more specific actions like “Wash, peel, and chop the zucchini” and “Chop the onions and tomatoes.”

The state change after sautéing the onions, ginger, garlic, and green chilies could include more details about how this step affects the overall flavor and texture of the curry.

The action of “Display the Zucchini Curry in a bowl” is more of a presentational step rather than a meaningful action that advances the task progress, so it should be removed from the steps.

This pipeline scales quite well: VLWM was trained on about 180K videos with 21M caption nodes, leading to 1.1M video‑derived trajectories with 5.7M steps; the authors also converted another 1.1M text‑only reasoning paths from the NaturalReasoning dataset (Yuan et al., 2025) into action‑state trajectories, thus bringing the total number of trajectories to about 2.2M. The paper has critic ablations which show that this structure matters: removing goal interpretations or state paragraphs degrades performance, especially out of domain, so the explicit state text is doing real work.

System-1 and System-2

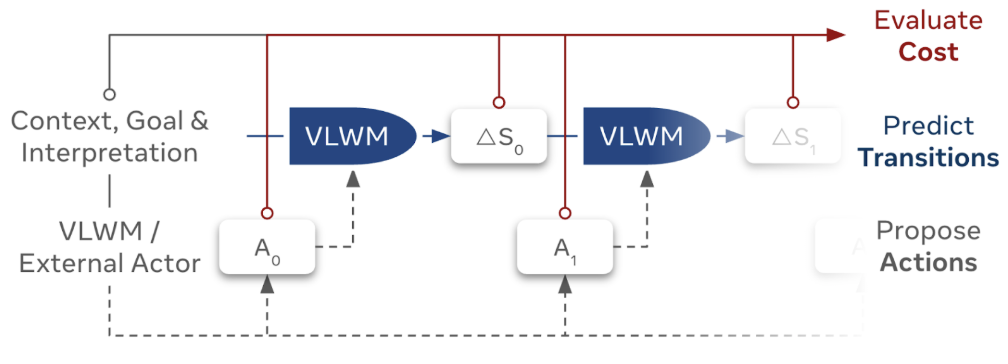

But the story doesn't end with simple text generation. VLWM runs in two gears, which the paper, following the dual-process convention from psychology, calls System-1 (fast, reactive planning) and System-2 (slower, reflective planning).

System‑1, called Reactive Planning in the paper, is basically just text decoding. At test time, given the config, context, and goal description, you can decode the trajectory up to the <eos> token according to the general idea we’ve seen above:

This is kind of like an internal chain of thought for the VLWM; it’s fast and often good enough in‑domain.

To put it in the context of other work, System-1 is the most principled descendant of the VPA/VLaMP family: it is still sequence prediction, but with a learned notion of state change in the loop, not just action names. That alone explains much of the state-of-the-art VPA performance in Table 2 (especially at T=3). But greedy autoregressive generation is also where dataset shortcuts leak through: greedy decoding commits to early choices with no chance to reconsider.
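System-1 is plain greedy decoding. The toy below makes the "commits to early choices" point concrete. A hypothetical lookup table stands in for the VLWM, and each "token" is a whole step, which is a simplification; real decoding works over subword tokens.

```python
from typing import Callable, Dict, List

def greedy_decode(next_probs: Callable[[List[str]], Dict[str, float]],
                  prompt: List[str], max_len: int = 50) -> List[str]:
    """Always take the single most likely continuation; stop at <eos>."""
    out = list(prompt)
    for _ in range(max_len):
        probs = next_probs(out)
        token = max(probs, key=probs.get)
        out.append(token)
        if token == "<eos>":
            break
    return out[len(prompt):]

# Hypothetical step-level "model": distribution over the next step given the last one.
TABLE = {
    "<goal: tomato and eggs>": {"heat the pan": 0.6, "crack the eggs": 0.4},
    "heat the pan": {"pan is hot": 0.9, "<eos>": 0.1},
    "pan is hot": {"crack the eggs": 0.7, "<eos>": 0.3},
    "crack the eggs": {"eggs are setting": 0.8, "<eos>": 0.2},
    "eggs are setting": {"<eos>": 1.0},
}

steps = greedy_decode(lambda seq: TABLE[seq[-1]], ["<goal: tomato and eggs>"])
print(steps)  # ['heat the pan', 'pan is hot', 'crack the eggs', 'eggs are setting', '<eos>']
```

Once "heat the pan" wins the first argmax, the alternative opening is never revisited; that is the gap System-2 is designed to close.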

System‑2, called Reflective Planning, aims to perform cost‑minimizing search and adds the two missing ingredients for reasoning about alternatives:

- rollouts for multiple possible futures

- that are scored with a critic that measures semantic distance to goal.

Then the model picks the lowest‑cost plan according to the critic’s scores:

This is LeCun’s “world model + energy/cost” idea applied to textual states. And this architecture has two main components:

- actor / proposer that suggests candidate next steps or partial plans; it can be the VLWM itself via high-temperature (diverse) sampling or an external LLM restricted by domain constraints; the VLWM then rolls out each candidate to predict the resulting states;

- critic (cost model), a small LM which is trained in a self‑supervised way to assign lower cost to valid progress and higher cost to distractors or shuffled steps.
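Putting the two components together, the simplest version of this search is best-of-N: propose candidate plans, let the world model roll each one out into action-state pairs, and keep the plan the critic scores lowest. The sketch assumes hypothetical `propose`, `rollout`, and `critic` callables; the paper's search can also expand partial plans step by step, which this whole-plan version omits.

```python
from typing import Callable, List, Optional, Tuple

Trajectory = List[Tuple[str, str]]  # (action, predicted world-state change)

def reflective_plan(propose: Callable[[str, int], List[List[str]]],
                    rollout: Callable[[List[str]], Trajectory],
                    critic: Callable[[str, Trajectory], float],
                    goal: str, num_candidates: int = 8) -> Tuple[Optional[Trajectory], float]:
    """Best-of-N search: roll out every proposed plan and return the lowest-cost one."""
    best, best_cost = None, float("inf")
    for actions in propose(goal, num_candidates):
        trajectory = rollout(actions)          # world model predicts the state after each action
        cost = critic(goal, trajectory)        # lower means closer to the goal
        if cost < best_cost:
            best, best_cost = trajectory, cost
    return best, best_cost

# Toy stand-ins (hypothetical): the critic counts missing required steps plus a small length penalty.
REQUIRED = {"heat the pan", "crack the eggs", "season"}
CANDIDATES = [
    ["heat the pan", "crack the eggs", "season"],
    ["crack the eggs", "season"],
    ["heat the pan", "crack the eggs", "season", "photograph the plate"],
]
plan, cost = reflective_plan(
    propose=lambda goal, n: CANDIDATES[:n],
    rollout=lambda actions: [(a, f"state after '{a}'") for a in actions],
    critic=lambda goal, traj: len(REQUIRED - {a for a, _ in traj}) + 0.1 * len(traj),
    goal="cook eggs",
)
```

The toy critic penalizes the incomplete plan and the plan with a presentational extra step, so the complete three-step plan wins.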

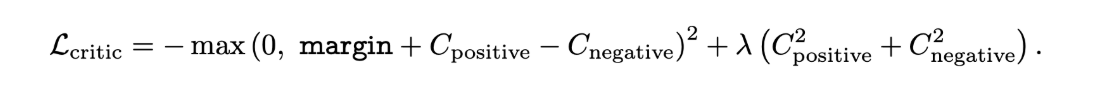

To train the critic, the authors use a margin‑ranking objective across three pair types: ⟨good, base⟩, ⟨base, bad⟩, ⟨base, shuffled⟩, with a more or less standard triplet loss
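In symbols, for each pair ⟨p, n⟩ where p should cost less than n, the critic C pays a hinge penalty max(0, Δ + C(p) − C(n)) with margin Δ. Below is a minimal sketch of that objective under our reading of the pair types: "good" extends "base" with correct progress (so it should cost less), while "bad" adds distractor steps and "shuffled" permutes steps (so both should cost more). The paper's exact formulation, including any extra regularization terms, may differ.

```python
from typing import Callable

def hinge(low_cost: float, high_cost: float, margin: float) -> float:
    """Zero once high_cost exceeds low_cost by at least `margin`."""
    return max(0.0, margin + low_cost - high_cost)

def critic_ranking_loss(cost: Callable[[str], float], base: str, good: str,
                        bad: str, shuffled: str, margin: float = 1.0) -> float:
    return (hinge(cost(good), cost(base), margin)        # progress should lower the cost
            + hinge(cost(base), cost(bad), margin)       # distractor steps should raise it
            + hinge(cost(base), cost(shuffled), margin)) # wrong order should raise it

# Toy costs standing in for a critic's outputs on four trajectory texts.
toy = {"base": 1.0, "good": 0.0, "bad": 2.5, "shuffled": 1.5}
loss = critic_ranking_loss(toy.get, "base", "good", "bad", "shuffled")
print(loss)  # 0.5: only the shuffled pair violates the margin
```

No human labels are needed: all three negatives and the positive are constructed mechanically from existing trajectories.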

It is in essence self-supervised training:

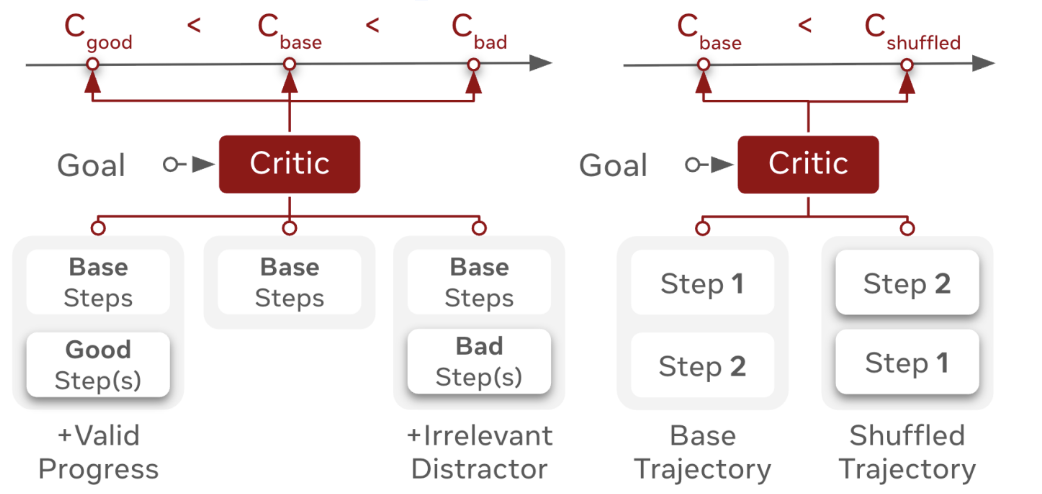

How do you evaluate a planner like this? The authors created a separate benchmark called PlannerArena, similar in spirit to LMArena (Chiang et al., 2024): human evaluators choose the better of two plans generated by anonymous models. Here is a sample task together with a table of results from the paper:

As you can see, on PlannerArena System‑2 beats System‑1 by a wide margin (Elo 1261 vs. 992) and outperforms strong MLLMs; on VPA, it yields consistent gains at longer horizons. As a standalone model, the critic also achieves a state-of-the-art 45.4% on the WorldPrediction‑PP benchmark. These are exactly the behaviors we want from a cost function: it favors coherent, goal‑directed progress, penalizes irrelevant or incorrectly ordered steps, and generalizes beyond training domains.
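To get a feel for what a 269-point Elo gap means, the standard Elo formula converts a rating difference into an expected head-to-head score. This assumes PlannerArena uses the conventional 400-point logistic scale, which we have not verified.

```python
def elo_expected_score(r_a: float, r_b: float) -> float:
    """Expected score of player A against B under the standard Elo model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

p = elo_expected_score(1261, 992)
print(f"{p:.3f}")  # 0.825
```

Under that assumption, a System-2 plan would be preferred over a System-1 plan in roughly 82% of pairwise comparisons.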

Conclusion: The Good, The Bad, and The Open

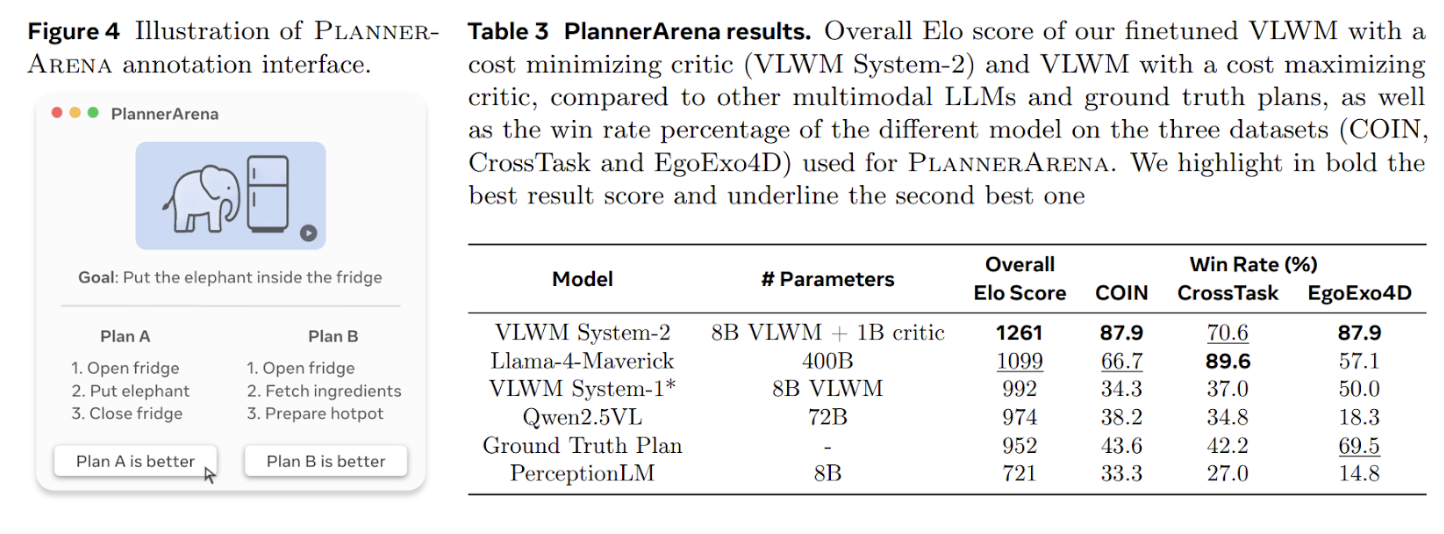

As you would expect, VLWM beats previous state-of-the-art approaches on VPA (visual planning for assistance) benchmarks such as COIN (Tang et al., 2019) and CrossTask (Zhukov et al., 2019):

Moreover, it beats the state of the art on the RoboVQA benchmark (Sermanet et al., 2024) for visual question answering in robotic contexts, and its critic, a mere 1B-parameter model, outperforms 8B models on the WorldPrediction benchmark (Chen et al., 2025), which tests recognizing goal achievement and predicting world states from provided plans.

But every new paper has to beat some benchmarks, otherwise it wouldn’t be published. So what are the main advantages of this approach, and what might make it an important milestone?

I have praised VLWM’s advantages throughout this post, so this part is mostly a summary. Here are the main ones:

- a crisp abstraction for planning: treating the world state as text and unrolling plans as interleaved ⟨action, Δstate⟩ pairs gives you a planning interface that’s interpretable, compositional, and cheap to roll out; it fits the “world‑model + cost minimization” picture while avoiding pixel generation and opaque latents;

- a scalable supervision pipeline: the Tree‑of‑Captions → Self‑Refine route converts raw, uncurated videos into text usable for planning with explicit goal interpretation and state sentences; the paper shows that this pipeline can scale to hundreds of thousands of videos and millions of trajectories;

- System‑2 that actually helps: searching over future states with a language critic is a sensible idea, and indeed human raters prefer plans produced with VLWM’s System‑2 by a wide margin in PlannerArena;

- practical engineering tricks: the Tree of Captions is linearized by depth-first traversal (DFS), which keeps LLM context length under control; goal interpretation as an explicit text block makes the cost function well‑posed for the critic (and ablations show that removing interpretations or state text hurts, especially out of domain).

Still, important questions and problems remain:

- how faithful is the text state? the state paragraphs come from Self‑Refine over caption trees, and while they look structured and useful, they are still derived text that can contain errors or omissions, which will propagate into training targets through the ToC → Self‑Refine pipeline;

- evaluation is relatively small and of uneven quality: PlannerArena is a great complement to VPA, but the study is of modest size, and the absolute quality of the generated plans is generally low;

- moreover, the PlannerArena dataset has uncovered that ground truth plans in the COIN and CrossTask datasets are weak, “barely better than the worst performing models, respectively 43.6% and 42.2%, highlighting a major issue with current procedural planning datasets”; this means that evaluation on these datasets can be misleading, but it looks like we don’t have anything better—more work is needed in visual planning evaluation;

- critic generalization depends on the state text: the critic shines on in‑distribution action‑state trajectories, but performance drops on action‑only OOD plans; this means that the explicit state helps and is a key component (which is good), but it is also a reminder that the method expects that text state to exist at test time and be faithful to the situation, which may be harder than it looks;

- language states may not scale to more involved tasks: related to the previous point, while text is excellent for task semantics (“pan is hot; eggs seasoned”), it may be too coarse for fine geometry or safety‑critical constraints without additional lower‑level controllers; VLWM stays at the level of high‑level planning, and bridging it to, say, dexterous robotic control would be hard not just computationally but conceptually.

To put these limitations in perspective: VLWM can plan how to cook an omelet but would struggle with the precise wrist movements needed to flip it. It can describe that “the shelf is assembled” but might miss that one screw is loose since it wouldn’t make it into a general scene description. These are not fatal flaws but rather boundaries of what language-based planning can currently achieve.

In total, VLWM is a thoughtful and well‑engineered step toward interpretable world‑model planning. It keeps the JEPA‑style cost‑minimization narrative but makes the state textual, which enables explicit goal interpretations, state tracking, and cheap rollouts. The empirical gains and the critic’s behavior are convincing, but there are big open items: fidelity (how accurate the derived text states are), coverage (how to operate when state text isn’t available at test time), and generalization to lower‑level control.

But still, it looks like Chen et al. (2025) made a very significant step forward. Perhaps most intriguingly, VLWM opens the door to human-AI collaborative planning. Since plans are in plain English, domain experts could review and edit them before execution; imagine a surgeon correcting a surgical robot's plan or a chef tweaking a cooking assistant's recipe. This interpretability might be the key to deploying AI planners in high-stakes environments where we need to understand and trust their reasoning.

VLWM also gives the research community a solid foundation to build on. Since the models are modest in size (the critic is a 1B-parameter LLM), if the authors open-source their work as promised, it could become a common starting point for assistance planners. While Meta gets a lot of criticism for its extra openness and lack of safety in its frontier LLMs (well-deserved criticism, in my opinion!), in a domain like this one, where the AI models are still relatively weak, openness can only be a good thing, enabling further progress in planning and robotics in general. Let’s wait and see what the community builds on this.
