Have you ever tried to explain a complex idea, felt like you knew exactly what you wanted to say, but struggled to find the right words? This (sometimes frustrating) gap between having an idea and expressing it in language is something we all experience. And usually, it’s productive rather than frustrating: we can think better since we are not forced to think in words. Today, we will see how this idea is becoming an important direction in AI research.

Introduction

Imagine you are trying to explain a complex idea to a friend. You know exactly what you want to convey—there is a complete thought sitting in your mind, with all of its meaning and implications. But as you begin to speak, you have to serialize that thought into words, one after another, fitting the meaning through the narrow bottleneck of language. Sometimes the words flow smoothly. Other times you stumble, backtrack, or realize that the current sentence doesn’t go anywhere and has to be reworked. The frustrating gap between having an idea and expressing it in language is something we all experience.

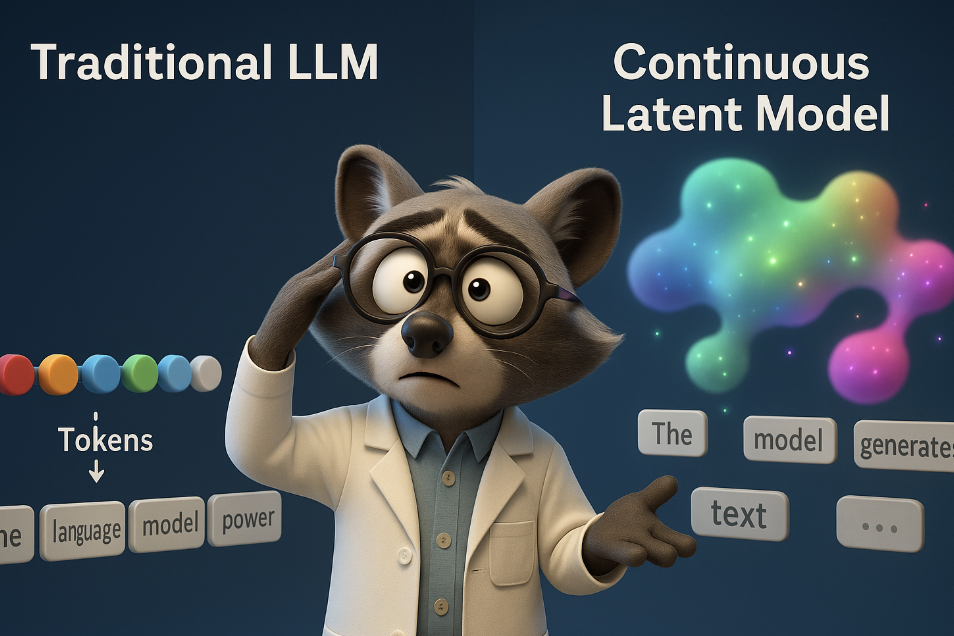

Now imagine being required to think in the same serial, word-by-word fashion. Not just speak that way, but actually reason that way, not being able to hold multiple possibilities in superposition or sketch a high-level plan without committing to specific words. Imagine that you have to write down every intermediate thought in explicit language before moving to the next step. It would be cognitively suffocating. And yet, this is exactly how most large language models work today.

Most large language models today are, in essence, sophisticated word predictors. They generate text one token at a time, predicting "the," then "cat," then "sat," then "on"... This sequential march through the vocabulary space has worked remarkably well (after all, here we are in 2025 with impressively capable LLMs), but it comes with some constraints. For example, a language model cannot “sketch out” a high-level plan before diving into details (let’s not go into reasoning models just yet). It can't compress multiple ideas into a single thought and manipulate them together. In a way, it is perpetually stuck in the granular world of individual words or, more exactly, tokens (word fragments).

These constraints are becoming increasingly apparent as we push models toward more complex reasoning and longer-horizon tasks. Interestingly, token-by-token generation is also quite different from how human cognition seems to work: neuroscience suggests we often think in non-linguistic abstractions, with language being more of an interface layer than the substrate of thought itself.

But what if we could let models operate at higher levels of abstraction? What if, instead of predicting words, they could predict meanings, i.e., continuous vectors representing entire sentences, paragraphs, or concepts? What if they could reason through multiple steps internally, in continuous latent space, only surfacing to language when they need to communicate their conclusions?

This is the core idea behind continuous latent space language models, and after years of false starts and lukewarm results, we are suddenly seeing genuine breakthroughs. The convergence of three developments has made this possible:

- high-quality pretrained embedding spaces that actually capture semantic meaning across languages,

- autoencoders that can compress text chunks into continuous vectors with near-perfect fidelity, and

- new training methods, such as diffusion models, energy-based learning, and curriculum techniques, that can handle continuous distributions well.

In this post, we explore several approaches that use these advances, understand what makes them tick, and then zoom out to consider the bigger picture: what does it mean to move beyond tokens? What advantages can it bring—and are they worth it?

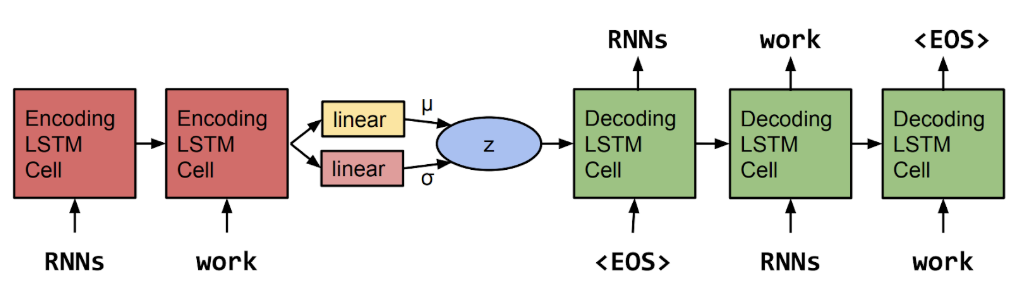

The Old Dream: VAEs and Sentence Embeddings

The idea isn't new. Almost ten years ago, Bowman et al. (2016) trained a variational autoencoder (VAE) that could encode entire sentences into continuous vectors, then decode them back. It was back in the good ol’ days before Transformers, so the encoder and decoder were both RNNs:

They even showed you could interpolate between sentences in this learned space, gradually morphing completely different sentences into one another by walking through the vector space:

It was an elegant approach, and it suggested that text really could have a smooth, continuous representation.
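To make the interpolation idea concrete, here is a minimal sketch in Python. It only shows the walk through the vector space: the two latent vectors are made-up toy values standing in for encoder outputs, the helper name `interpolate` is hypothetical, and the encoder and decoder of a real VAE are not shown.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` evenly spaced points on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("need at least two steps (the two endpoints)")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 (start) to 1.0 (end)
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


# Toy latents (assumed values); in a real model these come from the encoder.
z_a = [0.0, 1.0, -2.0]
z_b = [4.0, -1.0, 2.0]
path = interpolate(z_a, z_b, steps=5)
```

In a trained model, each point on `path` would be passed through the decoder, and the hope is that the decoded sentences change gradually from one endpoint to the other.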

But there was a catch. Actually, several catches. The model often suffered from "posterior collapse", where the decoder learns to ignore the latent vector entirely and just generates generic sentences. Training was brittle and easy to break. And most importantly, these models could not compete with good old-fashioned token-by-token language models on practical tasks. The dream was mostly forgotten as Transformers conquered the NLP world.

But now, in 2024-2025, we are suddenly seeing a resurgence of this old idea. What changed? Basically, three things:

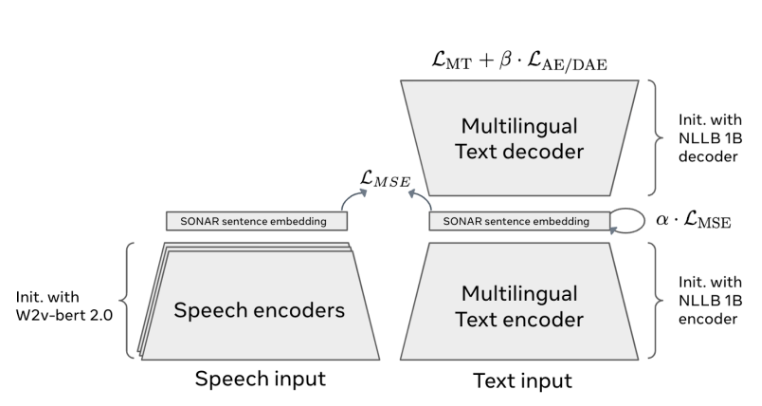

- better embedding spaces: now we have pretrained sentence encoders (like SONAR) that actually work across 200+ languages;

- high-fidelity compression: now we have autoencoders that can compress multi-token chunks with 99.9%+ reconstruction accuracy;

- new training methods: diffusion models, energy-based learning, and other techniques that do not use traditional likelihood-based objectives and are better suited for continuous latent spaces.

In this post, we consider three recent approaches that each tackle a different piece of the puzzle.

Large Concept Models: Teaching Machines to Think in Sentences

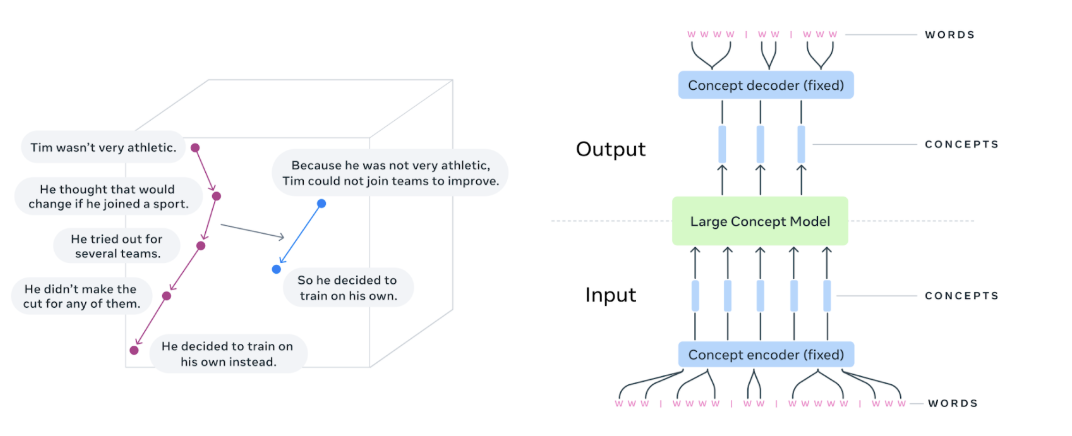

Meta's Large Concept Model (LCM; LCM team, 2024) takes a delightfully straightforward approach: why not just build a language model that operates on sentence embeddings instead of tokens?

Here's how it works. They start with SONAR (Duquenne et al., 2023), a pretrained encoder that can map sentences from any of 200 languages (and even speech!) into a shared embedding space. Each sentence becomes a point in this high-dimensional semantic space. Here is the general illustration of how SONAR is trained (Duquenne et al., 2023):

Then they train a Transformer, but instead of predicting the next token, it predicts the next sentence embedding. The model sees a sequence of concept vectors (one per sentence) and outputs the concept vector for the next sentence. Finally, SONAR's decoder converts that predicted vector back into actual text in whatever language you want. Here is the LCM’s main illustration, with the summarization problem conceptualized in sentence latent space on the left and the model structure on the right (LCM team, 2024):

Think about what this means. The model is still doing sequence modeling, but now it is one level up the abstraction hierarchy. Instead of "the → cat → sat → on → the → mat", it's more like "setup_scene → introduce_character → describe_action". Each prediction carries much more semantic weight.

They tried several ways to make this work:

- direct regression: just predict the embedding with MSE loss (treat it like regression);

- diffusion: use a diffusion model to gradually denoise a random vector into the target embedding;

- quantization: discretize the embedding space into codes and predict those.
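The first option, direct regression, is the easiest to sketch. The toy Python below is only an illustration under stated assumptions: `next_concept_loss` and `copy_last` are hypothetical names, the 2-d vectors are made-up stand-ins for sentence embeddings (real SONAR vectors are much larger), and the "model" is a trivial baseline rather than a Transformer.

```python
def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def next_concept_loss(predict_next, concepts):
    """Average MSE of predicting concept i+1 from the prefix concepts[0..i]."""
    losses = []
    for i in range(len(concepts) - 1):
        pred = predict_next(concepts[: i + 1])  # the model sees the prefix only
        losses.append(mse(pred, concepts[i + 1]))
    return sum(losses) / len(losses)


def copy_last(prefix):
    """Hypothetical baseline 'model': repeat the last concept vector unchanged."""
    return prefix[-1]


# Toy "document" of three sentence embeddings (assumed values).
doc = [[0.0, 0.0], [1.0, 0.0], [1.0, 2.0]]
loss = next_concept_loss(copy_last, doc)
```

The structure mirrors next-token training, except that the target at each position is a vector and the loss is a distance rather than a cross-entropy over a vocabulary.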

After scaling to 7B parameters and training on 2.7 trillion tokens worth of data, they got some impressive results. The model showed strong zero-shot cross-lingual transfer: it’s no problem to train on English and evaluate on Korean. It produced less repetitive text than token-level models, probably because it's harder to get stuck in local loops when you're operating on sentence-level meanings. And it particularly excelled at tasks like summarization, where you need to capture high-level content.

However, LCM still represents continuous latent space modeling in its early days. A 7B LCM doesn't beat a 70B token-level model on everything. Information can get lost when you compress to the level of whole sentences: fine-grained details, exact word choices, subtle grammatical points. And the embedding space itself (SONAR), while excellent, isn’t perfect, and any biases or limitations of the embedding space will be inherited by the LCM.

But with this work, the paradigm shift is becoming real: we are no longer bound to generating word by word. Let us see some other manifestations of this.

CALM: Speed Through Chunking

While LCM focuses on semantic abstraction, CALM (Continuous Autoregressive Language Model) from Tencent and Tsinghua researchers Shao et al. (October 31, 2025! fresh out of the oven) tackles efficiency. Their basic idea is simple: generating one token at a time is slow, so what if we could generate chunks of several tokens at once?

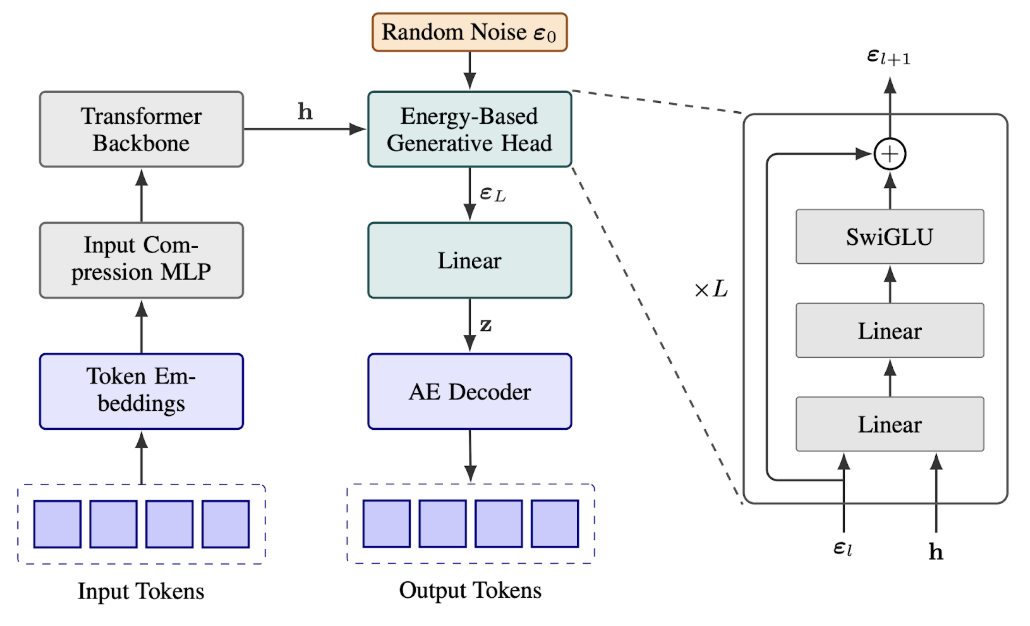

On the face of it, it’s a pretty standard idea: e.g., DeepSeek-V3 already used multi-token prediction to speed up generation. But CALM introduces a clever new architecture built from three distinct components:

- A high-fidelity autoencoder that compresses K tokens (typically K=4) into a single continuous latent vector, and can decode it back nearly perfectly. The reported reconstruction accuracy is >99.9%, meaning that almost no information is lost in this compression.

- A Transformer backbone, which, instead of predicting the next token, predicts the next latent vector. The input isn't raw latents from the previous step (they found that led to lower performance), but rather the actual K tokens that were decoded, re-embedded and compressed through a small MLP.

- An energy-based predictor: this is the tricky part—how do you predict a continuous vector? They use a generative head that takes the Transformer's hidden state plus random noise and produces the next latent. This is trained with an energy-based loss (specifically, the energy score) rather than traditional cross-entropy.

Here is the full illustration of the model structure by Shao et al. (2025):

The result is a closed loop, text → latent → text → latent, in which every prediction is made in continuous space and discrete tokens reappear only when a latent is decoded.
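The energy score mentioned above can be estimated purely from samples, which is what makes it usable for a head that outputs vectors rather than probabilities. Below is a minimal sketch of a generic Monte Carlo energy score in Python; the function names are hypothetical, and the exact normalization and number of samples used by Shao et al. may differ.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def energy_score_loss(samples, target):
    """Monte Carlo energy score (lower is better) for one target vector."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate the spread term")
    # Term 1: average distance from each sample to the true latent.
    to_target = sum(distance(s, target) for s in samples) / n
    # Term 2: average distance between distinct pairs of samples.
    pair_dists = [distance(samples[i], samples[j])
                  for i in range(n) for j in range(i + 1, n)]
    spread = sum(pair_dists) / len(pair_dists)
    # Subtracting half the spread rewards matching the whole distribution,
    # not just landing near the target on average.
    return to_target - 0.5 * spread


target = [3.0, 4.0]
collapsed = [[0.0, 0.0], [0.0, 0.0]]  # identical samples, far from the target
on_target = [[3.0, 4.0], [3.0, 4.0]]  # identical samples, exactly right
loss_collapsed = energy_score_loss(collapsed, target)  # 5.0
loss_on_target = energy_score_loss(on_target, target)  # 0.0
```

In training, `samples` would come from running the generative head several times with different noise on the same hidden state, and `target` would be the autoencoder's latent for the true next chunk.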

This indeed leads to a substantial speedup. With K=4, the authors report ~44% reduction in training FLOPs and ~34% reduction in inference FLOPs compared to standard transformers, with performance matching token-level models of equivalent size. Essentially, they're generating four tokens per forward pass instead of one, which directly translates to faster generation.
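The step-count arithmetic behind this is simple enough to write down. The helper below is a hypothetical illustration, not something from the paper; it counts autoregressive steps only, which is one factor in total compute, so it should not be read as a FLOPs estimate.

```python
import math


def autoregressive_steps(num_tokens, chunk_size):
    """Number of sequential generation steps when each step emits chunk_size tokens."""
    if num_tokens < 0 or chunk_size < 1:
        raise ValueError("num_tokens must be >= 0 and chunk_size >= 1")
    return math.ceil(num_tokens / chunk_size)


steps_token_level = autoregressive_steps(1000, 1)  # 1000
steps_chunked = autoregressive_steps(1000, 4)      # 250
```

Note that the reported FLOPs savings are smaller than this 4x drop in the number of steps.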

The success of this approach is probably directly related to near-lossless compression: the 99.9% reconstruction accuracy of their autoencoder appears to be crucial. Earlier attempts at chunk-based generation failed partly because the compression was lossy, while CALM works because operating in latent space doesn't sacrifice fidelity. The other side of this coin is that you can’t really pack too much information into the latent vector. The sweet spot seems to be K=4: with longer token sequences (K=8 or K=16) you start getting semantic drift, as errors accumulate in the continuous space and lead to incoherent text after many steps.

There is one additional challenge here: since CALM doesn't output token probabilities, you can't use standard perplexity for evaluation. So Shao et al. introduced a new metric called BrierLM based on the Brier score, which measures the quality of predicted distributions without needing explicit probabilities. While I’m not sure it’s the best metric possible, this too is uncharted territory: we have to reinvent how we evaluate language models.
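To see how a Brier-style score can be computed without explicit probabilities, here is a sketch of the general sampling trick in Python. For a model distribution P and a true outcome y, the quantity 2P(y) − Σₓ P(x)² can be estimated from pairs of independent samples alone. The function names and the toy two-word "model" are assumptions for illustration, and the full BrierLM metric involves more than this single-position estimate.

```python
import random


def brier_estimate(sample_fn, target, num_pairs, rng):
    """Estimate 2*P(target) - sum_x P(x)^2 using only samples from the model."""
    total = 0
    for _ in range(num_pairs):
        x1, x2 = sample_fn(rng), sample_fn(rng)  # two independent draws
        # Hits on the target estimate 2*P(target); a collision between the
        # two draws estimates sum_x P(x)^2.
        total += (x1 == target) + (x2 == target) - (x1 == x2)
    return total / num_pairs


def toy_model(rng):
    """Hypothetical sampler: emits 'cat' with probability 0.7, else 'dog'."""
    return "cat" if rng.random() < 0.7 else "dog"


rng = random.Random(0)
estimate = brier_estimate(toy_model, "cat", num_pairs=20000, rng=rng)
# The exact value for this toy model is 2*0.7 - (0.7**2 + 0.3**2) = 0.82.
```

A model that always produces the correct outcome scores 1, and one that always produces the same wrong outcome scores −1, so higher is better.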

Coconut: Thinking Without Words

And now for something even more radical: what if the model doesn't need to express its reasoning in language at all?

When we use chain-of-thought reasoning, we are essentially forcing the model to "think out loud", generating intermediate reasoning steps as text. But human reasoning isn't always verbal. Close your eyes and solve a spatial puzzle like putting a rat through a maze—where is the linguistic component? Often, there isn't one. Neuroscience suggests the brain's language centers can be inactive during complex reasoning tasks.

Coconut (Chain of Continuous Thought; work by Meta researchers Hao et al., 2024) explores this in LLMs. Instead of generating reasoning steps as tokens, the model performs reasoning iterations in a continuous latent space. It maintains a hidden state representing its "current thought," feeds it back into itself multiple times to refine the reasoning, and only converts to language at the very end to output the answer.

The implementation uses the Transformer's last hidden layer as the latent thought representation. During training, they use curriculum learning to gradually shift from normal chain-of-thought (where intermediate steps are visible text) to pure latent reasoning (where steps happen internally). Special tokens signal when the model should stay in “latent mode” versus when to decode to text:
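The feedback loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: `latent_reasoning` is a hypothetical name, and `toy_model` (which just averages its inputs) stands in for a Transformer that maps a sequence of input vectors to a last hidden state. Curriculum training and the special mode-switching tokens are not shown.

```python
def toy_model(sequence):
    """Hypothetical stand-in for a Transformer: element-wise mean of the inputs."""
    dim = len(sequence[0])
    return [sum(vec[d] for vec in sequence) / len(sequence) for d in range(dim)]


def latent_reasoning(model, prompt_embeddings, num_thoughts):
    """Run num_thoughts continuous 'thought' steps, then return the final state."""
    sequence = list(prompt_embeddings)
    for _ in range(num_thoughts):
        hidden = model(sequence)  # last hidden state for the current sequence
        sequence.append(hidden)   # fed back as the next input; no token is decoded
    final_state = model(sequence)  # only this state would be decoded into text
    return final_state, sequence


# Toy prompt embeddings (assumed values).
prompt = [[0.0, 4.0], [2.0, 0.0]]
final_state, sequence = latent_reasoning(toy_model, prompt, num_thoughts=2)
```

The key difference from ordinary chain-of-thought is the `sequence.append(hidden)` line: a standard model would sample a token from the hidden state and append that token's embedding instead.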

The results are convincing: on complex logical reasoning tasks, Coconut outperformed standard chain-of-thought techniques while often needing fewer total steps. Why? One theory is that a continuous latent state can represent a superposition of multiple reasoning paths simultaneously. Unlike text, which forces you to commit to one specific phrase, a vector can encode several possibilities at once. This enables something like breadth-first search in reasoning, exploring many branches in parallel before converging on an answer.

There's also a practical efficiency benefit: if the model does 10 reasoning steps internally but only outputs 1 answer token, you are only “charged” for 1 token worth of generation.

While Coconut is still not the best LLM around, and it appears that this direction has not become dominant over the last year, it is still a compelling proof of concept: latent spaces can reshape not just generation efficiency but the reasoning process itself.

The Safety Elephant in the Room

But there is something we need to talk about, and it's not all excitement and speedups.

When models reason in continuous latent spaces, they become fundamentally less interpretable. With traditional chain-of-thought, we can read exactly what the model is thinking: "First, I'll check if the number is prime. It's divisible by 3, so it's not prime. Therefore..." We can audit this reasoning, spot errors, understand failure modes, and detect potentially harmful reasoning patterns. With Coconut-style latent reasoning, we lose all of that. The model's "thoughts" are now 1024-dimensional vectors that no human can directly parse.

This isn't just a slight disadvantage—it may be a key deficiency at the heart of AI alignment and safety research. A significant portion of current alignment work relies on being able to observe and shape model reasoning. We use techniques like process supervision (rewarding correct reasoning steps, not just correct answers), we train models to explain their thinking, we use interpretability tools to understand what happens inside the model. Constitutional AI and related approaches depend on being able to intervene in the reasoning process. If reasoning moves into an opaque latent space, many of these techniques become much harder or impossible.

Consider the implications for detecting deceptive behavior. One major safety concern is that advanced AI systems might learn to behave helpfully during training while planning harmful actions for deployment, so-called "deceptive alignment" (Hubinger et al., 2019). Our main defense against this is transparency: if we can see the model's reasoning process, we can potentially spot when it's reasoning about how to deceive us. But if the model reasons in continuous vectors, this defense evaporates. The model could be doing arbitrarily complex planning in latent space, and we'd have no direct way to know what it was planning. As Anthropic's work on "sleeper agents" (Hubinger et al., 2024) demonstrated, models can learn to behave differently in training versus deployment in ways that are hard to detect, and that's with current interpretable models! Latent reasoning could make such detection vastly harder.

The mechanistic interpretability community has made remarkable progress in recent years in understanding how Transformers work internally: identifying circuits for specific behaviors, understanding how models store and retrieve factual knowledge, even finding "truth-telling" directions in activation space (Dunefsky et al., 2024; Golimblevskaia et al., 2025; see also the Transformer Circuits Thread). But this work fundamentally depends on being able to trace information flow through the model. When reasoning happens in a compressed continuous space that the model iteratively refines, interpretability becomes a much harder challenge. We might need entirely new tools to understand what's happening in these latent iterations.

Chain-of-thought reasoning provides at least some degree of explainability: “Here's what the model thought, step by step”. Latent reasoning offers none. We'd be building more powerful systems that are at the same time more opaque. It doesn’t mean that we shouldn't pursue continuous latent space models, but it does mean we need to think carefully about the tradeoffs.

Conclusion

Let us summarize. In this post, we have discussed three complementary directions that emerge along similar lines:

- LCM provides semantic abstraction: think in concepts, not words;

- CALM tries to achieve generation efficiency: produce chunks, not tokens;

- Coconut changes reasoning structure: plan internally and continuously, speak externally.

These could potentially be combined. Imagine a model that:

- uses Coconut-style latent reasoning to plan out a response,

- generates a sequence of LCM-style concept vectors, and at the same time

- employs CALM-style chunk generation to rapidly convert every concept to text.

This model would be a genuine hierarchical system operating on multiple levels of abstraction simultaneously; multi-scale language modeling, if you will.

Naturally, many problems still remain. First, training is harder: these models require specialized techniques such as energy-based learning, curriculum training, or diffusion objectives, which are harder to use and not as well understood as standard cross-entropy. Second, what about human interaction: how do you prompt a model that thinks in latent vectors? Text prompts work because we share the token space with the model. Latent space prompting is uncharted territory.

But the potential upside is also quite significant. The token-by-token paradigm has dominated NLP for years because it worked. Now we are finally at a point where we can credibly challenge it: high-quality embedding spaces already exist, autoencoders can compress with minimal loss, and training techniques that can handle continuous distributions have been around for a while now.

We are not replacing token-level models overnight; they will remain dominant for a while, and hybrid approaches are likely. But it is a possible path forward: language models are learning to think in larger units, to reason without verbalizing every step, and to operate in continuous semantic spaces that might more closely resemble how humans actually process meaning. Just please, beware the safety warnings.
